At its core, data annotation is the process of labeling or tagging data so that machine learning models can make sense of it. Think of it as creating a study guide for AI. Humans essentially point out what's important in a sea of raw information, teaching the AI what to look for.

Understanding Data Annotation and Its Importance

Imagine you’re teaching a toddler what a "dog" is. You wouldn't just show them a dictionary definition. You'd point to a fluffy golden retriever at the park and say, "dog." You'd show them a picture of a tiny chihuahua and say, "That's a dog, too." Each time you do this, you're labeling an example, helping the child build a mental model of what constitutes a dog.

Data annotation does the exact same thing, but for machines. It's the human touch that turns a jumble of raw data—like pixels in an image or sound waves in an audio file—into something an AI can understand and learn from.

Without this crucial step, a self-driving car's computer would just see a chaotic mess of shapes and colors, unable to tell the difference between a stop sign and a red balloon. It’s the annotation that provides the context.

To quickly grasp the core elements, this table breaks down the essentials of data annotation.

Data Annotation At A Glance

| Component | Description | Example |
|---|---|---|
| Raw Data | The initial, unprocessed information that needs labeling. | A folder containing 10,000 photos of street scenes. |
| Annotators | The people responsible for applying the labels accurately. | A team trained to draw boxes around every car in the photos. |
| Annotation Platform | The software or tool used to manage and apply the labels. | A program where annotators can easily draw boxes and assign tags. |
| Quality Control | The process of verifying the accuracy of the labels. | A senior annotator reviewing a sample of labeled images for errors. |

This systematic process ensures the data fed into an AI model is clean, accurate, and consistent.
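The table's components map naturally onto a simple record. The sketch below is a hypothetical data model, not any platform's schema: each `Annotation` ties a raw-data item to its label and annotator, and a quality-control step draws a random 5% sample for review and reports the error rate. The file names, labels, and sample fraction are illustrative assumptions.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Annotation:
    """One labeled item, linking raw data, its annotator, and the QC outcome."""
    item_id: str                      # e.g. a file name in the raw-data folder
    label: str                        # tag applied by the annotator
    annotator: str                    # who applied the label
    qc_passed: Optional[bool] = None  # None until a reviewer checks it

def sample_for_review(annotations: List[Annotation], fraction: float, seed: int = 0) -> List[Annotation]:
    """Draw a reproducible random sample for a senior annotator to review."""
    k = min(len(annotations), max(1, round(len(annotations) * fraction)))
    return random.Random(seed).sample(annotations, k)

def error_rate(reviewed: List[Annotation]) -> float:
    """Share of checked items that failed QC; unchecked items are ignored."""
    checked = [a for a in reviewed if a.qc_passed is not None]
    if not checked:
        return 0.0
    return sum(not a.qc_passed for a in checked) / len(checked)

# Hypothetical batch: 100 street-scene photos, each tagged "car".
batch = [Annotation(f"street_{i:05d}.jpg", "car", "annotator_a") for i in range(100)]
sample = sample_for_review(batch, fraction=0.05)
for a in sample:
    a.qc_passed = True       # reviewer agrees with the label...
sample[0].qc_passed = False  # ...except for one mislabeled photo
print(len(sample), error_rate(sample))
```

Sampling rather than checking every item is a cost trade-off; a high error rate in the sample is a signal to widen the review or revisit the guidelines.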

Ultimately, the performance of any AI system is a direct reflection of the quality of its training data. High-quality annotation leads to a smart, reliable AI; poor annotation leads to one that makes mistakes. That is why data annotation is critical for AI startups in 2025: it's not just a technical detail but a fundamental pillar of building successful AI products.

The 4 Main Types Of Data Annotation

Data annotation isn't a one-size-fits-all process. The right technique depends entirely on what you're trying to teach your AI model. Some methods are broad and simple, while others are incredibly granular, mapping out data with pixel-perfect precision.

Think of it like giving directions. Sometimes, a general "it's that building over there" is all you need. Other times, you have to trace the building's exact footprint on a map. Data annotation works the same way, with the complexity of the task dictating the level of detail required.

1. Image Annotation

This is what most people picture when they think of data annotation. It's the process of labeling visual data to train computer vision models.

For many projects, a simple bounding box is enough. Annotators draw a rectangle around an object, like a car or a pedestrian. This is a fast and efficient way to teach an AI to recognize the presence and general location of objects—a crucial first step for something like a self-driving car's perception system.
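A bounding box is usually stored as four numbers. The sketch below assumes an (x_min, y_min, x_max, y_max) pixel convention (formats vary; COCO, for instance, stores [x, y, width, height]) and computes Intersection over Union (IoU), a standard measure of how closely two boxes agree, which is handy when a reviewer checks an annotator's work. The `Box` type and the coordinates are illustrative.

```python
from typing import NamedTuple

class Box(NamedTuple):
    """Axis-aligned bounding box in pixel coordinates (top-left origin)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Negative widths/heights (no overlap) are clamped to zero area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)

def iou(a: Box, b: Box) -> float:
    """Intersection over Union: 1.0 means identical boxes, 0.0 means no overlap."""
    inter = Box(max(a.x_min, b.x_min), max(a.y_min, b.y_min),
                min(a.x_max, b.x_max), min(a.y_max, b.y_max)).area()
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0

annotator_box = Box(100, 50, 200, 150)  # 100 x 100 box around a car
reviewer_box = Box(110, 60, 210, 160)   # same size, shifted 10 px
print(round(iou(annotator_box, reviewer_box), 3))
```

A project might, for example, treat boxes below some IoU threshold as a disagreement worth a second look; the right threshold depends on the task.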

When the stakes are higher and the AI needs a deeper understanding, the annotation has to get more detailed. For instance, you can't train a medical AI to spot tumors with a simple box. You need something far more precise.

Semantic segmentation is the technique for this. It involves classifying every single pixel in an image. An annotator would meticulously label all the pixels that make up a tumor as "tumor" and the surrounding pixels as "healthy tissue," giving the AI a highly detailed map to learn from.
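Under the hood, a semantic segmentation label is simply a mask the same size as the image, holding one class ID per pixel. This minimal sketch uses plain nested lists and made-up class IDs (0 = background, 1 = healthy tissue, 2 = tumor) rather than any particular tool's export format.

```python
from collections import Counter

# Hypothetical class IDs for this sketch; real projects define their own.
CLASSES = {0: "background", 1: "healthy tissue", 2: "tumor"}

# A tiny 4x5 "scan" where every pixel carries exactly one class ID.
mask = [
    [0, 0, 1, 1, 1],
    [0, 1, 1, 2, 1],
    [0, 1, 2, 2, 1],
    [0, 0, 1, 1, 1],
]

def pixel_counts(mask):
    """Count how many pixels were assigned to each class."""
    counts = Counter(pixel for row in mask for pixel in row)
    return {CLASSES[c]: n for c, n in sorted(counts.items())}

def binary_mask(mask, class_id):
    """Extract a 0/1 mask for one class, e.g. to measure a tumor's area."""
    return [[1 if p == class_id else 0 for p in row] for row in mask]

print(pixel_counts(mask))
```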

Another key technique is polygon annotation, which involves tracing the exact outline of an object. This is perfect for irregularly shaped items where a bounding box would capture too much background noise. Think of identifying specific products on a cluttered supermarket shelf.
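A polygon annotation is an ordered list of vertices. As a rough illustration of why polygons help with irregular shapes, the sketch below computes a polygon's area with the shoelace formula and compares it with the tightest bounding box around the same outline. The L-shaped outline is hypothetical.

```python
def polygon_area(points):
    """Shoelace formula: area of a simple polygon from its ordered vertices."""
    n = len(points)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the outline
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0

def enclosing_box_area(points):
    """Area of the tightest axis-aligned bounding box around the same vertices."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))

# A hypothetical L-shaped product outline traced vertex by vertex.
outline = [(0, 0), (40, 0), (40, 10), (10, 10), (10, 50), (0, 50)]

poly = polygon_area(outline)
box = enclosing_box_area(outline)
print(poly, box, f"{1 - poly / box:.0%} of the box is background")
```

For this shape, most of the bounding box is background, which is exactly the noise a polygon avoids.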

Finally, there’s keypoint annotation. Imagine a smart fitness app that corrects your exercise form. It doesn't need to see your full outline, just the position of your joints. By placing dots on your shoulders, elbows, and knees, keypoint annotation teaches an AI to understand posture, movement, and gestures.
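Keypoint annotations are just named (x, y) coordinates. Here is a minimal sketch, assuming hypothetical pixel coordinates for a shoulder, elbow, and wrist, of how an app might turn them into a joint angle that it could then compare against a target range for an exercise.

```python
import math

# Hypothetical keypoints for one video frame, in pixel coordinates.
keypoints = {
    "shoulder": (100.0, 100.0),
    "elbow": (100.0, 200.0),
    "wrist": (200.0, 200.0),
}

def joint_angle(a, b, c):
    """Angle at point b (degrees) formed by segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

elbow = joint_angle(keypoints["shoulder"], keypoints["elbow"], keypoints["wrist"])
print(f"elbow angle: {elbow:.1f} degrees")
```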

To help clarify which method to use, here’s a quick breakdown of common image annotation techniques.

Comparing Image Annotation Techniques

| Annotation Type | Primary Use Case | Detail Level |
|---|---|---|
| Bounding Boxes | General object detection and localization. | Low |
| Polygon Annotation | Precisely outlining irregular or occluded objects. | Medium-High |
| Semantic Segmentation | Scene understanding, medical imaging, autonomous driving. | Very High |
| Keypoint Annotation | Pose estimation, facial recognition, gesture tracking. | High (for specific points) |

Each of these methods offers a different trade-off between speed, cost, and the level of detail the final model will have.

2. Text Annotation

Annotation isn't just for pictures. Text annotation is the foundation of Natural Language Processing (NLP), the technology that helps machines understand human language.

Some common examples include:

- Sentiment Analysis: This involves tagging text—like customer reviews or social media posts—as positive, negative, or neutral. Companies use this to get a real-time pulse on public opinion.

- Named Entity Recognition (NER): Here, annotators identify and classify key information in text, such as people's names, organizations, locations, dates, and monetary values. This is what allows you to ask a search engine, "Who was the CEO of Apple in 2010?" and get a direct answer.

- Text Categorization: This is the process of assigning predefined categories to a whole document or paragraph, like sorting news articles into "Sports," "Politics," or "Technology."
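Sentiment and categorization labels attach to a whole text, but NER labels attach to spans inside it. A common storage pattern is character offsets plus a label, which many NLP pipelines then convert to per-token BIO tags (B = beginning of an entity, I = inside, O = outside). This is a minimal sketch; the sentence, names, and label set (PERSON, ORG, LOC, DATE) are hypothetical, and the regex tokenizer is deliberately simple.

```python
import re

text = "Ana Silva joined Acme Corp in Lisbon in 2019."
# (start, end, label) with end exclusive, as in Python slicing.
spans = [(0, 9, "PERSON"), (17, 26, "ORG"), (30, 36, "LOC"), (40, 44, "DATE")]

def to_bio(text, spans):
    """Convert character-offset spans into per-token BIO tags."""
    tagged = []
    # Simple tokenizer: runs of word characters, or single punctuation marks.
    for m in re.finditer(r"\w+|[^\w\s]", text):
        tag = "O"  # outside any entity by default
        for start, end, label in spans:
            if start <= m.start() and m.end() <= end:
                # B- marks the first token of an entity, I- the rest.
                tag = ("B-" if m.start() == start else "I-") + label
                break
        tagged.append((m.group(), tag))
    return tagged

for token, tag in to_bio(text, spans):
    print(f"{token:8} {tag}")
```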

3. Audio Annotation

Ever wonder how voice assistants like Siri or Alexa understand you? The answer is audio annotation.

This process involves transcribing spoken words into text. But it often goes further, with annotators identifying who is speaking, flagging background noises like a dog barking or a car horn, and even labeling the emotional tone of a speaker. This rich, labeled data is what trains an AI to isolate your voice and accurately respond to your commands, even in a noisy room.
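Audio annotations are typically stored as time-stamped segments. The sketch below uses a hypothetical `Segment` record that combines a speaker label, a transcript, and an optional background-event tag, then computes speaking time per speaker and flags overlapping segments for a reviewer to confirm. Field names and sample values are assumptions, not a standard format.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Segment:
    """One time-stamped region of an audio clip (times in seconds)."""
    start: float
    end: float
    speaker: str         # e.g. "spk_1"; "none" for non-speech events
    transcript: str = ""
    event: str = ""      # background sound tag such as "dog_bark"

segments = [
    Segment(0.0, 2.5, "spk_1", "turn on the kitchen lights"),
    Segment(2.5, 3.1, "none", event="dog_bark"),
    Segment(3.1, 5.0, "spk_2", "and set a timer for ten minutes"),
    Segment(5.0, 6.0, "spk_1", "thanks"),
]

def speaking_time(segments):
    """Total seconds of speech per speaker, skipping non-speech segments."""
    totals = defaultdict(float)
    for seg in segments:
        if seg.speaker != "none":
            totals[seg.speaker] += seg.end - seg.start
    return dict(totals)

def overlaps(segments):
    """Pairs of consecutive segments whose time ranges overlap."""
    ordered = sorted(segments, key=lambda s: s.start)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b.start < a.end]

print(speaking_time(segments))
print(len(overlaps(segments)))
```

Overlap is not always an error (people do talk over each other), which is why the sketch flags it rather than rejecting it.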

4. Video Annotation

While similar to image annotation, video annotation adds the dimension of time. Instead of labeling objects in a single frame, annotators track them as they move and interact across multiple frames.

This process, often called object tracking, is essential for teaching an AI to understand motion and behavior. It’s the technology behind systems that analyze traffic flow, monitor security footage for suspicious activity, or even track a ball during a sports broadcast.
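Many annotation tools let annotators label an object on a few keyframes and fill in the frames in between. The sketch below shows the simplest version of that idea, linear interpolation of one tracked object's box; the track ID, keyframes, and coordinates are hypothetical, and real tools may use more sophisticated tracking.

```python
def interpolate_track(keyframes):
    """Linearly fill in boxes for every frame between hand-annotated keyframes.

    keyframes maps frame index -> (x_min, y_min, x_max, y_max) for one object.
    """
    if not keyframes:
        return {}
    frames = sorted(keyframes)
    track = {}
    for f0, f1 in zip(frames, frames[1:]):
        b0, b1 = keyframes[f0], keyframes[f1]
        for f in range(f0, f1):
            t = (f - f0) / (f1 - f0)  # 0.0 at f0, approaching 1.0 near f1
            track[f] = tuple(round(a + t * (b - a), 2) for a, b in zip(b0, b1))
    last = frames[-1]
    track[last] = tuple(float(v) for v in keyframes[last])
    return track

# Hypothetical: car with track id 7, labeled by hand on frames 0 and 4 only.
car_7 = {0: (10, 20, 50, 60), 4: (30, 20, 70, 60)}
for frame, box in sorted(interpolate_track(car_7).items()):
    print(frame, box)
```

Linear interpolation works for steady motion; when an object turns, stops, or is occluded, an annotator would add more keyframes.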

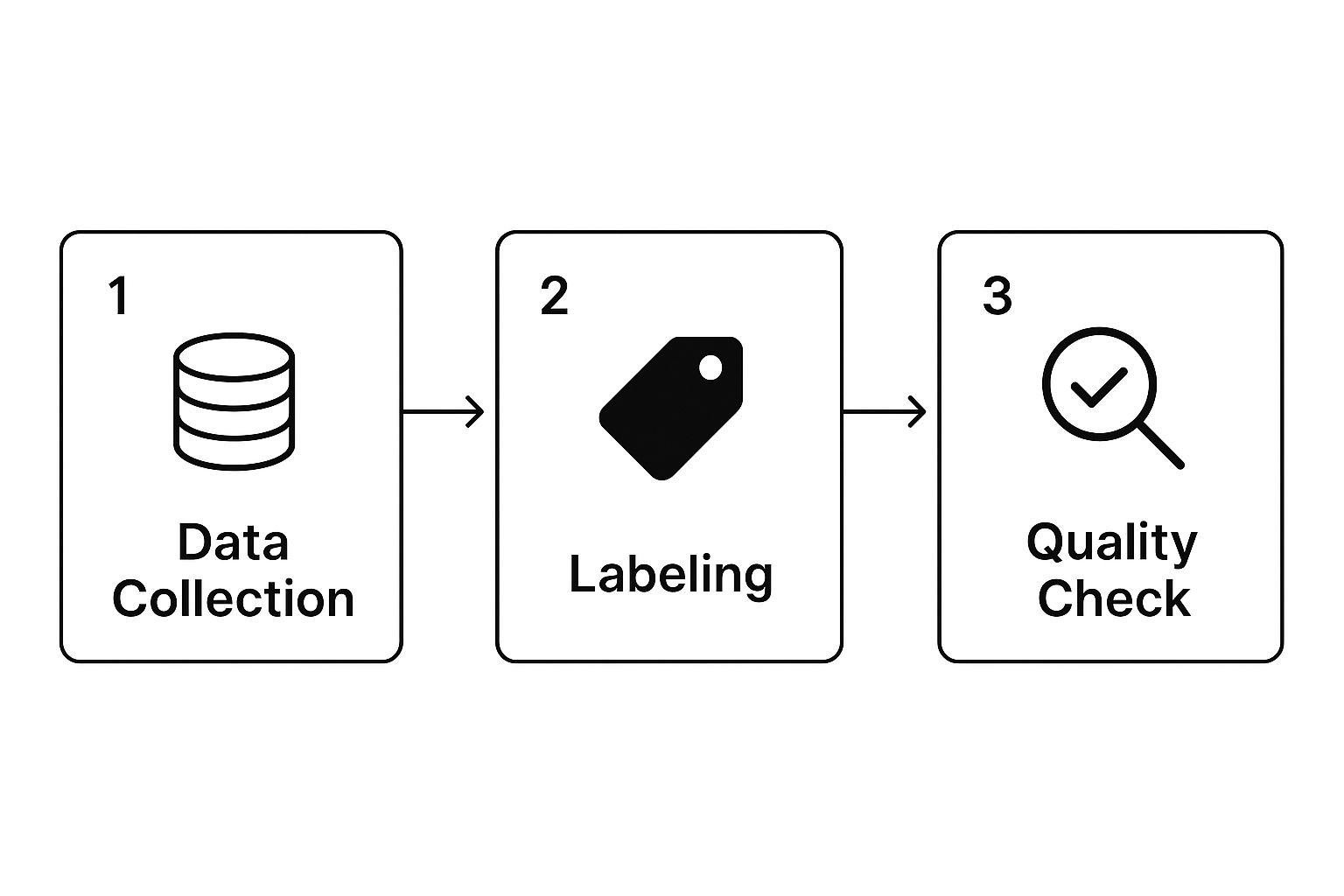

How The Data Annotation Process Actually Works

So, how do you get from a massive folder of raw, unlabeled data to a high-quality dataset that can actually train a smart AI? It’s a carefully managed process. Think of it less like a single task and more like a production line where precision at every stage is what determines the success of your final model.

It all kicks off with gathering the right data and, just as importantly, creating a rock-solid set of labeling guidelines. These rules are your project's bible. They ensure every single person working on the data is on the same page, which is essential for consistency. If you skip this step or rush it, you're setting yourself up for a messy, unreliable dataset down the road.
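Some rules in a guidelines document can be turned into automated checks that catch mistakes before a human reviewer ever sees them. This is a minimal sketch; the allowed labels and the 8-pixel minimum box size are hypothetical rules, not recommendations.

```python
# A hypothetical label schema distilled from written guidelines.
GUIDELINES = {
    "allowed_labels": {"car", "pedestrian", "cyclist", "traffic_sign"},
    "min_box_size_px": 8,  # rule: skip objects smaller than 8 px on either side
}

def validate(annotation, rules=GUIDELINES):
    """Return a list of guideline violations for one box annotation."""
    problems = []
    if annotation["label"] not in rules["allowed_labels"]:
        problems.append(f"unknown label {annotation['label']!r}")
    x_min, y_min, x_max, y_max = annotation["box"]
    if x_max <= x_min or y_max <= y_min:
        problems.append("box has zero or negative size")
    elif min(x_max - x_min, y_max - y_min) < rules["min_box_size_px"]:
        problems.append("box smaller than minimum size")
    return problems

print(validate({"label": "Car", "box": (10, 10, 14, 40)}))
```

Note that the capitalized "Car" is rejected: small inconsistencies like this are exactly what written guidelines exist to prevent.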

The Core Annotation Workflow

With your guidelines in place, the real hands-on work begins. This is where human annotators get to work, using specialized software to apply labels directly to the data. That could mean drawing boxes around every car in a street-view image, carefully outlining a tumor in a medical scan, or transcribing spoken words from an audio clip.

But the labeling itself is only part of the story. The most important phase comes next: quality assurance (QA).

You can’t just label data and call it a day. The QA process is a constant cycle of review and correction. This is where labeled data is double-checked against the guidelines to find mistakes, fix inconsistencies, and clear up any confusion. It’s this back-and-forth that makes the difference between a breakthrough AI and a model that completely misses the mark.
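One common way to quantify that review cycle is to have two annotators label the same items and measure how often they agree. The sketch below implements Cohen's kappa, a widely used agreement score that discounts agreement expected by chance (1.0 is perfect agreement, 0 is chance level). The article doesn't prescribe a specific metric, and the sentiment labels here are made up.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same class independently.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical class throughout
    return (observed - expected) / (1 - expected)

a = ["pos", "pos", "neg", "neg", "neu", "pos", "neg", "pos"]
b = ["pos", "neg", "neg", "neg", "neu", "pos", "neg", "pos"]
print(round(cohens_kappa(a, b), 3))
```

The two annotators agree on 7 of 8 items, but kappa comes out lower than 0.875 because some of that agreement would happen by chance.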

In practice, the workflow runs as a loop: collect data and write guidelines, label, review the labels against the guidelines, correct the errors, and review again until the dataset meets the quality bar.

Quality checks aren't just tacked on at the end. They're a built-in feedback loop that constantly refines the labeling work. To see this in action, a practical guide on how to annotate images for AI training success can help you translate these concepts into a real-world plan.

Ultimately, this methodical approach is what ensures your final dataset isn't just labeled—it's accurately and reliably prepared to train a truly high-performing AI model.

Choosing The Right Data Annotation Tools For The Job

Knowing the process is half the battle; having the right tools is the other. Picking the best data annotation software for your project isn't just a minor detail—it’s a decision that directly shapes your team's efficiency, the accuracy of your labels, and your ability to scale. Think of it as the digital workbench for your annotators, and a good one makes all the difference.

The market for these tools is exploding for a reason. Valued at USD 2.11 billion in 2024, it's expected to hit a staggering USD 12.45 billion by 2033. This massive growth underscores just how essential high-quality labeled data has become for building any kind of serious AI.

Key Factors To Consider

When you start looking at different tools, it's easy to get overwhelmed. The goal isn't to find the single "best" tool, but the best one for your specific project. Start by thinking about your data. Are you labeling images, video, text, audio, or complex 3D point clouds? Each data type needs its own specialized features.

Your project's size and team setup are also major factors.

- Open-Source Tools: For smaller projects, academic research, or teams just dipping their toes in, open-source options like CVAT are fantastic. They provide powerful core features without a hefty price tag.

- Enterprise Platforms: When you're dealing with massive datasets and large teams, commercial platforms are often worth the investment. They come with built-in quality control workflows, better user management, and the peace of mind of dedicated support.

A crucial consideration is workflow optimization. The right tool should not only facilitate labeling but also actively help in reducing manual effort. For those looking to maximize efficiency, exploring advanced active learning techniques can be a game-changer, as they help prioritize which data to label next.
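Uncertainty sampling is one of the simplest active learning strategies: score each unlabeled item by how unsure the current model is, then send the most uncertain items to annotators first. This sketch uses the entropy of the model's predicted class probabilities as the score; the image IDs and probabilities are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy of a predicted class distribution (higher = less sure)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def pick_next_batch(predictions, k):
    """Uncertainty sampling: return the k item ids the model is least sure about."""
    ranked = sorted(predictions, key=lambda item: entropy(predictions[item]), reverse=True)
    return ranked[:k]

# Hypothetical model outputs for unlabeled images: P(car), P(pedestrian), P(other)
predictions = {
    "img_001": [0.98, 0.01, 0.01],  # confident: low labeling priority
    "img_002": [0.40, 0.35, 0.25],  # very uncertain
    "img_003": [0.70, 0.20, 0.10],
    "img_004": [0.34, 0.33, 0.33],  # near-uniform: most uncertain
}
print(pick_next_batch(predictions, k=2))
```

The payoff is that annotator time goes to the examples most likely to improve the model, instead of to images it already handles well.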

Finally, never forget the people doing the work. A tool can have all the features in the world, but if its interface is clunky and confusing, your annotators will be slower and more prone to errors. Always lean towards software with a clean, intuitive user experience to keep quality high.

For particularly complex or large-scale projects, it might even make sense to partner with a specialized vendor. If you're exploring that route, our guide on the top data annotation service companies in India can offer some great starting points.

How Data Annotation Is Changing Industries


Data annotation is where the rubber meets the road for artificial intelligence. It’s the essential process that translates raw, messy data into the structured information that AI models need to learn. Far from being just a technical footnote, this work is the engine driving real-world breakthroughs in nearly every industry you can think of.

Take a high-stakes field like healthcare, where annotation can help save lives. When an AI model analyzes an X-ray or MRI to detect cancer, it's because human annotators have meticulously labeled countless scans, teaching the algorithm to distinguish between healthy tissue and malignant cells. This training can help the AI spot subtle anomalies a radiologist might overlook, paving the way for earlier diagnoses and better patient outcomes.

Driving Innovation and Growth

The automotive industry is another perfect example. The dream of self-driving cars navigating busy city streets is built on a mountain of annotated data. For a car to safely identify a pedestrian, a stop sign, or another vehicle, its AI needs to have learned from millions of hours of driving footage, with frame after frame painstakingly labeled by annotators to teach the car's "brain" how to perceive and react to its environment in real time.

The sheer importance of this work has fueled a booming global market. The data annotation industry is on track to hit USD 8.22 billion by 2028, driven by its critical role in everything from e-commerce and finance to agriculture.

In the world of retail, annotation makes your online shopping experience smoother. When you search for "red running shoes," it's the carefully labeled product images that ensure the website shows you exactly what you’re looking for. This same principle is transforming professional fields, with AI-driven legal document analysis and data extraction workflows automating tasks that once took hundreds of hours.

Even farming is getting smarter. Drones fly over fields capturing images, and annotators label sections showing crop disease, pest infestations, or dehydration. This allows smart farming systems to precisely target water or pesticides only where needed, boosting crop yields and making agriculture more sustainable.

Ultimately, these examples show a clear pattern: high-quality annotation is the foundation for better data-driven decision making everywhere.

Answering Your Top Data Annotation Questions

As you start working with data annotation, you'll naturally have questions. We see the same ones pop up all the time. Think of this as a quick FAQ to clear up some of the most common points of confusion and give you a better sense of how this all works in the real world.

Let's tackle a big one first: Are data annotation and data labeling the same thing? For the most part, yes. In day-to-day conversation, people use them interchangeably. Both refer to the core process of adding meaningful tags to raw data.

However, if you want to get technical, some specialists draw a fine line. They might use "labeling" for simpler tasks, like putting a single tag on an entire image ("car"). "Annotation," on the other hand, often implies more detailed work, like drawing precise outlines around every pedestrian in a video frame.

How Much Do Data Annotation Projects Cost?

This is the million-dollar question, and the honest answer is: it depends. There’s no flat rate for data annotation. The final cost comes down to a few key variables that determine the time and skill needed for the job.

- Data Complexity: There's a world of difference between drawing simple boxes around cars and performing pixel-perfect segmentation on an MRI scan. The more intricate the task, the more it costs.

- Data Volume: This one’s straightforward. A project with 100,000 images will have a much larger price tag than one with only 1,000. More data means more work.

- Required Expertise: Do you need a trained radiologist to identify tumors in X-rays? Or a linguist who understands a specific regional dialect? Tapping into that kind of specialized knowledge costs more than hiring a generalist annotator.

Because of these factors, projects can run anywhere from a few hundred dollars for a small, simple task to hundreds of thousands for a massive, complex undertaking.
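The three cost drivers above can be combined into a rough back-of-the-envelope estimate: volume sets how many items there are, complexity sets how long each one takes, and expertise sets the hourly rate. Every number in this sketch is a placeholder, not a market rate, and the 20% review overhead is an assumption.

```python
def estimate_cost(items, objects_per_item, seconds_per_object,
                  hourly_rate, review_overhead=0.2):
    """Back-of-the-envelope annotation cost.

    review_overhead is the extra fraction of labeling time spent on QA
    (0.2 = 20%). All inputs are placeholders to be replaced with your own
    measured throughput and negotiated rates.
    """
    labeling_hours = items * objects_per_item * seconds_per_object / 3600
    total_hours = labeling_hours * (1 + review_overhead)
    return total_hours * hourly_rate

# Placeholder numbers, not market rates: same volume, two levels of complexity.
simple = estimate_cost(items=1_000, objects_per_item=3, seconds_per_object=6, hourly_rate=10)
detailed = estimate_cost(items=1_000, objects_per_item=3, seconds_per_object=90, hourly_rate=40)
print(f"simple boxes: ${simple:,.0f}   expert segmentation: ${detailed:,.0f}")
```

Because the factors multiply, a task that is 15 times slower and needs annotators billing 4 times as much costs 60 times more at the same volume.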

Does Automation Replace Human Annotators?

Not at all. This is probably the biggest misconception out there. While AI-powered automation has become a massive help in data annotation, it’s not here to replace people. It’s here to make them faster and more effective.

Automated tools are great for doing a first pass on a dataset, but human oversight is still absolutely essential for quality.

Think of automation as a powerful assistant, not a replacement. An AI model might pre-label 80% of your data with decent accuracy. A human expert then steps in to review that work, fix the inevitable mistakes, and handle all the tricky edge cases the machine struggled with.

This collaborative process is called human-in-the-loop (HITL). It perfectly blends the raw speed of AI with the nuanced judgment and accuracy of a human expert. This is how you get high-quality labeled data at scale.
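A minimal sketch of that loop: the model's pre-labels are split by confidence, low-confidence items go to human annotators, and their decisions are merged back in. The 0.9 threshold, item IDs, and corrections are hypothetical; in practice teams tune the threshold and often spot-check auto-accepted labels too.

```python
def route_prelabels(prelabels, threshold=0.9):
    """Split (item_id, label, confidence) pre-labels into two queues."""
    accepted, needs_review = [], []
    for item_id, label, confidence in prelabels:
        queue = accepted if confidence >= threshold else needs_review
        queue.append((item_id, label))
    return accepted, needs_review

# Hypothetical model output: (item_id, predicted label, confidence)
prelabels = [
    ("img_01", "car", 0.97),
    ("img_02", "pedestrian", 0.62),  # ambiguous: a human should check
    ("img_03", "car", 0.91),
    ("img_04", "cyclist", 0.45),     # likely an edge case
]
accepted, needs_review = route_prelabels(prelabels)

# Hypothetical human decisions for the review queue (confirmed or fixed).
human_labels = {"img_02": "pedestrian", "img_04": "motorcyclist"}
final_labels = {**dict(accepted), **human_labels}
print(final_labels)
```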

Ready to power your AI initiatives with high-quality, accurately labeled data? Zilo AI provides comprehensive data annotation and skilled manpower solutions to accelerate your projects. From intricate image segmentation to large-scale text analysis, our expert teams ensure your models are built on a foundation of excellence. Get your AI-ready data now.