Think of AI training data services as the expert coaches for your AI model. They take raw, messy data—like jumbled images, unfiltered text, or raw audio files—and meticulously prepare it so your AI can actually learn from it.

This prep work is the absolute bedrock of a successful AI project. Without it, you're just feeding your model junk.

So, What Exactly Are AI Training Data Services?

Imagine you’re teaching a self-driving car to spot a stop sign. If you only show it blurry, poorly lit photos, it's going to fail. The problem isn't the car's programming; it's the terrible study material you gave it. This is exactly where AI training data services come in.

They are the bridge between chaotic, real-world information and the clean, structured datasets that intelligent systems need to thrive. An AI model learns by finding patterns, and the quality of those patterns depends entirely on the data it's shown.

These services aren't just doing a simple cleanup. They're running a rigorous, multi-stage process to turn raw data into a high-value asset.

The Data Preparation Workflow

Getting data ready for an AI is a journey. Expert services guide the data through several critical steps to make sure it's perfect for an algorithm to digest.

- Data Collection: It all starts with gathering the right information from different places to create a solid, diverse dataset.

- Data Cleaning: Next, they hunt down and remove errors, duplicate files, and anything irrelevant that could throw the AI off track.

- Data Annotation and Labeling: This is the most hands-on part. Human experts add descriptive tags to the data. This could mean drawing boxes around every car in a picture or tagging customer reviews as "positive" or "negative."

- Data Validation: Finally, a thorough quality check is done to make sure every label is accurate and the entire dataset is consistent.
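To make the cleaning and validation steps concrete, here is a minimal Python sketch. It assumes a tiny text dataset of (text, label) pairs and a hypothetical allowed-label set. Real pipelines add near-duplicate detection, relevance filtering, and much more, but the shape is the same: drop what shouldn't be there, then check what's left against the labeling rules.

```python
from dataclasses import dataclass

# Hypothetical label set for a sentiment project; your own guidelines define the real one.
ALLOWED_LABELS = {"positive", "negative", "neutral"}


@dataclass(frozen=True)
class Example:
    text: str
    label: str


def clean(records: list[Example]) -> list[Example]:
    """Drop empty texts and exact duplicates (compared after trimming and lowercasing)."""
    seen: set[str] = set()
    kept: list[Example] = []
    for rec in records:
        key = rec.text.strip().lower()
        if not key or key in seen:
            continue  # empty or already seen: skip it
        seen.add(key)
        kept.append(rec)
    return kept


def validate(records: list[Example]) -> list[str]:
    """Return a list of problems; an empty list means every label is allowed."""
    return [
        f"record {i}: unknown label {rec.label!r}"
        for i, rec in enumerate(records)
        if rec.label not in ALLOWED_LABELS
    ]


raw = [
    Example("Great product!", "positive"),
    Example("great product!  ", "positive"),  # duplicate once normalized
    Example("   ", "neutral"),                 # empty after trimming
    Example("Arrived broken.", "negatve"),     # typo in the label
]
cleaned = clean(raw)
problems = validate(cleaned)
print(len(cleaned), problems)
```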

To really get why this matters, you need to understand the basics of how algorithms learn from experience. If you want to go a bit deeper on this, exploring the machine learning fundamentals is a great next step.

You can think of AI training data services as the quality control team for your AI's education. They make sure every single piece of information it learns from is accurate, relevant, and consistent.

Ultimately, these services are providing the essential building blocks for any serious AI initiative. Without properly prepared data, even the most powerful algorithms will fall flat. Investing in professional data preparation is really an investment in the long-term success of your entire AI system.

Why High-Quality Data Is Your AI's Secret Weapon

The old programming mantra "garbage in, garbage out" has never been more relevant, or more high-stakes, than in the world of AI. When you're building a new model, the data you feed it isn't just one ingredient for success; it's the one that matters most. Even the most sophisticated algorithm will churn out useless, unreliable, or even harmful results if it's fed low-quality data.

Think of it like raising a child prodigy. You can have the most brilliant mind in the world, but if their library is full of inaccurate books and biased stories, their understanding of reality will be completely skewed. High-quality data is the verified, fact-checked, and comprehensive library your AI needs to learn properly.

This isn't just a theoretical problem. We've seen the real-world consequences time and again. Biased datasets have produced AI hiring tools that discriminate against entire groups of people. Flawed image recognition data has caused self-driving cars to misidentify critical road signs. These aren't just bugs in the code; they're direct reflections of poor-quality source material.

What Makes AI Training Data "High-Quality"?

So, what exactly turns raw information into the high-octane fuel your model needs? It all comes down to a few core attributes that professional AI training data services are obsessed with getting right. These aren't just buzzwords; they are the absolute pillars of a successful model.

- Accuracy: Every single label and annotation has to be correct. If you're building an e-commerce AI, a product tagged as a "red t-shirt" must actually be a red t-shirt, not a burgundy polo. Even small inaccuracies teach the AI the wrong lessons.

- Consistency: The rules you use for labeling must be applied the same way across the entire dataset. If one person labels an object a "sofa" and another calls it a "couch," the AI gets confused. Consistency ensures the model learns clear, unambiguous patterns.

- Diversity and Representativeness: The data must mirror the real world your AI will operate in. A facial recognition model trained only on images of white men will fail spectacularly when it encounters anyone else. This lack of diversity is a primary driver of AI bias.

- Relevance: The data has to be directly related to the problem you're trying to solve. You wouldn't train a medical diagnostic AI on stock market data, would you? The information must be targeted and specific to the AI's intended job.
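Consistency and representativeness can both be checked mechanically. The sketch below assumes a hypothetical synonym map taken from a labeling guideline: it folds variants like "couch" into one canonical label, rejects labels the guideline doesn't cover, and reports each class's share of the dataset so a badly under-represented class stands out.

```python
from collections import Counter

# Hypothetical guideline: every accepted variant maps to exactly one canonical label.
CANONICAL = {
    "sofa": "sofa", "couch": "sofa", "settee": "sofa",
    "chair": "chair", "armchair": "chair",
}


def normalize(labels):
    """Map each raw label to its canonical form; unknown labels raise so they get reviewed."""
    out = []
    for label in labels:
        key = label.strip().lower()
        if key not in CANONICAL:
            raise ValueError(f"label {label!r} is not in the guideline")
        out.append(CANONICAL[key])
    return out


def class_shares(labels):
    """Fraction of the dataset each class makes up, to spot under-represented classes."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


raw = ["Sofa", "couch", "settee", "chair", "sofa", "Couch", "armchair", "sofa"]
labels = normalize(raw)
shares = class_shares(labels)
print(shares)
```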

Getting these principles right from the very beginning is the difference between success and failure. This is especially vital for new companies, a topic we explore in more depth in our guide on why data annotation is critical for AI startups in 2025.

The Business Impact of Data Quality

Investing in high-quality data isn't just about avoiding technical glitches; it's a core business strategy that directly impacts your bottom line and reputation. Bad data leads to models that make expensive mistakes, which quickly erodes customer trust and creates operational nightmares.

A study by IBM revealed that poor data quality costs the U.S. economy an estimated $3.1 trillion every single year. For individual businesses, this translates to wasted money, failed projects, and missed opportunities.

On the flip side, a model trained on a superior dataset becomes a massive competitive advantage. It performs more accurately, adapts to new situations more effectively, and delivers a reliable experience that customers will trust and come back for.

This is where AI training data services act as your quality control team. They implement tough validation processes, use skilled human annotators, and deploy advanced tools to make sure every single data point meets the highest standards. This meticulous work is what separates a world-class AI from a failed experiment, ensuring your model’s "education" is based on ground truth.

The Different Flavors of AI Training Data

Not all data is created equal. Think of it like cooking: a chef needs specific ingredients for a specific dish. In the same way, an AI developer needs the right "flavor" of data to build a model that actually works. The whole world of AI training data services is built around getting these diverse ingredients ready for the machine to "eat."

Each type—from images and videos to text and audio—needs its own special kind of preparation, or annotation. Understanding what makes them different is the first step to planning your AI project and, just as importantly, finding the right partner to help you.

Visual Data: Images and Video

If you want an AI to "see," you need visual data. This is the bedrock of computer vision, the technology that lets self-driving cars navigate roads and helps doctors spot diseases in medical scans. But getting this data ready is a painstaking, detail-oriented job.

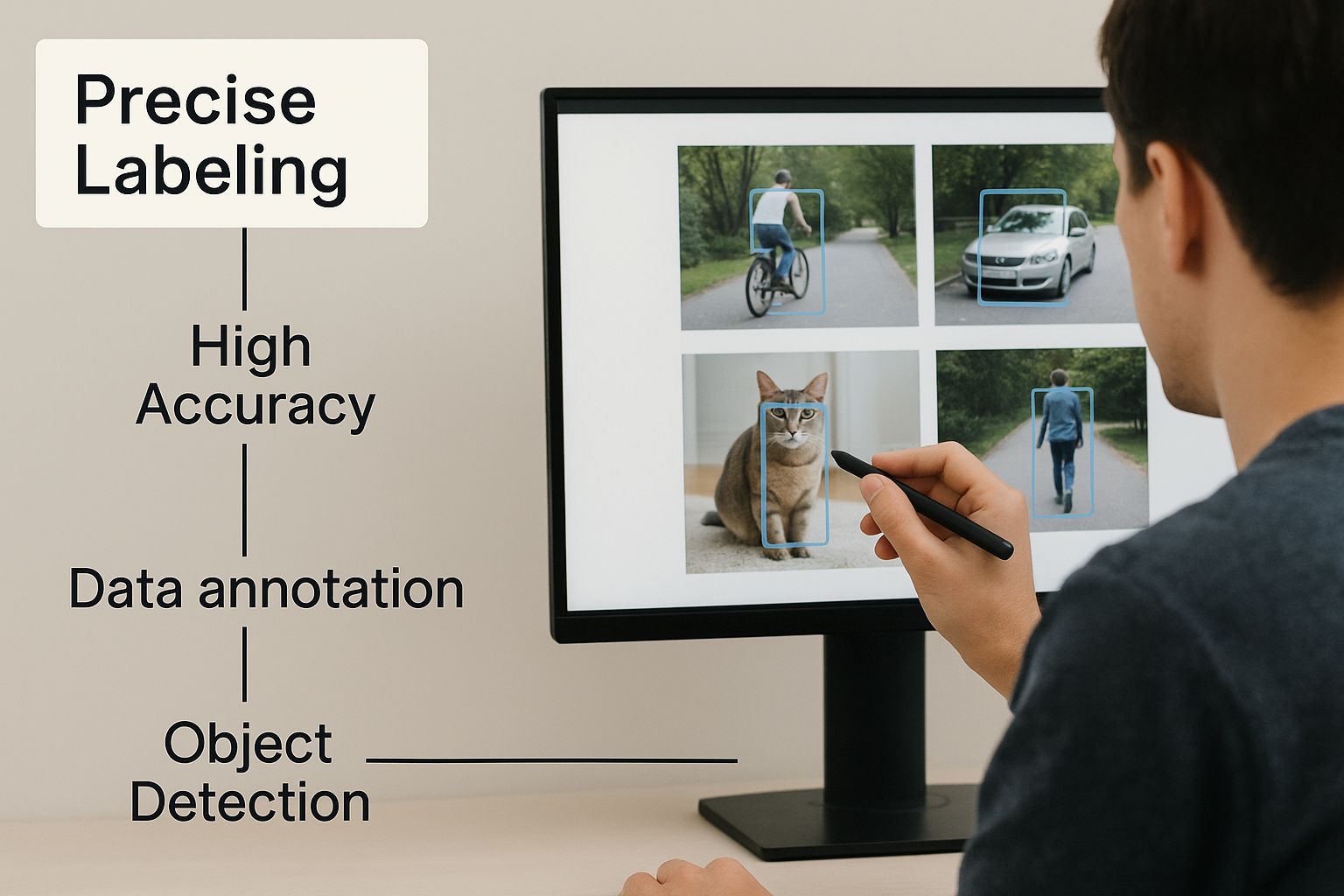

The picture above shows a professional annotator doing this kind of precise labeling work. It drives home the point that human expertise is what turns raw pictures into smart data that can power real-world computer vision.

To make an image or video make sense to a machine, human annotators have to add context using a few core techniques:

- Bounding Boxes: This is the most common starting point. Annotators simply draw a rectangle around objects of interest. For a self-driving car's AI, this would mean drawing boxes around every single car, person, and stop sign so the AI learns to "see" them.

- Polygonal Segmentation: Sometimes a simple box just doesn't cut it, especially for irregularly shaped objects. Here, annotators trace the object's exact outline by placing a series of points around its edge, forming a tight polygon. This level of precision is crucial for things like an AI that needs to identify the exact shape of a cancerous tumor in a medical image.

- Keypoint Annotation: This technique is all about marking specific points on an object. Imagine an AI designed to analyze an athlete's form. It would be trained on images where key joints—like shoulders, elbows, and knees—are marked with individual dots.
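In data terms, these three techniques produce different shapes: a box is two corner points, a polygon is an ordered list of vertices, and keypoints are named coordinates. The Python sketch below uses a hypothetical record layout (pixel coordinates, origin at the top-left) and shows two calculations that often come up in quality checks: intersection over union (IoU), a standard measure of how closely two boxes drawn for the same object overlap, and polygon area via the shoelace formula.

```python
# Hypothetical annotation records for the three techniques above.
# Coordinates are in pixels, with (0, 0) at the image's top-left corner.
box_a = (10, 10, 50, 50)  # bounding box as (x_min, y_min, x_max, y_max)
box_b = (30, 30, 70, 70)  # a second annotator's box for the same object
polygon = [(0, 0), (40, 0), (40, 30), (0, 30)]  # polygon vertices, in order
keypoints = {"shoulder": (120, 80), "elbow": (140, 120), "knee": (130, 210)}


def box_iou(a, b):
    """Intersection over union of two axis-aligned boxes (1.0 means identical)."""
    ix = max(0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


def polygon_area(points):
    """Area enclosed by a simple (non-self-intersecting) polygon, via the shoelace formula."""
    n = len(points)
    twice_area = sum(
        points[i][0] * points[(i + 1) % n][1] - points[(i + 1) % n][0] * points[i][1]
        for i in range(n)
    )
    return abs(twice_area) / 2


print(round(box_iou(box_a, box_b), 3), polygon_area(polygon), len(keypoints))
```

A low IoU between two annotators' boxes for the same object is a quick signal that the labeling instructions need tightening.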

Text Data: Words and Sentences

Text is everywhere, making it one of the most plentiful data sources we have. It’s the fuel for natural language processing (NLP), the magic behind chatbots, translation apps, and spam filters. But to a computer, raw text is just a meaningless jumble of letters and numbers. Annotation is what gives it meaning.

Think of text annotation as adding emotional and contextual metadata to language. Without it, an AI can read words but can't understand the intent, emotion, or relationships behind them.

Some of the most common text annotation services include:

- Sentiment Analysis: This is where annotators label text—like a product review or a tweet—as positive, negative, or neutral. It’s how an AI learns to understand public opinion or spot an unhappy customer. A brand could use this to monitor its reputation in real time.

- Named Entity Recognition (NER): This involves spotting and tagging key bits of information, like people's names, company names, locations, or dates. Law firms use this to have an AI scan thousands of documents to find relevant names and places, saving an incredible amount of time.

- Text Classification: Here, annotators assign predefined labels to whole chunks of text. A customer support system, for example, could use a model trained on this data to automatically sort incoming emails into the right buckets, like "Billing," "Tech Support," or "Sales Inquiry."
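Here is one hypothetical way a single customer message might look once all three kinds of text annotation have been applied, with entity spans stored as character offsets. The check function is an assumed, minimal validation step: it confirms that each offset actually points at the tagged words and that every label comes from the project's schema.

```python
# A hypothetical annotated record combining all three annotation types above.
record = {
    "text": "Jane Doe called Acme Corp in Berlin about a billing error.",
    "sentiment": "negative",  # sentiment analysis
    "category": "Billing",    # text classification
    "entities": [             # NER spans as [start, end) character offsets
        {"start": 0, "end": 8, "type": "PERSON", "surface": "Jane Doe"},
        {"start": 16, "end": 25, "type": "ORG", "surface": "Acme Corp"},
        {"start": 29, "end": 35, "type": "LOCATION", "surface": "Berlin"},
    ],
}

# Hypothetical project schema; a real one comes from your labeling guidelines.
SENTIMENTS = {"positive", "negative", "neutral"}
CATEGORIES = {"Billing", "Tech Support", "Sales Inquiry"}
ENTITY_TYPES = {"PERSON", "ORG", "LOCATION", "DATE"}


def check_record(rec):
    """List schema problems; an empty list means the record is internally consistent."""
    problems = []
    if rec["sentiment"] not in SENTIMENTS:
        problems.append(f"unknown sentiment {rec['sentiment']!r}")
    if rec["category"] not in CATEGORIES:
        problems.append(f"unknown category {rec['category']!r}")
    for ent in rec["entities"]:
        span = rec["text"][ent["start"]:ent["end"]]
        if span != ent["surface"]:
            problems.append(
                f"offsets {ent['start']}-{ent['end']} give {span!r}, expected {ent['surface']!r}"
            )
        if ent["type"] not in ENTITY_TYPES:
            problems.append(f"unknown entity type {ent['type']!r}")
    return problems


print(check_record(record))
```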

Audio Data: Sounds and Speech

From the voice assistants in our homes and phones to services that automatically transcribe meetings, audio data is a huge part of how we interact with technology. Preparing this data means turning spoken words and other sounds into a structured format an AI can process.

The main task here is audio transcription, where a person listens to an audio file and types out exactly what was said. But it usually goes a step further. Annotators often add timestamps to sync the text with the audio and use speaker diarization to label who is speaking and when. This detailed work is what allows speech recognition models to work reliably, even in noisy rooms with multiple people talking.
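A transcribed, diarized clip is essentially a list of timed segments. The sketch below assumes a simple hypothetical format (start and end in seconds, a speaker tag, and the text) and runs two basic checks a reviewer might automate: every segment must end after it starts, and one speaker's segments must not overlap each other. Overlap between different speakers is allowed, since people do talk over one another.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float  # seconds from the beginning of the recording
    end: float
    speaker: str  # diarization label, e.g. "SPEAKER_1"
    text: str     # verbatim transcription of this stretch


# Hypothetical annotated clip: transcription plus timestamps plus speaker labels.
segments = [
    Segment(0.0, 4.2, "SPEAKER_1", "Thanks for joining, let's start with the budget."),
    Segment(4.0, 7.5, "SPEAKER_2", "Sure, I have the numbers here."),  # brief cross-talk
    Segment(7.5, 12.0, "SPEAKER_1", "Great, go ahead."),
]


def check_segments(segs):
    """Flag segments that don't end after they start, or that overlap the same speaker's previous one."""
    problems = []
    last_end = {}  # speaker -> end time of their most recent segment
    for seg in sorted(segs, key=lambda s: s.start):
        if seg.end <= seg.start:
            problems.append(f"{seg.speaker} at {seg.start}s: end is not after start")
        if seg.start < last_end.get(seg.speaker, 0.0):
            problems.append(f"{seg.speaker} at {seg.start}s: overlaps their own previous segment")
        last_end[seg.speaker] = seg.end
    return problems


def talk_time(segs):
    """Total seconds attributed to each speaker."""
    totals = {}
    for seg in segs:
        totals[seg.speaker] = totals.get(seg.speaker, 0.0) + (seg.end - seg.start)
    return totals


print(check_segments(segments), talk_time(segments))
```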

Choosing the right partner for this kind of detailed work is key. If you're looking for support in this area, exploring a list of the top 10 data annotation service companies in India for 2025 can provide valuable insights into potential vendors.

To put it all together, here’s a quick look at how these different data types, their uses, and annotation methods line up.

Common AI Data Types and Their Applications

| Data Type | Common Use Cases | Annotation Techniques |
|---|---|---|
| Images | Object detection, facial recognition, medical imaging | Bounding boxes, polygonal segmentation, semantic segmentation |
| Video | Autonomous vehicles, action recognition, security surveillance | Object tracking, video classification, keypoint annotation |
| Text | Sentiment analysis, chatbots, language translation, spam filters | Named Entity Recognition (NER), text classification, intent analysis |
| Audio | Voice assistants, speech-to-text transcription, speaker identification | Transcription, speaker diarization, sound event detection |
| Sensor Data | IoT predictive maintenance, activity recognition, weather forecasting | Time-series labeling, event tagging, anomaly detection |

This table shows just how specialized data preparation needs to be. The right approach always depends on what you’re trying to achieve with your AI model.

What's Behind the AI Training Data Boom?

The incredible explosion we're seeing in artificial intelligence didn't just happen on its own. Behind every single smart assistant that answers your questions, every self-driving car navigating a complex street, and every medical AI that helps diagnose diseases, there's a mountain of carefully prepared data. This simple fact has sparked a massive demand for professional AI training data services, turning a niche technical job into a huge global industry.

This isn't just a quick trend. It's a fundamental change in how businesses operate. Companies in every field imaginable—from medicine and banking to car manufacturing and retail—are waking up to a critical reality: you can't build a powerful, reliable AI model without a top-tier dataset. The algorithm gets all the attention, but the data it learns from is the real star of the show.

Because of this, the market for preparing that data is growing at an incredible speed.

The Numbers Tell the Story

The growth we're seeing isn't just gradual; it's explosive. Companies are funneling billions into data preparation because they've learned that the quality of their data directly impacts the performance of their AI. This spending spree shows a real shift in thinking—high-quality data is no longer a luxury but an essential investment for any company that wants to compete.

Just look at the numbers. The global market for AI training data hit an estimated USD 2.60 billion in 2024, and forecasts predict it will reach USD 3.19 billion in 2025. Looking further out, the market is on track to rocket to USD 8.60 billion by 2030, fueled by a compound annual growth rate (CAGR) of nearly 22%. You can dive deeper into these market dynamics to see the full picture of this expansion.
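The "nearly 22%" figure can be sanity-checked with the standard CAGR formula, (end / start) ** (1 / years) - 1. The sketch below simply plugs in the forecast figures quoted above (USD 2.60 billion in 2024, USD 3.19 billion in 2025, USD 8.60 billion in 2030); both periods come out at roughly 22% a year, so the numbers are internally consistent.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: the constant yearly rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Forecast figures quoted in the text, in USD billions.
rate_2024_2030 = cagr(2.60, 8.60, 2030 - 2024)
rate_2025_2030 = cagr(3.19, 8.60, 2030 - 2025)
print(f"{rate_2024_2030:.1%}  {rate_2025_2030:.1%}")
```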

This isn't just about big numbers on a chart. It tells a story about how deeply businesses now rely on these specialized services.

Why is This Happening Now?

A few key things are driving this incredible demand. The biggest factor is just how complex and massive modern AI projects have become. To build a cutting-edge computer vision system or a natural language model that truly understands human nuance, you need millions of individual data points, and every single one has to be labeled with precision.

Trying to handle this internally is a beast of a task. It requires:

- Niche Skills: Data annotation isn't just clicking boxes. It’s a specialized skill that demands training and a sharp eye for detail.

- A Lot of People: Labeling huge datasets can easily require a team of hundreds, or even thousands, of human annotators.

- Serious Infrastructure: You need robust systems to manage quality control, keep data secure, and organize the workflow for a project of that scale.

For most companies, trying to build all of that from the ground up is completely impractical and wildly expensive. It just makes more sense to partner with a dedicated AI training data service provider.

This simple reality has created a vibrant ecosystem where companies can hand off the foundational work of preparing their data. This frees up their internal teams to focus on their core mission: designing and deploying game-changing AI models. It’s this symbiotic relationship that’s pushing the whole market forward, giving companies of all sizes a way to get the high-quality data they need to make their AI goals a reality.

How to Choose the Right AI Data Partner

Picking the right partner for your AI training data services is one of the most critical decisions you'll make on your entire AI journey. It's not just about finding the cheapest quote; it's about finding a strategic ally who can protect your data, deliver impeccable quality, and scale with you.

The right partner becomes a true extension of your team. The wrong one can sink your project with sloppy data, security nightmares, and blown deadlines. So, how do you tell them apart?

You have to look beyond the price tag and really dig into how they operate. Treat the process like you're hiring a key team member—focus on their expertise, their processes, and whether you can trust them for the long haul. A great partner doesn’t just hand over a dataset; they give you confidence. This methodical approach is a core part of effective, data-driven decision making for your business.

Evaluating Data Security and Compliance

Before you even think about data quality, your first conversation has to be about security. You're about to hand over potentially sensitive information, and a data breach could be a disaster for your company's reputation and bottom line. This part is completely non-negotiable.

Start your vetting process by asking for hard proof of their security protocols and compliance certifications.

Look for these established international standards:

- ISO/IEC 27001 Certification: This is the global benchmark for information security management. Certification means an independent certification body has audited the company's system for managing and protecting sensitive data.

- GDPR and CCPA Compliance: If your data involves people in the European Union or California, your partner absolutely must be compliant with these strict privacy laws.

- Secure Infrastructure: Ask them how your data will be handled. Do they use encrypted platforms? How do they control who gets to see the data?

If a potential partner gets vague or can't provide clear, documented answers to these questions, walk away. Security isn't a feature; it's the foundation.

Assessing Quality Assurance and Workflow

Once you’re confident your data will be safe, it’s time to focus on what matters most for your model: data quality. Inaccurate or inconsistent labels will poison your AI, no matter how brilliant your algorithm is. You need to know exactly how a potential partner ensures every piece of data is labeled correctly.

Ask them to walk you through their entire workflow, step by step. A professional service will have a multi-stage process for quality control, not just a quick check at the end.

Here are some key questions to ask:

- How do you train your annotators on our specific project rules?

- What tools do you use to track annotator accuracy and performance?

- Do you use a consensus method, where several people label the same data to cross-check for accuracy?

- Who reviews the work? Is there a separate, dedicated QA team?

- Can we see a sample of your work before we sign anything?
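The consensus method mentioned in these questions is simple to express. In this minimal sketch, each item is labeled independently by several annotators, the majority label wins, and any item where agreement falls below a threshold (an assumed 2/3 here) is routed to a QA reviewer instead of being accepted. The annotation data is hypothetical.

```python
from collections import Counter


def consensus(votes, min_agreement=2 / 3):
    """Return (majority label, share of annotators who chose it, accepted?)."""
    counts = Counter(votes)
    # most_common breaks ties by first occurrence; with the default threshold a tie
    # also means agreement of 50% or less, so tied items go to review anyway.
    label, top = counts.most_common(1)[0]
    agreement = top / len(votes)
    return label, agreement, agreement >= min_agreement


# Hypothetical labels from three annotators per image.
annotations = {
    "img_001": ["cat", "cat", "cat"],
    "img_002": ["cat", "dog", "cat"],
    "img_003": ["cat", "dog", "fox"],
}

needs_review = []
for item_id, votes in annotations.items():
    label, agreement, accepted = consensus(votes)
    print(f"{item_id}: {label} ({agreement:.0%} agreement){'' if accepted else ' -> send to QA'}")
    if not accepted:
        needs_review.append(item_id)
```

Production teams often go further, for example weighting votes by each annotator's track record or measuring chance-corrected agreement such as Cohen's kappa, but majority vote with a review threshold is the core idea.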

A transparent partner will be eager to show you their entire quality assurance pipeline. They’ll explain how they catch mistakes, provide feedback to their annotators, and maintain consistency across millions of data points. This is the difference between professional AI training data services and just hiring someone from a freelance site.

Confirming Scalability and Industry Expertise

Finally, think about the future. Your first project might be a small pilot, but what happens when your AI model takes off? Your data needs could explode overnight. You need a partner who can handle that growth without breaking a sweat or letting quality slip.

Ask them about their capacity. How quickly can they add more people to your project if you suddenly need to double your data volume? Do they have a bench of trained, vetted annotators ready to go?

Just as important is their experience in your specific field. A vendor that has labeled thousands of medical images will understand the subtle details in a way a generalist provider never could. Ask for case studies or references from companies in your industry, whether it's retail, automotive, or finance. Their past experience means they'll get up to speed faster and make fewer mistakes, because they already speak your language.

The Future of AI Training Data Services

The world of AI never sits still, and the way we prepare data is evolving right alongside it. As AI models grow more sophisticated, the old ways of getting data ready just won't cut it. The future of AI training data services isn't just about labeling more data faster; it’s about making the entire pipeline smarter, more efficient, and fundamentally better.

We're starting to see a few key trends that are completely reshaping data preparation. The industry is moving away from brute-force manual labeling toward a more strategic, tech-driven approach. Getting a handle on these changes is key to keeping your AI strategy ahead of the curve.

The Rise of Synthetic Data

One of the toughest bottlenecks in AI has always been getting enough high-quality, varied data. This is especially true in privacy-sensitive fields like healthcare. Synthetic data is emerging as a powerful answer to this problem. Instead of relying purely on real-world information, we can now use AI to generate brand-new, artificial data that mirrors the statistical patterns of a real dataset.

This solves two massive problems at once:

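- Privacy: Because the records are generated rather than collected from real people, synthetic data need not contain any personally identifiable information. This makes it much safer to use and share without running afoul of regulations like GDPR, provided the generator hasn't simply memorized and reproduced real records.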

- Filling Gaps: Real-world data often lacks examples of rare but critical events—think of a self-driving car encountering a bizarre obstacle on the road. Synthetic data lets us create these edge cases on demand, leading to far more robust and reliable AI systems.
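As a deliberately simple illustration of "mirroring the statistical patterns" of real data, the sketch below fits a mean and standard deviation to a hypothetical numeric column and samples new values from a normal distribution with those parameters. It also generates rare, extreme values on purpose, the kind of edge case real data seldom contains. Real synthetic-data tools use far richer generative models (such as GANs, diffusion models, or simulators) that also capture relationships between columns; this toy only matches one column's average and spread.

```python
import random
import statistics


def fit(column):
    """Summarize a real numeric column by its mean and sample standard deviation."""
    return statistics.mean(column), statistics.stdev(column)


def synthesize(mean, stdev, n, seed=0):
    """Draw n brand-new values from a normal distribution with the fitted parameters."""
    rng = random.Random(seed)
    return [rng.gauss(mean, stdev) for _ in range(n)]


def edge_cases(mean, stdev, n, seed=1):
    """Deliberately generate rare, extreme values 3 to 5 standard deviations above the mean."""
    rng = random.Random(seed)
    return [rng.uniform(mean + 3 * stdev, mean + 5 * stdev) for _ in range(n)]


# Hypothetical real measurements (e.g. resting heart rates); in practice these stay private.
real = [72, 68, 75, 80, 66, 71, 77, 69, 74, 70]
mu, sigma = fit(real)
synthetic = synthesize(mu, sigma, n=5000)
rare = edge_cases(mu, sigma, n=50)
print(round(statistics.mean(synthetic), 1), round(statistics.stdev(synthetic), 1), len(rare))
```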

AI-Assisted Labeling

There’s a certain irony in needing enormous amounts of human labor just to teach a machine. The next wave of innovation is all about using AI to help with the labeling itself. New tools can now pre-label data, automatically suggesting annotations that a human expert simply needs to verify and adjust.

This "human-in-the-loop" model is a game-changer. It dramatically accelerates workflows and frees up human annotators from mind-numbingly repetitive tasks. Instead of drawing every single bounding box from scratch, an expert can guide the AI, focusing their valuable time on the tricky, nuanced cases where their judgment truly matters. It's a win-win for both speed and quality.
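A common way to organize human-in-the-loop labeling is to route each model suggestion by its confidence score. The sketch below assumes a hypothetical pre-labeling model that returns a label and a score between 0 and 1, plus an assumed cut-off of 0.9: confident suggestions go to a quick verify-and-adjust queue, and uncertain ones go to an expert for full review. In practice the cut-off would be tuned on a pilot batch.

```python
from dataclasses import dataclass


@dataclass
class PreLabel:
    item_id: str
    label: str
    confidence: float  # the model's score for its suggestion, between 0 and 1


def route(prelabels, threshold=0.9):
    """Split model suggestions into a quick-verify queue and a full-review queue."""
    quick_check, full_review = [], []
    for p in prelabels:
        # Confident suggestions only need a human to confirm or adjust them;
        # uncertain ones get an expert's full attention.
        (quick_check if p.confidence >= threshold else full_review).append(p)
    return quick_check, full_review


# Hypothetical output from a pre-labeling model.
suggestions = [
    PreLabel("img_01", "car", 0.98),
    PreLabel("img_02", "truck", 0.61),
    PreLabel("img_03", "pedestrian", 0.93),
    PreLabel("img_04", "cyclist", 0.40),
]
quick, full = route(suggestions)
print([p.item_id for p in quick], [p.item_id for p in full])
```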

The future of data preparation is a partnership between human intelligence and machine efficiency. The focus is shifting toward a 'data-centric' approach, where improving the dataset itself is recognized as being more impactful than just tweaking the algorithm.

This fundamental shift highlights just how valuable and complex modern data preparation has become. Market forecasts tell the same story, projecting the AI training dataset industry to rocket from USD 2.6 billion in 2024 to an incredible USD 18.9 billion by 2034. Right now, North America is at the forefront, holding over 35.5% of the global market share thanks to its rapid adoption of new tech.

For a closer look at what's driving this explosive expansion, you can dive into a deeper analysis of this booming AI training data market. And if you're looking to get hands-on, mastering data preprocessing techniques for machine learning is a non-negotiable first step.

Answering Your Questions About AI Training Data

Diving into any new technology brings a wave of practical questions, and AI is certainly no exception. When you get down to the bedrock of any AI system—the training data—most businesses bump into the same concerns around scope, cost, and timelines. Getting straight answers is the first step in building an AI strategy that actually works.

One of the first things leaders want to know is, "How much data do we really need?" The truth is, there's no magic number. The right amount is completely tied to the complexity of the job you want the AI to do. For instance, a model that just needs to tell cats from dogs might get by with thousands of examples. But a sophisticated medical AI designed to spot tumors in scans could need millions of incredibly detailed images to be reliable.

A good AI training data services partner won't just give you a number; they'll help you figure out a reasonable starting point and a plan to scale up. They know it's all about finding that sweet spot between having enough data for solid accuracy and not blowing your budget.

What Are the Key Cost and Time Factors?

Once you have a ballpark idea of data volume, the conversation naturally turns to budget and deadlines. "What's this going to cost, and how long will it take?" The answers to these questions hinge on a few critical variables.

The biggest things that shape your cost and timeline are:

- Data Volume: Simply put, the more data you need to label, the more it will cost. This is usually the largest factor.

- Annotation Complexity: There’s a world of difference between drawing simple boxes around cars and performing pixel-perfect segmentation on a medical MRI. The more detailed the work, the more time and money it takes.

- Required Expertise: If you're labeling everyday objects, general annotators work great. But if you're working with legal contracts or medical charts, you need subject matter experts, which comes at a premium.

A straightforward image classification project with 10,000 images might take a couple of weeks to complete. In contrast, a complex video annotation project could easily stretch over several months. Your data partner must be transparent about these factors from the start.
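To see how volume and complexity interact, here is a back-of-the-envelope estimator. Every input is a hypothetical placeholder (seconds per item, team size, review overhead, hourly rate), not a quote or benchmark. It also counts labeling and review time only; guideline writing, pilot rounds, and rework come on top, which is one reason real projects take longer than the raw arithmetic suggests.

```python
import math


def estimate(items, seconds_per_item, annotators, hours_per_day=8.0,
             review_overhead=0.25, hourly_rate=None):
    """Rough labeling estimate: (total person-hours, working days, cost or None).

    review_overhead is the assumed extra fraction of time spent on QA review
    (0.25 means 25%); it is a placeholder, not an industry figure.
    """
    labeling_hours = items * seconds_per_item / 3600
    total_hours = labeling_hours * (1 + review_overhead)
    days = math.ceil(total_hours / (annotators * hours_per_day))
    cost = total_hours * hourly_rate if hourly_rate is not None else None
    return total_hours, days, cost


# Hypothetical inputs: quick image classification vs. detailed video annotation.
simple = estimate(10_000, seconds_per_item=6, annotators=2, hourly_rate=10.0)
complex_ = estimate(2_000, seconds_per_item=900, annotators=5, hourly_rate=25.0)
print(simple, complex_)
```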

Thinking about the investment also means seeing where the market is headed. The AI training dataset market isn't just growing; it's exploding. Figures vary by source, but one estimate has it climbing from USD 1.9 billion in 2022 to USD 2.7 billion in 2024, with a projection of USD 11.7 billion by 2032. You can learn more about these AI training dataset statistics to grasp the full scale of this trend.

This tells us that putting money into high-quality data isn't just an expense—it's a core strategic investment for anyone serious about building a competitive edge.

Ready to build a powerful AI model with flawless data? Zilo AI provides expert data annotation and manpower solutions to ensure your project succeeds from day one. Get started with Zilo AI today.