At its core, AI data annotation is the human-guided process of labeling raw data—like images, text, or audio—so that a machine learning model can understand it. It's the secret sauce that turns a chaotic jumble of information into a structured lesson for an AI.

Without this step, an AI has no way to check its work or learn from its mistakes. It’s like giving a student a final exam without ever providing the textbook or an answer key.

What Is AI Data Annotation, Really?

Think about teaching a toddler what a “dog” is. You wouldn’t just say the word over and over. You’d show them pictures—a Golden Retriever here, a Poodle there, maybe a German Shepherd—and point to each one saying, “That’s a dog.”

That simple act of pointing and naming is exactly what AI data annotation accomplishes for machines. It’s the foundational, and often painstaking, process of adding specific, meaningful labels to raw data. This is what transforms messy, unstructured information into something a machine learning (ML) model can actually use to train.

Even the most sophisticated algorithm is useless without high-quality labeled data. It’s like a brilliant student trying to learn from a smudged, unreadable textbook. The potential is there, but the raw material just isn't good enough to learn from.

Turning Data into Intelligence

So, what does this look like in practice? It usually involves a human annotator using specialized software to identify and tag important features within a dataset.

Here are a few real-world examples:

- For an image: An annotator might draw a tight bounding box around every car.

- For a block of text: They could tag each sentence as having a "positive" or "negative" sentiment.

- For an audio clip: They would transcribe the spoken words into text, maybe even identifying different speakers.

Each of these labels creates a piece of ground truth—a concrete, correct example the AI uses to build its understanding of the world. This meticulous work is the backbone of countless AI systems we use daily, from the "portrait mode" on your smartphone to advanced medical imaging tools. For startups breaking into the AI space, getting this right is non-negotiable. You can find out more by reading why data annotation is critical for AI startups in 2025.

This foundational work is what turns vast, chaotic datasets into the smart, structured fuel required for everything from self-driving cars to the recommendation engines that power modern e-commerce.

The Exploding Demand for Labeled Data

The value of high-quality data annotation is obvious when you look at the market. The industry for data annotation tools was valued at around USD 2.33 billion in 2024 and is expected to hit USD 2.99 billion by 2025.

That's a staggering compound annual growth rate (CAGR) of 28.3%. This boom is directly fueled by the insatiable appetite for high-quality training data needed for today's complex AI models. To grasp the sheer scale, consider this: by 2025, experts estimate that 463 exabytes of data will be created every single day, underscoring the massive and growing need for efficient annotation.

Exploring the Core AI Data Annotation Techniques

So, we know AI data annotation is all about adding labels to data. But what does that actually look like in practice?

Think of it like this: you wouldn't use a paintbrush to write a letter or a pen to paint a mural. The tool has to fit the job. It's the same with annotation. The specific technique you use depends entirely on what you want the AI to learn.

Let's dive into the most common methods used for images, text, and audio. You'll quickly see that these aren't just abstract concepts—they're the hands-on work that powers the AI features we interact with every day.

Image and Video Annotation

This is probably the easiest type of annotation to visualize. When an AI needs to "see" and make sense of the world, it learns from visual data that humans have carefully labeled.

Here are the go-to techniques:

- Bounding Boxes: This is the bread and butter of object detection. An annotator simply draws a rectangle around a specific object. It’s the method used to teach an autonomous vehicle to spot every car, pedestrian, and traffic light in its path.

- Polygonal Segmentation: Objects in the real world rarely fit into perfect rectangles. For things with irregular shapes, like a person's silhouette or a specific type of plant, annotators use polygons, clicking around the object’s precise outline for a much tighter fit.

- Semantic Segmentation: This one is incredibly detailed. Instead of just drawing a box around an object, this technique classifies every single pixel in the image. Every pixel gets a label, like "road," "sky," "building," or "tree." Have you ever used the "portrait mode" on your smartphone? That's semantic segmentation at work, separating the person (pixel by pixel) from the background.

- Keypoint Annotation: This technique is all about marking specific points of interest on an object. It’s crucial for things like human pose estimation, where an AI tracks the location of joints—shoulders, elbows, knees—to understand body language and movement.

These methods essentially transform a simple picture into a rich, detailed map that an AI can use to perceive and interpret its surroundings with surprising accuracy.

Text Annotation

We are swimming in text data—from customer reviews and emails to medical reports and legal contracts. Text annotation adds the crucial layer of context that allows an AI to grasp meaning, intent, and connections within the words.

Common tasks include:

- Sentiment Analysis: This is about labeling a chunk of text as positive, negative, or neutral. Businesses rely on this to get a real-time pulse on customer feedback from thousands of reviews or social media comments.

- Named Entity Recognition (NER): Here, the goal is to find and categorize key pieces of information, like names of people, companies, locations, dates, or dollar amounts. This is how AI systems can pull structured data from a messy block of text, like extracting a patient’s name and diagnosis from a doctor’s notes.

- Text Classification: This involves assigning a whole document or a piece of text to a predefined category. It's used to automatically sort customer support tickets into buckets like "Billing Question" or "Technical Issue," getting them to the right person much faster.

By tagging text with these labels, we give language models the framework they need to not just read words, but to comprehend intent, extract facts, and organize information effectively.

Audio Annotation

For any AI that needs to listen—think voice assistants or automated transcription services—audio annotation is the critical first step. It's the process of converting sounds and speech into a structured format that a machine can actually analyze.

The key techniques are:

- Audio Transcription: The most basic task is simply converting spoken words into written text. This is the foundation for tools like Apple's Siri and Amazon's Alexa, not to mention the automatic captions you see on videos.

- Speaker Diarization: This answers the question: "Who spoke, and when?" Annotators go through an audio file and label the different speakers. It’s essential for creating accurate transcripts of meetings or multi-person customer service calls.

- Sound Event Detection: Not all important sounds are speech. This technique involves identifying and timestamping non-speech events—like glass breaking, a dog barking, or a siren wailing—for things like home security systems or environmental sound monitoring.

To pull this all together, here’s a quick summary of how these techniques map to different data types and real-world uses.

Common AI Data Annotation Techniques and Use Cases

| Annotation Technique | Data Type | Primary Use Case Example |

|---|---|---|

| Bounding Box | Image/Video | Training a self-driving car to detect pedestrians and other vehicles. |

| Semantic Segmentation | Image/Video | Enabling "portrait mode" in smartphone cameras by separating people from the background. |

| Sentiment Analysis | Text | Analyzing thousands of customer reviews to gauge overall brand perception. |

| Named Entity Recognition | Text | Extracting patient names and conditions from unstructured medical notes. |

| Audio Transcription | Audio | Powering voice assistants like Siri and providing automatic video captions. |

| Speaker Diarization | Audio | Transcribing a business meeting with multiple speakers accurately. |

Ultimately, each of these core AI data annotation techniques is chosen for a specific reason. Whether it’s drawing boxes on a screen, highlighting parts of a sentence, or noting who spoke when, this detailed human effort is what breathes intelligence into artificial intelligence.

The Data Annotation Project Lifecycle

You can't just throw a pile of raw data at a machine learning model and hope for the best. Building a high-quality dataset is a craft, and successful AI data annotation follows a well-defined, cyclical process that turns that raw, unstructured information into a powerful asset.

Thinking of it as a lifecycle isn't just a metaphor; it's a practical framework. It helps teams manage complexity, maintain quality, and establish a repeatable workflow for consistent results. This structured approach is what prevents the common pitfalls that derail AI projects, like inconsistent labels or confusing instructions. Following this roadmap is how you turn a messy collection of files into the high-octane fuel your AI model needs.

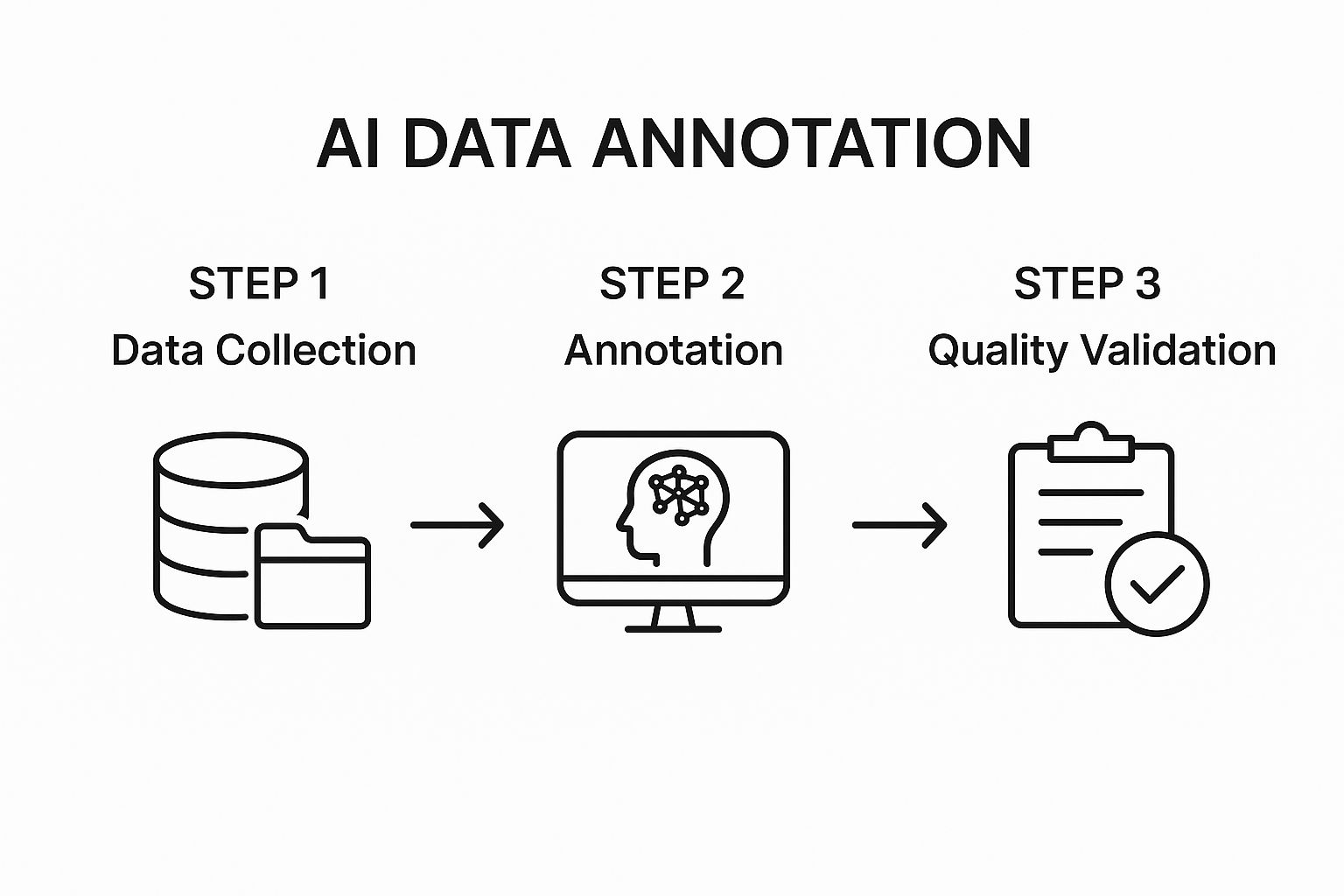

This infographic breaks down the fundamental stages.

As you can see, the journey moves from gathering the initial data all the way through to that crucial final quality check. It really drives home that proper annotation is a structured, multi-stage effort.

Stage 1: Data Collection and Preparation

The lifecycle actually starts long before anyone applies a single label. First, you have to collect the raw data. This could be anything—thousands of street-view images for a self-driving car, countless hours of customer service calls for a sentiment analysis tool, or a mountain of medical scans for a diagnostic AI.

Once you have it, the data needs to be prepared. This means cleaning out the junk—removing irrelevant or low-quality files—and standardizing formats so everything is consistent. Skipping this step is like trying to build a house on a shaky foundation; any problems you ignore here will only get bigger and more expensive to fix later on.

Stage 2: Annotation and Labeling

This is the heart of the AI data annotation process, where the real labeling work happens. But before a single click, the most important task is to create crystal-clear annotation guidelines. These guidelines are the project’s official rulebook, defining exactly what gets labeled and how.

A great set of guidelines is the single most important factor for ensuring label consistency. It must include precise definitions, visual examples of right and wrong, and clear instructions for handling those tricky "edge cases."

With solid guidelines in hand, the annotation can finally begin. This work typically falls into a few buckets:

- Human Annotation: A team of human experts manually labels the data. This approach delivers the highest accuracy but is also the most time-consuming and expensive.

- Automated Annotation: An existing AI model does a first pass at labeling the data, which is then reviewed and corrected by people. This "human-in-the-loop" (HITL) system can be a game-changer for productivity. Considering that up to 80% of the time on AI projects is spent just preparing data, this kind of automation is key.

- Hybrid Approach: A mix of both methods. You might start with manual labeling to train an initial "auto-label" model, which then assists with the rest of the dataset.

Stage 3: Quality Assurance and Iteration

Let's be realistic: no annotation project is perfect on the first try. The final stage is all about quality assurance (QA) and getting better with every pass. This isn't just a quick check at the end; it's a continuous process baked into the workflow.

The QA process usually involves having a senior annotator or project manager review a sample of the labeled data. They hunt for errors, inconsistencies, and any deviations from the guidelines. Often, this review process uncovers gray areas or confusing points in the original guidelines, which can then be clarified for the whole team.

This creates a powerful feedback loop:

- Annotate: The team labels a batch of data.

- Review: The QA team spots errors or inconsistencies.

- Refine: The guidelines are updated to fix the ambiguity.

- Iterate: The team goes back to annotating, now armed with clearer instructions and improved accuracy.

This iterative cycle is what truly separates good annotation from great annotation. It’s how you ensure the final dataset isn't just labeled, but labeled correctly and consistently—giving your AI model the best possible shot at success.

How Data Annotation Powers Industry Breakthroughs

While it's easy to get lost in the technical details of AI data annotation, the real magic is what it makes possible in the world around us. This meticulous, behind-the-scenes work isn't just a dry academic exercise. It’s the engine that fuels real progress across nearly every major industry.

From the doctor's office to the supermarket, high-quality labeled data is the essential ingredient that turns ambitious AI ideas into practical, world-changing applications. Let’s look at a few powerful examples of how annotation is directly enabling the intelligent systems that are reshaping our lives.

Revolutionizing Healthcare with Labeled Medical Data

In medicine, where precision can literally be a matter of life and death, AI offers a powerful new set of tools. Diagnostic AI models, trained on millions of carefully annotated medical images, are helping doctors spot diseases earlier and more accurately than ever before.

Imagine trying to find a tiny tumor in a detailed CT or MRI scan. Radiologists and other medical experts painstakingly annotate these images, often using techniques like semantic segmentation to highlight every single pixel of a potential malignancy. This process creates an incredibly rich dataset that teaches an AI to recognize the subtle, often invisible patterns associated with a disease.

The result is AI systems that can:

- Flag abnormalities on scans for a human expert to review, acting as a crucial second set of eyes.

- Analyze cellular structures in pathology slides to help grade the severity of cancers.

- Predict disease progression by identifying minute changes in tissue over time.

This isn't about replacing doctors. It's about giving them a tireless digital assistant that can sift through enormous amounts of visual data, empowering them to make faster, more informed decisions. Every one of these life-saving advancements is built on a foundation of expert medical annotation.

Powering the Future of Autonomous Vehicles

The dream of a self-driving car navigating a chaotic city street is completely dependent on its ability to see and understand the world around it. This perception is built on a constant flood of data from cameras, LiDAR, and radar—and all of it needs to be flawlessly annotated.

Every object a self-driving car "sees"—from other vehicles and pedestrians to traffic cones and lane markings—has been defined for it through millions of hours of human-led data annotation.

Annotators use a combination of techniques, like 3D cuboids and video object tracking, to label every relevant entity in a vehicle's environment. This teaches the car's AI not just to identify objects but to predict their behavior. That cyclist up ahead? The AI knows to give them a wide berth because its training data included countless examples of annotated cyclists in different scenarios. This comprehensive approach to making decisions is key. Learn more about improving your team's choices in our guide to data-driven decision-making.

Transforming Retail and Agriculture

Beyond high-stakes fields like medicine and autonomous driving, data annotation is also quietly changing industries like retail and agriculture in profound ways.

In e-commerce, those strangely accurate product recommendations are powered by AI models trained on annotated data. Every time you click on an item, that interaction helps label the product with certain attributes, like "casual," "summer," or "formal wear." This tagged data feeds the recommendation engines that create a personalized shopping experience for millions.

Meanwhile, in agriculture, drones with specialized cameras fly over fields, capturing images that are later annotated to identify signs of crop stress, disease, or pest infestation. This allows farmers to apply water, fertilizer, or pesticides with surgical precision, boosting crop yields while reducing waste and environmental impact.

This widespread adoption is fueling incredible market growth. In 2024, the data annotation tools market hit an estimated USD 2.11 billion. It’s projected to grow at a compound annual growth rate (CAGR) of 20.71% through 2033, a surge driven by industries worldwide demanding high-quality datasets for their AI projects.

Even everyday tools we take for granted depend on this process. For example, powerful applications like speech-to-text technology simply wouldn't exist without meticulously labeled audio datasets, where human transcribers have converted millions of hours of spoken words into text to teach an AI how to understand human language.

Choosing Your Annotation Tools and Strategy

Getting your AI data annotation project off the ground involves a critical choice that will ripple through your timeline, budget, and the quality of your final model. You're faced with a market full of options, from building everything yourself to partnering with a team of specialists. Making the right call starts with being honest about your project's unique demands.

Think of it like deciding whether to cook a huge holiday dinner from scratch or hire a professional caterer. Doing it yourself gives you absolute control over every ingredient and recipe, but it also requires a ton of time, skill, and the right kitchen gear. Hiring a pro saves you the work and brings expertise to the table, but it comes at a different cost and means you're not personally stirring every pot.

In-House Team vs. Outsourced Provider

The first big question is whether to keep your annotation work inside your company or hand it off to an expert.

An in-house team puts you in the driver's seat, giving you complete control over data security and quality. This is often the go-to approach for companies handling highly sensitive or proprietary information—think healthcare records or financial data, where privacy is paramount.

But don't underestimate the commitment. Building and managing an in-house team is a major undertaking. You’re responsible for hiring, training, and managing people, not to mention all the overhead costs. For a startup or any team with a tight budget, this can be a huge hurdle.

On the other side of the coin, outsourcing to a specialized data annotation provider gives you instant access to a skilled workforce and proven quality control systems. This frees up your core team to focus on what they do best—building the AI model—instead of getting bogged down in managing a small army of labelers. To get a feel for what's out there, you can explore data annotation services and see how they might fit your project.

Key Insight: The choice between in-house and outsourcing isn't just about money. It’s a strategic decision that balances control, security, scalability, and how fast you can get your model to market.

Selecting the Right Annotation Platform

Once you've settled on your workforce strategy, you need to pick your tools. The annotation platform is the digital workbench where your labelers will do their job, so its features are absolutely crucial for both efficiency and accuracy.

Here are the must-have features you should demand from any tool you consider:

- Robust Quality Control (QC) Features: The platform needs to support review workflows, consensus scoring (where multiple annotators’ labels are compared for agreement), and simple feedback loops.

- Data Security Protocols: Look for solid security, like role-based access control, secure data handling, and compliance with standards like GDPR or HIPAA if they apply to your industry.

- Scalability: Your tool must be able to grow with your project, handling bigger datasets and more annotators without slowing down.

- Support for Your Data Type: Make sure the platform is built to handle your specific data, whether it's images, video, text, audio, or even complex 3D sensor data.

Ultimately, the best strategy is the one that fits your reality. A scrappy startup might kick things off with an open-source tool and a small team of freelance annotators. In contrast, a large corporation launching a critical computer vision system will likely partner with a full-service provider that offers a secure platform and a fully managed workforce. If you're looking for potential partners, our list of the https://ziloservices.com/blogs/top-10-data-annotation-service-companies-in-india-2025/ is a great place to start your research.

Best Practices for Flawless Data Annotation

In AI, the old saying “garbage in, garbage out” is more than a cliché—it’s a law. You can have the most sophisticated model in the world, but its performance will always be capped by the quality of the data it learns from. Getting AI data annotation right isn't just another project expense; it's the single best way to make sure your model actually works when it matters.

Following a few proven best practices is what really separates successful AI projects from the ones that end up stuck in endless cycles of expensive rework. This means thinking of annotation as a core discipline, not a one-off task. It's about building a solid foundation of clear rules, rigorous quality checks, and a commitment to getting better over time. Investing upfront in this process saves a massive amount of time and money down the road.

Create Ironclad Annotation Guidelines

The number one reason for bad labels? Ambiguity. Before a single image gets touched, you absolutely must develop a detailed set of annotation guidelines. This document becomes the single source of truth for your entire team, making sure everyone is on the same page and applying labels the exact same way.

Think of it as the constitution for your project. Vague instructions like "label all cars" just won't cut it. Your guidelines need to be incredibly specific and, most importantly, visual.

Your guidelines should always include:

- Precise Definitions: Clearly define every single label. What qualifies as a "pedestrian"? Does it count if they're partially hidden by a lamppost? Spell it out.

- Visual Examples: Include plenty of clear pictures showing exactly how to label objects correctly. Just as important, show examples of common mistakes and how to avoid them.

- Edge Case Instructions: You have to tackle the grey areas from the start. How should your team handle blurry images, objects cut off at the edge of the frame, or things that rarely appear?

Well-defined guidelines are the bedrock of quality. In fact, research shows that even top-tier benchmark datasets can have label error rates of 3.4% or more. These errors often creep in because the instructions for handling tricky edge cases weren't clear enough.

Implement a Multi-Stage Quality Workflow

Putting all your faith in one person to get everything perfect is a recipe for disaster. For any serious annotation project, a solid quality assurance (QA) workflow isn't optional—it's essential. This process builds checks and balances directly into your labeling pipeline, catching mistakes early before they have a chance to poison your entire dataset.

A simple yet powerful multi-stage QA process often looks something like this:

- Initial Annotation: A primary annotator labels a batch of data based on the guidelines.

- Peer or Expert Review: A second, often more senior, annotator reviews a sample of the work. They're looking for accuracy, consistency, and whether the primary annotator stuck to the rules.

- Consensus Scoring: For the most critical tasks, you can have several people label the same piece of data without seeing each other's work. The final label is decided by a majority vote, a great way to filter out individual bias or simple human error.

This layered approach means multiple sets of eyes are on the data, which dramatically cuts down on the number of bad labels that sneak into your final training set.

Establish a Continuous Feedback Loop

Your annotation guidelines shouldn't be carved in stone. They need to be living documents that evolve with your project. As your QA process gets going, you’ll inevitably find new edge cases or realize some of the original instructions were confusing. This is where a tight feedback loop becomes so important.

When a reviewer catches a mistake, they shouldn't just fix it and forget it. That feedback needs to get back to the original annotator and the project manager. This turns a single error into a learning opportunity for the whole team and a chance to make the guidelines even clearer for everyone. This cycle of label, review, and refine is the engine that drives continuous improvement, pushing your final dataset closer and closer to perfection.

Your Questions About AI Data Annotation Answered

When teams first dip their toes into AI data annotation, the same set of questions almost always pops up. Let's tackle them head-on, so you can move forward with a clear plan and avoid those early, expensive stumbles.

One of the biggest questions is always about the price tag. The truth is, there's no single answer. The cost of data annotation can swing wildly based on a few key things.

Think of it like this: a simple task, like basic image classification, might only set you back a few cents per image. But for something incredibly complex, like 3D sensor fusion annotation for a self-driving car, you could be looking at hundreds of dollars for a single, detailed scene. It all comes down to the expertise required.

Ultimately, your final cost is a balancing act between the complexity of the task, the sheer volume of data you need processed, and the level of accuracy your AI model absolutely must have.

Can AI Help with Annotation Itself?

Absolutely, and it’s becoming the go-to method for a reason. This approach, often called semi-automated or AI-assisted annotation, is a game-changer for efficiency.

Here’s how it typically works: Humans start by carefully labeling a small, initial set of data. This "starter set" is then used to train a preliminary AI model.

That model then takes a first pass at labeling the rest of your massive dataset, what we call "pre-labeling." Human annotators then circle back to review, tweak, and approve the AI's work. This human-in-the-loop (HITL) setup is worlds faster than doing everything by hand from the start. You get the raw speed of a machine combined with the nuanced judgment only a human can provide.

You’ll hear the terms 'data annotation' and 'data labeling' used almost interchangeably. While some purists might argue 'annotation' is for complex tasks like video segmentation and 'labeling' for simple classification, in the real world, they both mean the same thing: getting your data ready for an AI model.

This hybrid approach transforms a grueling manual effort into a much more efficient review process, letting you scale up big projects without compromising on quality.

At Zilo AI, we provide both the skilled human workforce and the expert data annotation services you need to fuel your AI projects. Whether it's image, text, or audio labeling, we deliver high-quality, scalable solutions to get you to your goal faster. See how we can power your next breakthrough.