AI data annotation services are a cornerstone of modern artificial intelligence. In simple terms, they are the professional services that label raw data—like images, text, and audio—so that machine learning models can actually make sense of it. Think of it as creating a detailed, structured textbook for an AI. Without it, raw information is just noise; with it, that noise becomes invaluable training material.

What Exactly Are AI Data Annotation Services?

Let’s use a simple analogy. Imagine you're teaching a toddler what a "car" is. You wouldn't just say the word over and over. You'd point to cars on the street, show them toy cars, and flip through picture books, pointing out their wheels, doors, and windows. Each time you point and name a feature, you're labeling data for a tiny human brain.

AI data annotation services do this exact same thing, but for machines and at an incredible scale. These services provide the essential, human-powered effort needed to meticulously label, tag, or categorize data, which allows AI and machine learning (ML) models to learn how to make accurate predictions on their own.

An AI model without properly labeled data is like a brilliant student locked in an empty room—all potential, but no knowledge to act on.

The Core Purpose of Annotation

At its heart, data annotation is all about adding context. A raw photograph of a busy city street is just a meaningless collection of pixels to a computer. It can't distinguish a person from a lamppost. An annotation service changes that by identifying and labeling every object that matters.

For example, in that street photo:

- Cars might be identified with bounding boxes.

- Pedestrians could be segmented out, pixel by pixel.

- Traffic lights would be classified by their color and status (red, yellow, or green).
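To make the "answer key" idea concrete, here is a minimal Python sketch of what one annotated street photo might look like as structured data. It also includes a basic check a reviewer could run on the record. The schema (`Box`, `StreetPhotoAnnotation`, the `state` field and the mask path) is a hypothetical illustration, not any particular annotation tool's export format.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Box:
    label: str                   # e.g. "car" or "traffic_light"
    x: float                     # left edge in pixels
    y: float                     # top edge in pixels
    w: float                     # width in pixels
    h: float                     # height in pixels
    state: Optional[str] = None  # only used for traffic lights

@dataclass
class StreetPhotoAnnotation:
    image_id: str
    width: int
    height: int
    boxes: List[Box] = field(default_factory=list)
    pedestrian_mask: str = ""    # hypothetical path to a per-pixel mask file

LIGHT_STATES = {"red", "yellow", "green"}

def validate(ann):
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    for b in ann.boxes:
        if b.w <= 0 or b.h <= 0:
            problems.append(f"{b.label}: empty box")
        elif b.x < 0 or b.y < 0 or b.x + b.w > ann.width or b.y + b.h > ann.height:
            problems.append(f"{b.label}: box outside image")
        if b.label == "traffic_light" and b.state not in LIGHT_STATES:
            problems.append(f"{b.label}: bad state {b.state!r}")
    return problems

ann = StreetPhotoAnnotation(
    image_id="street_0001.jpg", width=1920, height=1080,
    boxes=[
        Box("car", 100, 600, 320, 180),
        Box("traffic_light", 900, 150, 40, 110, state="red"),
        Box("traffic_light", 1900, 150, 40, 110, state="blue"),  # deliberately wrong
    ],
    pedestrian_mask="masks/street_0001.png",
)
print(validate(ann))
```

The third box is deliberately wrong in two ways: it runs past the right edge, and it uses an invalid state. `validate` therefore reports two problems. A real pipeline would usually run checks like this automatically before labels reach human review.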

This process turns a chaotic, unstructured image into a structured "answer key." The AI model then studies this answer key to learn what to look for in the future. The better the labels, the smarter the AI. For a primer on the fundamental concepts and uses of these services, see this guide to AI annotation services.

This painstaking work is the secret sauce behind so many of the AI systems we rely on daily, from the voice assistant on your smartphone to the algorithms that help guide self-driving cars.

To quickly summarize what these services entail, here’s a simple breakdown of their core components.

Quick Overview of AI Data Annotation Services

This table provides a high-level summary of the core components of AI data annotation services, breaking down the purpose, key inputs, and primary outputs.

| Component | Description |
|---|---|
| Purpose | To add contextual labels to raw, unstructured data, making it understandable and useful for training machine learning models. |
| Inputs | Raw data such as images, videos, audio clips, text documents, or sensor data (e.g., LiDAR). |
| Outputs | Structured, labeled data where objects or attributes are identified, classified, or tagged according to project specifications. |

In essence, these services take messy, real-world information and transform it into the organized, high-quality fuel that powers intelligent systems.

Why This Human-Led Effort Is So Important

Even with today's advanced automation, human intelligence is still indispensable in the annotation process. Why? Because people bring nuance, context, and the ability to handle ambiguity—all things that algorithms still find challenging. This is why high-quality data annotation is the bedrock of any successful AI model.

The quality of your annotated data directly dictates how well your model will perform in the real world. In many ways, the labeled data defines the boundaries of what an AI system can and cannot learn.

It’s no surprise, then, that the demand for these services is exploding. Estimates put the global data annotation tools market at roughly USD 2 to 2.9 billion in 2024, with a projected compound annual growth rate of over 20%. This reflects an intense, industry-wide need for expertly labeled data.

For any company entering the AI space, understanding this process isn't just a technical footnote—it's a core strategic priority. As our guide explains, it's a key reason why data annotation is critical for AI startups in 2025. Ultimately, when you invest in quality data annotation, you're really investing in the accuracy, safety, and reliability of your final AI product.

Exploring the Core Types of Data Annotation

Just as a mechanic has specialized tools for different parts of a car, AI data annotation services use specific techniques for different kinds of data. You can't label an image the same way you label a customer review, and the method you choose directly impacts what your AI model can learn.

At a high level, the work falls into three main buckets: visual data (images and video), text, and audio. Understanding the differences is key, because the right annotation technique is what turns raw, meaningless data into a powerful training asset for your machine learning project. Let's break down what this looks like in the real world.

Visual Data Annotation for Computer Vision

This is probably the type of annotation people are most familiar with. It’s the magic behind self-driving cars, medical imaging analysis, and automated checkout systems. In short, it’s how we teach machines to “see” and understand the visual world.

A couple of common techniques you’ll see are:

- Bounding Boxes: This is the most straightforward approach. An annotator simply draws a rectangle around an object of interest. Think of an e-commerce company that needs to count its inventory. It can use a model trained on images where every product in a warehouse photo is enclosed in a bounding box.

- Semantic Segmentation: This is much more granular. Instead of just a box, every single pixel in an image gets a label, like "road," "sky," "person," or "car." This pixel-perfect detail is what allows a self-driving car’s AI to know the precise edges of the road, separating it from the sidewalk or a patch of grass.
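Both techniques come down to simple data structures. The sketch below shows two things:

- Bounding boxes being turned into an inventory count.
- A tiny semantic segmentation mask in which every pixel carries a class ID.

The class names and coordinates are made up. Real masks are full-resolution arrays, usually stored as image files, but the idea is the same.

```python
from collections import Counter

# Hypothetical bounding-box labels for one warehouse photo:
# (class_name, x_min, y_min, x_max, y_max) in pixel coordinates.
warehouse_boxes = [
    ("box_small", 10, 20, 60, 70),
    ("box_small", 70, 20, 120, 70),
    ("box_large", 10, 100, 150, 220),
]

# Counting inventory is just counting boxes per class.
inventory = Counter(label for label, *_coords in warehouse_boxes)

# Hypothetical 3x6 semantic segmentation mask: one class ID per pixel.
CLASS_NAMES = {0: "road", 1: "sidewalk", 2: "car"}
mask = [
    [1, 1, 0, 0, 0, 1],
    [1, 0, 0, 2, 2, 1],
    [1, 0, 0, 2, 2, 1],
]

# Every pixel is labeled, so the per-class counts add up to the image size.
pixel_counts = Counter(CLASS_NAMES[class_id] for row in mask for class_id in row)

print(dict(inventory))     # box counts per product type
print(dict(pixel_counts))  # pixel area per class
```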

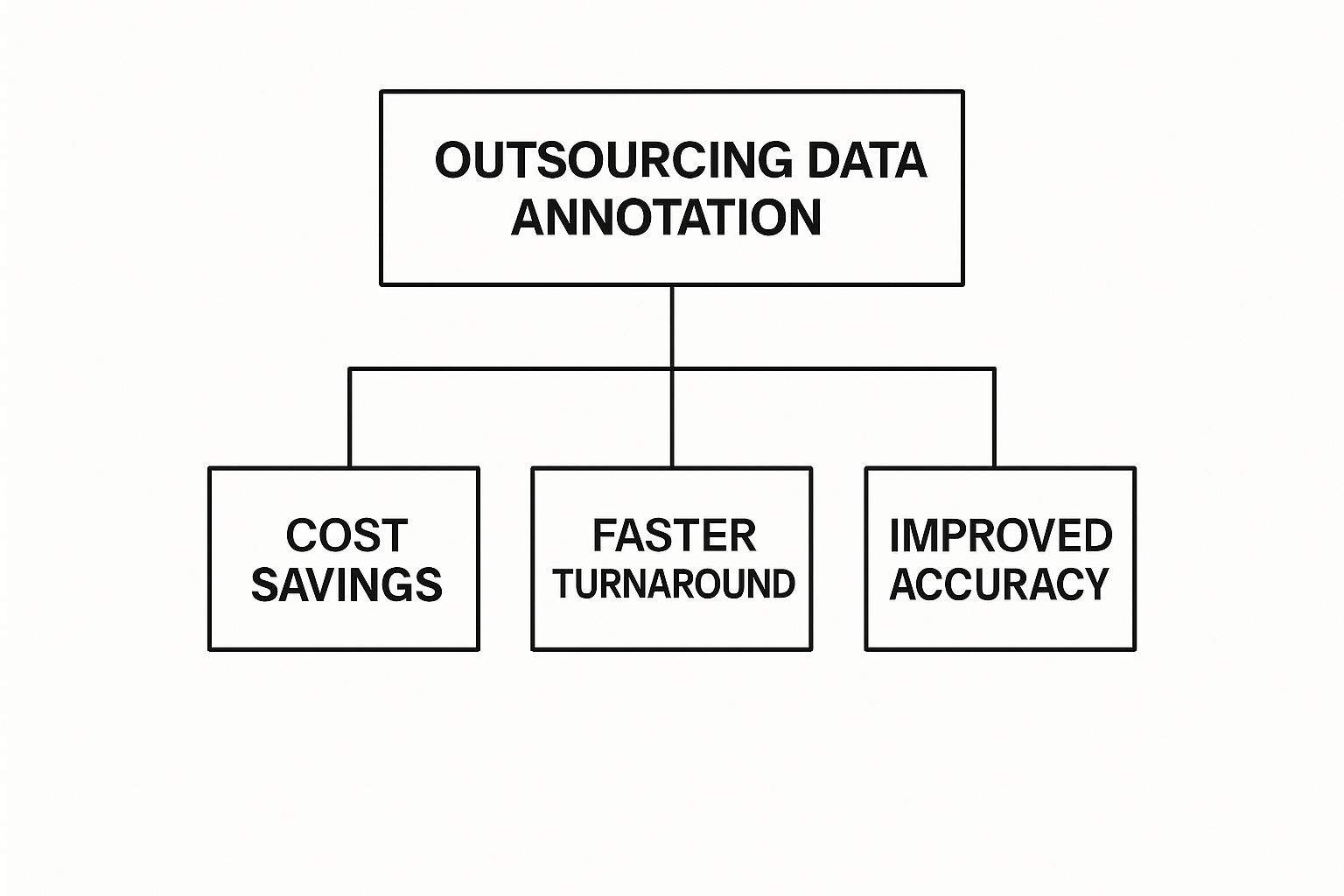

This kind of detailed work is a big reason why companies choose to outsource. The infographic below highlights the main drivers for seeking outside help, which often boil down to needing higher accuracy and faster project completion.

As the diagram shows, it’s not just about cutting costs. It's about tapping into specialized expertise to build a better, more accurate AI model from the ground up.

Text Annotation for Language Models

Text annotation is all about teaching machines to grasp the meaning, intent, and nuances of human language. It’s the foundation that powers everything from the chatbot on your bank's website to the spam filter in your email.

You can think of text annotation as giving an AI a crash course in grammar, context, and slang. Without it, a machine just sees a string of characters. Labeled text helps it spot patterns, understand relationships, and figure out what someone actually means.

Here are a couple of key methods:

- Named Entity Recognition (NER): This involves spotting and tagging key pieces of information in a block of text—things like people's names, company names, locations, dates, or product IDs. A customer service bot uses NER to instantly pull an order number and product name from a complaint to look up the customer’s history.

- Sentiment Analysis: This is exactly what it sounds like. Annotators label text as positive, negative, or neutral. Brands use this at a massive scale to track social media chatter, giving them a real-time pulse on how the public is reacting to a new ad campaign or product launch.
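Under the hood, NER labels are often stored as character-offset spans. These are then converted into per-token tags for training. The sketch below assumes simple whitespace tokenization and the widely used BIO scheme (B = beginning of an entity, I = inside, O = outside). The example message, the `ORDER_ID` and `PRODUCT` labels, and the single sentiment field are all hypothetical.

```python
import re

# Hypothetical annotated customer message. Entity spans use character
# offsets with an exclusive end, so text[start:end] is the entity text.
text = "Order 48213 for the AquaPro kettle arrived broken."
entities = [
    {"start": 6, "end": 11, "label": "ORDER_ID"},
    {"start": 20, "end": 34, "label": "PRODUCT"},
]
sentiment = "negative"  # one document-level label: positive, negative, or neutral

def to_bio(text, entities):
    """Convert character spans to (token, BIO tag) pairs.

    Assumes whitespace tokenization, so punctuation stays attached to words.
    """
    tagged = []
    for match in re.finditer(r"\S+", text):
        start, end = match.start(), match.end()
        tag = "O"  # outside any entity
        for ent in entities:
            if ent["start"] <= start and end <= ent["end"]:
                prefix = "B" if start == ent["start"] else "I"
                tag = f"{prefix}-{ent['label']}"
                break
        tagged.append((match.group(), tag))
    return tagged

for token, tag in to_bio(text, entities):
    print(f"{token:10} {tag}")
```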

Audio Annotation for Sound and Speech AI

Audio annotation takes sound waves and turns them into structured data that a machine can actually analyze. This is the critical first step for building voice assistants, automated transcription software, and even advanced security systems.

The field is also expanding quickly. As of 2025, the scope of data annotation is moving well beyond just images and text. It now includes more complex data like full-motion video, 3D point clouds from LiDAR sensors, and a huge variety of audio and sensor data. This shift is happening because new AI applications need more diverse and precisely labeled data to work. You can get a deeper look into these future developments by exploring the emerging trends in data annotation on mindy-support.com.

This growth includes very specific audio-related tasks:

- Audio Transcription: The most common form is simply converting spoken language into written text. This is the core technology that allows assistants like Alexa and Siri to understand what you're asking them.

- Sound Event Detection: This goes beyond just words to identify other important sounds. For instance, a smart home security system could be trained to distinguish the sound of breaking glass from a dog barking, then send a specific alert to the homeowner.
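Sound event labels are usually recorded as time-stamped segments. Many sound event detection models, however, are trained on fixed-length frames, so a common preprocessing step converts segments into per-frame, multi-hot targets.

This sketch makes the following assumptions, all hypothetical:

- a 3-second clip;
- 0.5-second frames;
- two sound classes.

A frame is marked positive if it overlaps the event at all, which is one of several reasonable conventions.

```python
# Hypothetical sound-event annotations for a 3-second clip:
# (label, start_seconds, end_seconds).
CLASSES = ["dog_bark", "glass_break"]
events = [
    ("dog_bark", 0.2, 0.9),
    ("glass_break", 1.5, 1.8),
]

def to_frame_targets(events, clip_seconds, frame_seconds, classes):
    """Convert time-stamped segments into per-frame multi-hot targets."""
    n_frames = int(round(clip_seconds / frame_seconds))
    targets = [[0] * len(classes) for _ in range(n_frames)]
    for label, start, end in events:
        col = classes.index(label)
        for i in range(n_frames):
            frame_start = i * frame_seconds
            frame_end = frame_start + frame_seconds
            # Mark the frame if it overlaps the event at all.
            if frame_start < end and frame_end > start:
                targets[i][col] = 1
    return targets

for i, row in enumerate(to_frame_targets(events, 3.0, 0.5, CLASSES)):
    print(f"{i * 0.5:.1f}s", row)
```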

How Annotation Powers Real-World AI Applications

The theory behind data annotation is one thing, but its real power comes to life in the everyday applications it drives. Labeled data is the invisible engine behind some of the biggest leaps in technology, turning abstract AI concepts into tools that can genuinely change our lives. From diagnosing diseases earlier to making our roads safer, high-quality data annotation is where the magic begins.

Think of an AI model as an incredibly bright, but completely inexperienced, apprentice. It has all the potential in the world, but without clear, detailed examples to learn from, it’s useless. AI data annotation services are what provide that critical on-the-job training, giving the model the structured "experience" it needs to perform its job reliably.

Transforming Healthcare Outcomes

In medicine, accuracy isn't just a goal—it's everything. AI models are quickly becoming indispensable partners for doctors and radiologists, but their performance is directly tied to the quality of the data they learned from. This is where meticulous image annotation is absolutely vital.

Specialists carefully annotate medical images like MRIs and CT scans, outlining tumors, highlighting fractures, and flagging other anomalies. An AI trained on thousands of these precisely labeled scans starts to recognize subtle patterns the human eye might miss, especially after a long and tiring shift. This creates a system that can act as a reliable second set of eyes, flagging potential issues for a doctor's final review and ultimately improving diagnostic accuracy.

Making Autonomous Vehicles a Reality

The entire concept of self-driving cars navigating our complex streets is built on a foundation of expertly annotated data. An autonomous vehicle's sensors—its cameras, LiDAR, and radar—are constantly generating a staggering amount of information. Without labels, that data is just a flood of meaningless noise.

Data annotation gives a self-driving car its "situational awareness." It's the difference between seeing a collection of pixels and understanding, "That's a pedestrian, that's a stop sign, and that's an approaching vehicle."

The massive data needs of this industry are a key reason why North America currently leads the global data annotation market, holding a share of approximately 36.7%. The region’s major investments in autonomous driving and advanced healthcare are fueling an enormous demand for these specialized annotation services.

Powering Modern E-commerce and Beyond

Annotation’s influence is all over our daily digital lives, too. Ever used a "visual search" on a shopping site, where you upload a photo of a chair to find similar ones? You're interacting with an AI that was trained on millions of annotated product images. Human annotators have tagged those images with labels like "armchair," "mid-century modern," or "blue velvet," teaching the AI to understand visual characteristics and styles.
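Visual search systems are built in different ways. One common way to feed human-applied tags like these into a model is a multi-label classifier, where each image's tags become a "multi-hot" vector over a fixed vocabulary. The vocabulary below is a hypothetical example. Rejecting unknown tags is a design choice that surfaces annotator typos early.

```python
# Hypothetical tag vocabulary for a furniture catalog.
TAG_VOCAB = ["armchair", "sofa", "mid-century modern", "blue velvet", "leather"]

def multi_hot(tags, vocab=TAG_VOCAB):
    """Encode an image's tags as a 0/1 vector with one slot per vocabulary tag."""
    unknown = set(tags) - set(vocab)
    if unknown:
        # Catch typos or off-spec tags before they reach training.
        raise ValueError(f"tags not in vocabulary: {sorted(unknown)}")
    tag_set = set(tags)
    return [1 if tag in tag_set else 0 for tag in vocab]

print(multi_hot(["armchair", "mid-century modern", "blue velvet"]))
```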

The same idea works for audio. Consider the convenience of voice typing tools, which rely on AI to turn your speech into text. These systems become accurate by training on huge libraries of audio files that have been carefully transcribed and annotated. You can see a practical example in action with AI-powered voice typing in Microsoft Outlook.

At the end of the day, behind every "smart" application is a mountain of well-labeled data. It’s the essential, often-unseen groundwork that makes modern AI not just possible, but safe, reliable, and truly helpful in our world.

How to Choose Your Data Annotation Partner


Picking the right partner for your AI data annotation services is one of the most important calls you'll make for your entire AI project. This isn't just about finding the cheapest service; it's about trusting a company with the very DNA of your AI model. A bad choice here can lead to junk models, wasted money, and major setbacks.

Think of it like building a skyscraper. You wouldn't hire just any contractor to pour the foundation—you'd find a specialist who lives and breathes structural engineering. Your data annotation partner is that specialist. They are building the foundation your AI stands on, so any cracks or mistakes at this stage will compromise everything you build on top of it.

To make a smart choice, you need to look past the price tag. The best partners stand out in four key areas: Quality, Scalability, Security, and genuine Domain Expertise.

The Four Pillars of a Great Annotation Partner

When you're vetting potential vendors, use these four pillars as your guide. It's a simple framework that helps you cut through the sales pitches and get to what really counts.

Quality Assurance Processes

This is non-negotiable. It's the most critical pillar. Ask them straight up: "Walk me through your quality control workflow." Do they have multiple annotators label the same piece of data to check for agreement? Do they have review stages? What about automated checks? A serious provider will have a multi-layered system to ensure the labels are accurate and consistent.
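The "multiple annotators, check for agreement" step can be as simple as a majority vote. This is a minimal sketch, assuming three annotators per item and a hypothetical two-thirds agreement threshold:

- Items that clear the threshold get the consensus label.
- Everything else is routed to an expert reviewer.

Vendors use many variations of this, such as weighted votes or adjudication rounds. Treat the sketch as an illustration of the idea rather than a standard.

```python
from collections import Counter

def consensus(labels, min_agreement=2 / 3):
    """Majority vote over one item's labels.

    Returns (label, agreement, needs_review). label is None when the
    annotators agree too little to trust any single answer.
    """
    counts = Counter(labels)
    top_label, top_count = counts.most_common(1)[0]
    agreement = top_count / len(labels)
    if agreement >= min_agreement:
        return top_label, agreement, False
    return None, agreement, True  # escalate to an expert reviewer

# Hypothetical labels from three annotators per image.
votes = {
    "img_001": ["cat", "cat", "cat"],
    "img_002": ["cat", "dog", "cat"],
    "img_003": ["cat", "dog", "fox"],
}

for item_id, labels in votes.items():
    label, agreement, needs_review = consensus(labels)
    status = "REVIEW" if needs_review else label
    print(f"{item_id}: {status} (agreement {agreement:.2f})")
```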

Scalability and Flexibility

Your data needs are going to change. They always do. You need a partner who can handle it when you suddenly need to process 10x more data than last month. Can they bring on more annotators quickly without letting quality slide? A partner who can't scale with you today will become a massive bottleneck tomorrow.

Robust Security and Compliance

Your data is valuable, and often sensitive. How will they protect it? Look for real security credentials, such as a SOC 2 audit report. Ask them to detail their data handling procedures, especially if you're dealing with personal information. For anyone in healthcare or finance, compliance with regulations like HIPAA and GDPR isn't a nice-to-have; a vendor without it is a deal-breaker.

Verifiable Domain Expertise

Does the vendor actually understand your industry? Annotating medical scans is a world away from labeling legal contracts. A partner with proven experience in your field will get the nuances right away, saving you from endless back-and-forth and producing much cleaner data from day one.

A Practical Checklist for Vendor Evaluation

To make this framework more actionable, here’s a simple checklist of questions to bring into your vendor interviews. The answers—or lack thereof—will tell you everything you need to know about their true capabilities.

Vendor Evaluation Checklist

Here’s a quick-and-dirty checklist to help you compare potential partners side-by-side.

| Evaluation Criteria | Key Questions to Ask | Why It Matters |
|---|---|---|
| Quality Assurance | Can you describe your quality control and validation process? What is your typical inter-annotator agreement (IAA) score? | This reveals their commitment to accuracy and gives you a real number to measure them by. |
| Scalability | How do you handle sudden increases in data volume? What is your process for scaling the annotation team for our project? | This tests their operational agility and shows whether they can keep up as your project grows. |
| Security Protocols | What are your data security and privacy measures? Are you compliant with regulations relevant to our industry (e.g., HIPAA, GDPR)? | This confirms they can be trusted with your sensitive data, protecting you from huge legal and business risks. |
| Domain Expertise | Have you worked on similar projects in our industry before? Can you provide case studies or references? | This proves they have the contextual understanding needed to handle the specific details of your data. |

Choosing the right vendor is a strategic decision that directly impacts your model's performance and your project's timeline. While there are tons of companies offering these services, it's worth the effort to find one that truly aligns with your standards. For a deeper dive into specific providers, check out this guide on the top 10 data annotation service companies in India for 2025.

Ultimately, you want to avoid the "race to the bottom" on price. Chasing the lowest cost often leads to hidden problems, like poor data quality or unethical labor practices, which can poison your entire AI initiative.

By focusing on these four pillars and asking tough questions, you can find a partner who will deliver the high-quality, secure, and scalable data you need. It’s this upfront diligence that ensures your investment actually pays off with a powerful, reliable AI model.

Best Practices for Successful Annotation Projects

Successfully pulling off an AI annotation project takes more than a massive dataset and a vendor. It requires a smart playbook—a set of best practices that turns a potential money pit into a high-value asset for your business. Without a clear plan, even the most promising AI initiatives can get bogged down by bad data.

Think of it like building a house. You wouldn't start pouring concrete without detailed blueprints, a solid foundation, and constant communication with your construction crew. The same logic applies here. Following these battle-tested practices is essential for any team using AI data annotation services and is the key to ensuring your final AI model is reliable, accurate, and built to spec.

Create Crystal-Clear Annotation Guidelines

Your annotation guidelines are the single most important document you will create. They are the absolute source of truth for your annotators, spelling out precisely what a correct label looks like. I've seen it time and again: vague or incomplete instructions are the number one cause of inconsistent, low-quality data.

These guides need to be loaded with visual examples of both correct and incorrect labels, especially for those tricky edge cases. For instance, if you're labeling pedestrians, your guide must clarify what to do with a person who is partially hidden behind a car or only seen in a window's reflection. The more ambiguity you can eliminate upfront, the less cleanup you'll be forced to do later.

Run a Pilot Project First

Before you even think about committing to your entire dataset, always start with a small pilot project. This is your chance to test-drive your guidelines on a small, manageable batch of data, usually around 1-2% of the total volume. A pilot project is a crucial stress test that will immediately expose weaknesses in your instructions and uncover confusing scenarios you never even considered.

A pilot project is the best, most cost-effective way to find flaws in your process. Fixing an issue in your guidelines after labeling 100 images is easy. Fixing it after labeling 100,000 images is a disaster.

This initial run gives you invaluable feedback, allowing you to fine-tune your guidelines based on how they perform in the real world. It also helps calibrate expectations with your annotation partner and sets a firm quality benchmark for the rest of the project.
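Draw the pilot batch at random rather than taking the first files in a folder. That makes it more likely the pilot surfaces the same edge cases the full dataset contains. This sketch makes three choices:

- It takes 2% of the items, the top of the 1-2% range mentioned above.
- It sets a hypothetical floor of 50 items, so tiny datasets still get a meaningful pilot.
- It uses a fixed seed, so the batch is reproducible.

If your data has known categories, sampling within each category is a natural refinement.

```python
import random

def pilot_sample(item_ids, fraction=0.02, minimum=50, seed=42):
    """Pick a reproducible random pilot batch from a list of item IDs."""
    k = max(minimum, round(len(item_ids) * fraction))
    k = min(k, len(item_ids))          # never ask for more items than exist
    rng = random.Random(seed)          # fixed seed -> same pilot every run
    return rng.sample(item_ids, k)

# Hypothetical dataset of 100,000 image IDs.
all_items = [f"img_{i:06d}" for i in range(100_000)]
pilot = pilot_sample(all_items)
print(len(pilot), pilot[:3])
```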

Maintain a Constant Feedback Loop

Data annotation is not a "set it and forget it" activity. To get it right, you need a continuous, open line of communication between your internal team and your annotation partner. It's a guarantee that edge cases and weird data variations will pop up, and you need a system in place to address them quickly.

Set up a simple way for your annotators to ask questions and for your team to provide clear, timely answers. This back-and-forth process of feedback and refinement has a compounding effect on quality. The more your annotators learn about your specific needs, the better and more consistent their work gets, which directly improves your model's performance.

Measure What Truly Matters

You can't improve what you don't measure. Establishing key performance indicators (KPIs) is fundamental to monitoring the health and effectiveness of your annotation project. These metrics give you objective data to assess quality and make sure you're actually getting the value you're paying for.

Here are the key metrics you absolutely have to track:

- Inter-Annotator Agreement (IAA): This measures consistency by seeing how well different annotators' labels match up on the same piece of data. A high IAA score is a great sign that your guidelines are clear.

- Label Accuracy: This is a direct measure of correctness, usually checked by comparing the work against a "gold standard" set of labels that an expert has reviewed.

- Throughput: This simply tracks the volume of data being annotated over time, which helps you keep an eye on your project timeline.
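All three metrics are straightforward to compute. The sketch below uses Cohen's kappa, one common IAA statistic for two annotators. It corrects raw agreement for the agreement you would expect by chance. Other measures, such as Fleiss' kappa or Krippendorff's alpha, handle more than two annotators. The labels, gold set and hours are hypothetical.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Probability both annotators pick the same class by chance.
    classes = count_a.keys() | count_b.keys()
    expected = sum(count_a[c] * count_b[c] for c in classes) / (n * n)
    if expected == 1:
        # Both used one identical class for everything; kappa is undefined,
        # and this sketch chooses to report it as perfect agreement.
        return 1.0
    return (observed - expected) / (1 - expected)

def gold_accuracy(labels, gold):
    """Share of gold-standard items that the annotators labeled correctly."""
    scored = [item for item in gold if item in labels]
    if not scored:
        raise ValueError("no overlap between labels and gold set")
    return sum(labels[item] == gold[item] for item in scored) / len(scored)

def throughput(items_done, hours):
    """Items annotated per hour."""
    return items_done / hours

annotator_a = ["pos", "pos", "neg", "neg", "pos", "neg"]
annotator_b = ["pos", "neg", "neg", "neg", "pos", "neg"]
print(round(cohens_kappa(annotator_a, annotator_b), 3))

labels = {"i1": "cat", "i2": "dog", "i3": "cat"}
gold = {"i1": "cat", "i2": "cat"}
print(gold_accuracy(labels, gold))
print(throughput(1200, 40))
```

Here the two annotators agree on 5 of 6 items (about 83%). With these label frequencies, though, chance alone would produce 50% agreement, so kappa comes out at about 0.67.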

Keeping a close watch on these numbers helps turn raw data into actionable insights—a core principle of building effective AI. If you want to dig deeper into this, you can learn how to master data-driven decision making to get even more out of your projects. And, of course, a solid legal framework is crucial for any outsourced work; looking at essential software development contract samples can help you understand what protections to put in place.

Answering Your Top Annotation Questions

Once you've wrapped your head around the different types of annotation and what to look for in a partner, the practical questions start to surface. It's time to talk about the nitty-gritty: money, deadlines, and why we still need people in the loop.

Getting straight answers on these topics is what separates a good plan from a great one. Let's tackle the questions that project managers, engineers, and business leaders always ask before kicking off an annotation project.

How Are Data Annotation Services Priced?

Everyone wants to know what this is going to cost. But pricing for data annotation isn't a simple, off-the-shelf number. It depends entirely on the complexity of your data and the sheer volume you need to get through.

Think of it like this: hiring someone to paint a single, flat wall is a straightforward job with a predictable cost. But asking them to paint an ornate cathedral ceiling with intricate details? That's a completely different project, requiring more skill and time, and the price will reflect that.

Most pricing models fall into one of these buckets:

- Per-Item or Per-Annotation: This is your most basic model. You pay a set price for every single image, document, or label. It’s perfect for high-volume, straightforward tasks like drawing simple bounding boxes around cars in thousands of photos.

- Hourly Rate: For more complex or evolving projects, you pay for the annotator's time. This works best when the guidelines are still being refined or when you need a genuine subject matter expert, like a board-certified radiologist, to analyze medical scans.

- Project-Based Fee: You and your vendor agree on a single, fixed price for the entire project. This gives you budget certainty, which is great, but it requires you to have an iron-clad scope of work defined from day one.

The single biggest factor driving cost is complexity. A simple image classification might cost only a few cents per image. In contrast, detailed semantic segmentation on a high-resolution MRI could run you several dollars or more. The annotator's skill level matters, too: a generalist is usually more affordable than a trained specialist.
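To see how the pricing models compare, it helps to run the arithmetic. Every rate and per-item time below is a hypothetical placeholder chosen only to illustrate the calculation, not a quote from any vendor.

```python
def per_item_cost(n_items, price_per_item):
    """Per-item pricing: a fixed price for every image, document, or label."""
    return n_items * price_per_item

def hourly_cost(n_items, minutes_per_item, hourly_rate):
    """Hourly pricing: you pay for annotator time, so effort per item drives cost."""
    hours = n_items * minutes_per_item / 60
    return hours * hourly_rate

# Hypothetical: 50,000 photos, simple classification at $0.04 each.
simple = per_item_cost(50_000, 0.04)

# Hypothetical: 600 MRI scans, 6 expert minutes each at $75/hour.
expert = hourly_cost(600, 6, 75)

print(f"simple classification: ${simple:,.2f} total, $0.04 per image")
print(f"MRI segmentation: ${expert:,.2f} total, ${expert / 600:.2f} per scan")
```

With these numbers, the MRI project has far fewer items but costs more than twice as much in total. At $7.50 per scan, it sits in the "several dollars" range described above.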

What Is a Realistic Turnaround Time for a Project?

Project timelines can swing wildly, from just a few days to several months. The timeline really comes down to three things: the total amount of data you have, how difficult the annotation is, and how many people are working on it.

A common mistake is underestimating the time needed for the initial setup and quality assurance cycles. Rushing these early stages almost always leads to poor data quality and costly rework down the line.

Here’s a rough breakdown of how a project usually flows:

- Pilot Phase (1-2 weeks): This is where the magic starts. You’ll finalize the instructions, train the team, and run a small test batch to iron out all the kinks before scaling up.

- Main Annotation Phase (Varies): This is the heavy lifting. A project with 10,000 images needing moderately complex polygon annotations could take a dedicated team about 3-5 weeks.

- Quality Review (Ongoing): Good teams check for quality constantly. However, there’s usually a final review stage that can add another week or so to the end of the project.
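A rough estimate for the main annotation phase falls out of three numbers: total items, minutes per item, and team size. The sketch below adds a hypothetical 15% overhead for review and rework. The other inputs (6 minutes per polygon-annotated image, an 8-person team, 6 productive hours per day) are also assumptions, not benchmarks.

```python
import math

def annotation_weeks(n_items, minutes_per_item, annotators,
                     hours_per_day=6, days_per_week=5, qa_overhead=0.15):
    """Estimate calendar weeks for the main annotation phase."""
    labeling_hours = n_items * minutes_per_item / 60
    total_hours = labeling_hours * (1 + qa_overhead)    # review and rework
    team_hours_per_week = annotators * hours_per_day * days_per_week
    return total_hours / team_hours_per_week

weeks = annotation_weeks(10_000, minutes_per_item=6, annotators=8)
print(f"{weeks:.1f} weeks (plan for {math.ceil(weeks)})")
```

With these assumptions, the 10,000-image example lands at roughly 4.8 weeks, inside the 3-5 week range quoted above. Doubling the team halves the estimate on paper.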

A reliable vendor will give you a detailed project plan with clear milestones. And remember, trying to rush data annotation is a false economy. A widely cited industry estimate holds that up to 80% of the time on an AI project goes into data preparation, and annotation is a huge chunk of that. Take the time to get it right.

Why Is a Human-in-the-Loop Still So Critical?

With all the buzz around automation, it's a fair question: why are people still at the center of AI data annotation services? The truth is, even the smartest AI models get tripped up by nuance, context, and ambiguity—all things that human brains handle with ease. This is where a "human-in-the-loop" (HITL) approach shines, blending the speed of machines with the keen judgment of a person.

For example, a model might be able to pre-label 80% of your data with high confidence, which is a fantastic head start. But you still need a human expert to:

- Handle Edge Cases: Sort out that tricky 20% of the data where the model was confused or made a mistake.

- Validate AI Output: Quickly scan the machine-generated labels to catch and fix subtle errors that an algorithm would miss.

- Refine Guidelines: Spot ambiguous examples and provide feedback to make the annotation instructions clearer for both the human team and the AI.

This hybrid model is far more efficient than doing everything by hand and infinitely more accurate than letting a machine run wild. Humans act as the ultimate quality control, making sure your final dataset is solid enough to build a truly high-performing AI.
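The pre-label-then-review workflow is often implemented as confidence-based routing. This is a minimal sketch, assuming the model outputs a confidence score between 0 and 1 for each pre-label. The 0.9 threshold and the "audit every Nth confident item" rule are hypothetical settings you would tune during the pilot.

```python
from dataclasses import dataclass

@dataclass
class PreLabel:
    item_id: str
    label: str
    confidence: float  # model's confidence in [0, 1]

def route(pre_labels, threshold=0.9, audit_every=20):
    """Split machine pre-labels into auto-accepted and human-review queues."""
    auto_accepted, human_queue = [], []
    confident_seen = 0
    for p in pre_labels:
        if p.confidence < threshold:
            human_queue.append(p)          # uncertain: a person labels it
            continue
        confident_seen += 1
        if confident_seen % audit_every == 0:
            human_queue.append(p)          # spot-check a confident label
        else:
            auto_accepted.append(p)
    return auto_accepted, human_queue

batch = [
    PreLabel("img_1", "car", 0.95),
    PreLabel("img_2", "car", 0.97),
    PreLabel("img_3", "pedestrian", 0.50),
    PreLabel("img_4", "car", 0.99),
    PreLabel("img_5", "cyclist", 0.60),
]
auto, review = route(batch, audit_every=2)
print([p.item_id for p in auto])
print([p.item_id for p in review])
```

The audit rule matters because a model can be confidently wrong. Sending a small, regular share of high-confidence labels to people is what lets humans act as the quality control described above.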

Ready to build a powerful AI model with clean, accurate, and scalable data? Zilo AI offers end-to-end data annotation and manpower solutions tailored to your project's unique needs. Partner with us to accelerate your AI development with confidence. Learn more about our annotation services.