Here’s the simple truth: AI data labeling is the process of adding descriptive tags or labels to raw, unstructured data—think images, text files, or audio clips. It’s what makes that data understandable for a machine learning model.

Think of it like this: you're creating a detailed answer key for an AI that's about to study for a huge exam. This "answer key" provides the ground truth, the correct answers it needs to learn from.

Why AI Data Labeling Is Your Most Critical Ingredient

Imagine trying to teach a child what a "cat" is by giving them a library full of unlabeled photos. Without anyone pointing and saying, "That's a cat," they'd have no context. They’d have no way to connect the furry, four-legged creature in a picture to the concept you're trying to teach.

AI models face the exact same problem. They are incredibly powerful learners, but they start as a complete blank slate. This is where data labeling becomes the invisible engine driving so much of modern technology.

For an AI to learn a task, it needs to be trained on vast amounts of carefully labeled data. If you want a self-driving car to recognize stop signs, it first needs to be shown thousands—even millions—of images where a human has meticulously drawn a box around every stop sign and tagged it with the label "stop sign."

The quality of an AI model is almost always limited by the quality and quantity of its training data. It's a simple but profound principle. Without high-quality labels, even the most sophisticated algorithms will stumble.

The Foundation of Modern AI

This process isn't just a minor technical step; it's the very foundation on which entire AI applications are built. The accuracy of the labels you create translates directly into the real-world performance and safety of the final system.

Let's look at a few examples:

- In Healthcare: AI models that analyze medical scans to detect diseases rely entirely on labels created by radiologists who carefully outline tumors or other anomalies. The stakes are incredibly high, as these labels are critical for the AI to provide reliable diagnostic support.

- In E-commerce: Those recommendation engines that seem to know exactly what you want? They learn your preferences because products are labeled with categories, styles, and other attributes. Good labeling means you see relevant suggestions; bad labeling means you get shown things you'd never buy.

- In Customer Service: Chatbots understand what you're asking because they've been trained on mountains of text data that has been labeled for intent (e.g., complaint, question) and sentiment (positive, negative, neutral).

This meticulous work forges the essential link between raw, chaotic information and intelligent, actionable insights. For any organization looking to build effective AI, getting this right is non-negotiable. It's often one of the most important early decisions, which is why we explore why data annotation is critical for AI startups in 2025.

Ultimately, data labeling is what transforms abstract data into the structured knowledge that allows machines to see, hear, and understand the world around them.

Understanding the Core Data Labelling Methods

When it comes to AI data labelling, there’s no single “right” way to do it. Think of it less as a rigid procedure and more as a spectrum of techniques, each with its own trade-offs in speed, cost, and accuracy. The best approach for you will hinge entirely on your project's complexity, the size of your dataset, and how much precision you truly need.

Let’s walk through the main ways data gets its context, starting with the most hands-on approach and moving toward full automation. Getting this choice right is the first step in building a successful AI model.

Manual Labelling: The Human Touch

This is the original, foundational method of data labelling. It’s exactly what it sounds like: human annotators sit down and painstakingly apply labels to every single piece of data, following a strict set of guidelines. Think of a master watchmaker assembling a timepiece by hand—every component is placed with deliberate care and expertise.

This hands-on approach is the undisputed gold standard for quality. It's simply irreplaceable for tasks that require deep contextual understanding, specialized knowledge, or subjective judgment. For example, you’d want trained radiologists labelling MRI scans to spot subtle tumors, or linguists to correctly identify sarcasm in customer feedback. An algorithm just can't replicate that level of nuance.

Manual labelling delivers the highest quality but is also the most time-consuming and expensive method. It’s best reserved for creating foundational "ground truth" datasets or for projects where even a small margin of error is unacceptable.

The incredible demand for this level of quality is why the AI data labelling services market is exploding. Projections show the market reaching an estimated $10 billion in 2025 and soaring past $40 billion by 2033. This growth, detailed in recent market analysis, highlights just how critical human expertise remains in fields like healthcare and automotive development.

Semi-Automated Labelling: A Human-Machine Partnership

Semi-automated labelling strikes a powerful balance between human precision and machine efficiency. It’s a "human-in-the-loop" model where an AI takes the first pass at labelling the data. Then, human annotators review, correct, and validate the AI’s work.

It’s like a skilled carpenter using a power saw. The saw does the heavy, repetitive cutting with incredible speed, but the carpenter guides it, makes the fine adjustments, and ensures the final piece is perfect. This blend makes the whole process vastly more efficient than doing everything by hand.

This approach really shines in large-scale projects where you can tolerate some initial errors. For instance:

- An e-commerce site could use an AI to pre-label thousands of product images with categories like "dresses" or "handbags."

- Human reviewers would then quickly scan the results, correcting the few instances where the AI mistook a skirt for a dress.

- The best part? This feedback loop actively teaches the model, making its next batch of predictions even more accurate.
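The pre-label-then-review loop above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Prediction` record, the `route_for_review` and `apply_corrections` helpers, and the 0.9 confidence threshold are all hypothetical. Routing by model confidence is one common way to decide which machine labels a human looks at first; it is not something the workflow above prescribes.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's own score in [0, 1]; assumed to be available


def route_for_review(predictions, threshold=0.9):
    """Split model pre-labels into auto-accepted items and a human review queue."""
    accepted, review_queue = [], []
    for pred in predictions:
        if pred.confidence >= threshold:
            accepted.append(pred)
        else:
            review_queue.append(pred)
    return accepted, review_queue


def apply_corrections(review_queue, corrections):
    """Apply human corrections; items without a correction keep the model's label."""
    return [
        Prediction(p.item_id, corrections.get(p.item_id, p.label), 1.0)
        for p in review_queue
    ]


# Hypothetical batch of pre-labelled product images.
predictions = [
    Prediction("img_001", "dresses", 0.97),
    Prediction("img_002", "dresses", 0.55),  # actually a skirt
    Prediction("img_003", "handbags", 0.93),
]

accepted, review_queue = route_for_review(predictions)
reviewed = apply_corrections(review_queue, {"img_002": "skirts"})
final_labels = {p.item_id: p.label for p in accepted + reviewed}
```

The corrected items in `reviewed` are the ones you would feed back into the next training round, which is where the feedback loop comes from.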

Automated Labelling: Speed at Scale

At the far end of the spectrum is fully automated labelling, where algorithms handle the entire annotation process without any direct human input. This is where you see advanced techniques like programmatic labelling and synthetic data generation, both designed for working at an absolutely massive scale.

Programmatic labelling uses scripts or simple rules (heuristics) to apply labels automatically. A basic example would be a script that labels any customer review containing words like "amazing" or "perfect" as "positive sentiment." It's fast, but can be blunt.
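Here is a minimal sketch of that keyword rule. The function name `label_review` and the sample reviews are made up for illustration; the rule abstains (returns `None`) when no keyword matches, which is an assumption about how you would want it to behave.

```python
import re

POSITIVE_WORDS = {"amazing", "perfect"}  # the keywords from the example above


def label_review(text):
    """Return "positive" if a keyword appears as a whole word, else None (abstain)."""
    tokens = set(re.findall(r"[a-z']+", text.lower()))
    return "positive" if tokens & POSITIVE_WORDS else None


# Hypothetical reviews, invented for this sketch.
reviews = [
    "Amazing quality, would buy again",
    "Arrived late and the box was damaged",
    "Not exactly perfect, it broke in a week",
]
labels = [label_review(r) for r in reviews]
```

The third review is tagged positive even though it is a complaint, because the rule only sees the word "perfect". That is the bluntness in practice.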

Synthetic data generation is even more sophisticated. It involves creating brand-new, artificial data that is born perfectly labelled. This is a game-changer for training models on rare or dangerous scenarios. Instead of trying to find thousands of real-world examples of a self-driving car narrowly avoiding an accident, you can generate endless variations of that scenario synthetically, creating a much more robust and safe AI.
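To make "born perfectly labelled" concrete, here is a deliberately tiny sketch. It draws one filled rectangle on a blank grid and records the bounding box it just drew, so the label is correct by construction. Real synthetic pipelines use simulators or generative models; the function `make_synthetic_sample`, the 32x32 grid, and the size ranges are all assumptions chosen to keep the example short.

```python
import random


def make_synthetic_sample(rng, width=32, height=32):
    """Draw one filled rectangle on a blank grid and return (image, label)."""
    box_w = rng.randint(4, 12)
    box_h = rng.randint(4, 12)
    x_min = rng.randint(0, width - box_w)
    y_min = rng.randint(0, height - box_h)

    image = [[0] * width for _ in range(height)]
    for y in range(y_min, y_min + box_h):
        for x in range(x_min, x_min + box_w):
            image[y][x] = 1

    # The label is known exactly because we placed the object ourselves.
    # bbox is (x_min, y_min, x_max, y_max) with the max edges exclusive.
    label = {"label": "object", "bbox": (x_min, y_min, x_min + box_w, y_min + box_h)}
    return image, label


rng = random.Random(0)  # fixed seed so the dataset is reproducible
dataset = [make_synthetic_sample(rng) for _ in range(100)]
```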

Comparing Data Labelling Methods

To help you decide which path is right for your project, here’s a quick side-by-side comparison of the primary data labelling approaches. Choosing the right one is about balancing your need for quality against your constraints on time and budget.

| Method | Key Characteristic | Best For | Primary Challenge |
|---|---|---|---|
| Manual | Human-driven, high precision | Creating "ground truth" data; complex, nuanced tasks (e.g., medical imaging, sentiment analysis) | Slow, expensive, and difficult to scale |
| Semi-Automated | Human-in-the-loop (AI assists) | Large-scale projects where speed and quality need to be balanced; iterative model improvement | Requires managing both human workforce and AI models effectively |
| Automated | Machine-driven, massive scale | Huge datasets with simple patterns; generating data for rare edge cases (synthetic data) | Can lack nuance; quality is highly dependent on the algorithm or rules used |

Ultimately, many modern AI projects use a hybrid approach, perhaps starting with manual labelling to create a high-quality seed dataset, then moving to semi-automated methods to scale up. The key is to understand the strengths and weaknesses of each so you can build the most effective workflow.

Building Your AI Data Labelling Workflow

Putting together a successful AI data labelling project takes more than just good intentions. You need a structured, repeatable process. Think of this workflow as your playbook, guiding your team from a jumble of raw files to a polished, high-value dataset ready to train a powerful model. Trying to wing it is a surefire way to blow your budget, miss deadlines, and end up with a poorly performing AI.

The whole thing boils down to a fundamental truth in machine learning: garbage in, garbage out. The quality of your final AI system is a direct reflection of the care you put into every stage of the labelling workflow. Each step builds on the last, and a weak link anywhere along the chain can jeopardize the entire project.

Phase 1: Data Collection and Preparation

Before a single label even gets applied, your first job is to gather and organize your raw data. This isn't just about downloading files; it's about curating a dataset that truly mirrors the real-world problem you want your AI to solve. For example, if you're training a model to spot defective products on an assembly line, you need images of all possible defects, from every angle, and under different lighting conditions.

Once you have your data, it needs a good cleaning. This involves practical tasks like:

- Removing duplicates to keep your model from getting biased.

- Resizing images so they're all a consistent format.

- Transcribing audio to text before you can run sentiment analysis on it.

This initial housekeeping is crucial. A clean, well-organized dataset makes the actual labelling phase dramatically faster and more accurate.
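As one example of this housekeeping, duplicate removal can be sketched with a content hash. This version only catches byte-for-byte copies; near-duplicates (a resized or re-encoded image, say) need a different technique. The helper name `remove_exact_duplicates` and the sample file names are hypothetical.

```python
import hashlib


def remove_exact_duplicates(items):
    """Keep the first occurrence of each distinct payload.

    items is an iterable of (name, payload_bytes) pairs.
    """
    seen = set()
    unique = []
    for name, payload in items:
        digest = hashlib.sha256(payload).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append((name, payload))
    return unique


# Stand-in byte strings; in practice these would be file contents read from disk.
raw_files = [
    ("a.jpg", b"\x01\x02"),
    ("b.jpg", b"\x03"),
    ("copy_of_a.jpg", b"\x01\x02"),
]
cleaned = remove_exact_duplicates(raw_files)
```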

Phase 2: Annotation Guidelines and Labelling

With your data prepped and ready, it's time to create crystal-clear annotation guidelines. These guidelines are the constitution for your project, spelling out exactly how each label should be applied. They have to be specific and unambiguous to get rid of any guesswork. For instance, instead of vaguely saying "label the cars," a solid guideline would say, "Draw a tight bounding box around all four-wheeled passenger vehicles, excluding trucks and buses."

Only when these rules are set in stone can the real labelling begin. This is where annotators—whether they're human, machine-assisted, or fully automated—get hands-on and apply the tags based on your guidelines. Consistency is everything here. Every single annotator must interpret the rules in the exact same way for every piece of data.
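Some parts of a guideline can be turned into automatic checks that run on every submitted label. The sketch below is one way to do that for the passenger-vehicle rule; the label name `passenger_vehicle`, the annotation dictionary layout, and the `validate_box` helper are assumptions made for this example. A check like this cannot tell whether a box is "tight", so it complements human review rather than replacing it.

```python
ALLOWED_LABELS = {"passenger_vehicle"}  # trucks and buses are excluded by the guideline


def validate_box(annotation, image_width, image_height):
    """Return a list of guideline violations for one bounding-box annotation."""
    problems = []
    if annotation["label"] not in ALLOWED_LABELS:
        problems.append(f"label {annotation['label']!r} is not allowed")

    x_min, y_min, x_max, y_max = annotation["bbox"]
    inside = 0 <= x_min < x_max <= image_width and 0 <= y_min < y_max <= image_height
    if not inside:
        problems.append("box is empty or extends outside the image")
    return problems


good = {"label": "passenger_vehicle", "bbox": (10, 20, 110, 90)}
bad = {"label": "truck", "bbox": (600, 20, 700, 90)}

good_problems = validate_box(good, image_width=640, image_height=480)
bad_problems = validate_box(bad, image_width=640, image_height=480)
```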

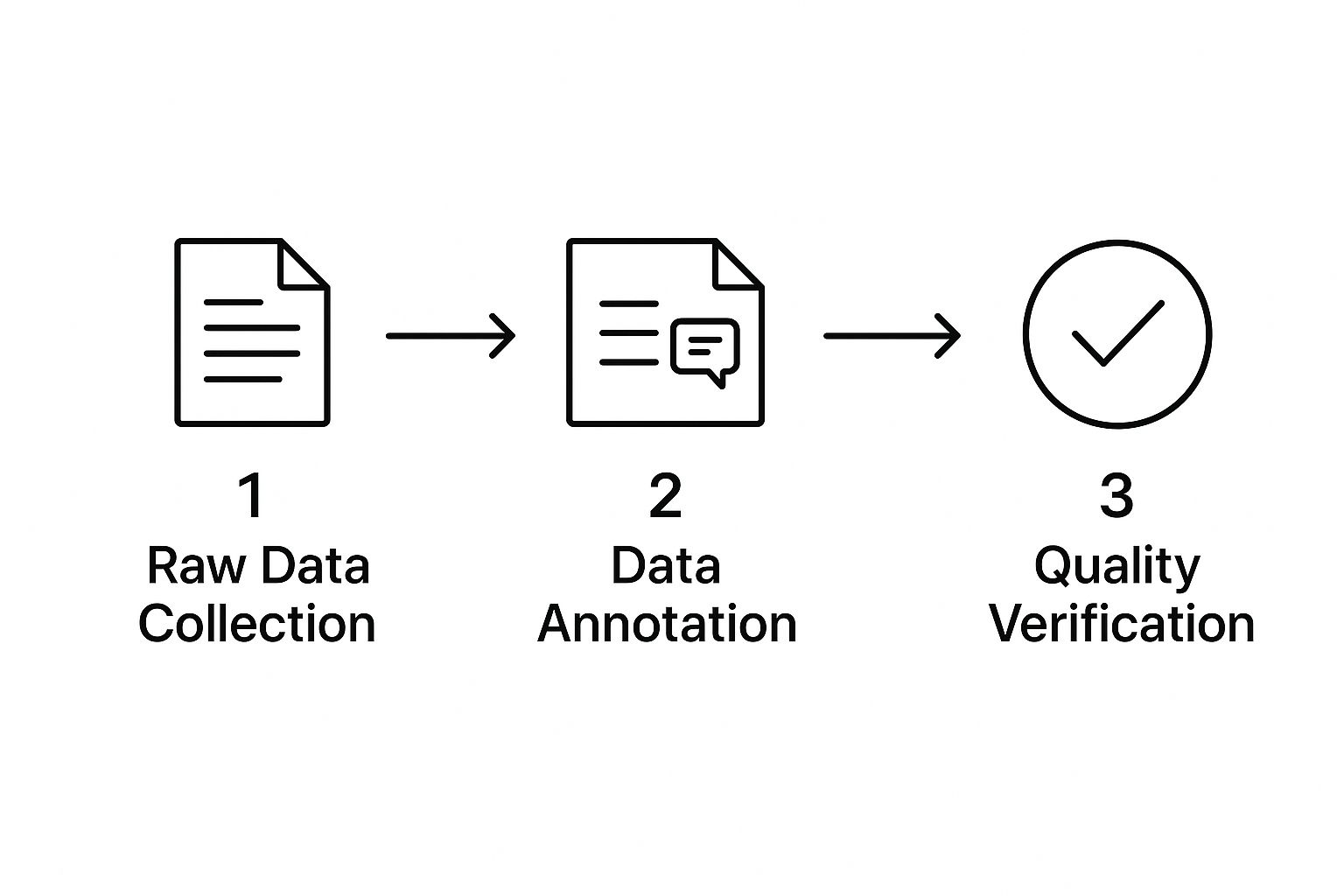

This simple flow shows how raw data is transformed into a verified asset.

As you can see, annotation isn’t the end of the road. Quality verification is an equally important stage that makes sure the labels are reliable.

Phase 3: Quality Assurance and Iteration

After the first pass of labelling is done, you absolutely must have a robust quality assurance (QA) process. High-quality datasets aren't just labelled; they're verified.

Quality assurance isn't about finding fault; it's about building confidence. It’s the process that ensures your "ground truth" is truly true, giving you a reliable foundation for model training.

There are a few solid techniques for enforcing quality and consistency during the AI data labelling process:

- Consensus Scoring: Have multiple annotators label the same piece of data. Then, compare the labels. Any disagreements get flagged for a senior annotator to review. A high consensus score is a great sign that your guidelines are clear.

- Audit Trails: A project manager or expert reviews a random sample of the labelled data (say, 5-10%) to catch any systemic errors or misunderstandings of the rules.

- Benchmark Testing: You can slip "gold standard" assets—items with pre-established, correct labels—into the workflow to discreetly test how well individual annotators are performing.
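The three checks above can be sketched with the standard library. Everything here is illustrative: the function names, the majority-vote rule for consensus, the 10% sample fraction, and the sample data are assumptions, and real labelling platforms usually ship their own versions of these checks.

```python
import random
from collections import Counter


def consensus(labels_by_annotator):
    """Return (majority_label, agreement_rate) for one item.

    Ties go to whichever label was seen first.
    """
    counts = Counter(labels_by_annotator.values())
    top_label, top_count = counts.most_common(1)[0]
    return top_label, top_count / len(labels_by_annotator)


def audit_sample(item_ids, fraction=0.1, seed=0):
    """Pick a reproducible random sample of items for expert review."""
    k = max(1, round(len(item_ids) * fraction))
    return random.Random(seed).sample(item_ids, k)


def gold_accuracy(annotator_labels, gold_labels):
    """Share of gold-standard items the annotator labelled correctly."""
    scored = [item for item in gold_labels if item in annotator_labels]
    if not scored:
        return None
    correct = sum(annotator_labels[item] == gold_labels[item] for item in scored)
    return correct / len(scored)


votes = {"ann_a": "negative", "ann_b": "negative", "ann_c": "neutral"}
label, agreement = consensus(votes)
flag_for_senior_review = agreement < 1.0  # any disagreement gets escalated

sample = audit_sample([f"item_{i}" for i in range(100)])

gold = {"r1": "positive", "r2": "negative"}
submitted = {"r1": "positive", "r2": "neutral", "r3": "positive"}
accuracy = gold_accuracy(submitted, gold)
```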

Once the data clears QA, it's exported into a format your model can digest, like JSON or CSV. But the job isn't over. The final, crucial step is to embrace the iterative loop: you train your model, test its performance, see where it struggles, and then use those insights to refine your guidelines or add more challenging examples to your dataset for the next round of labelling.
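For the export step, here is a small sketch that writes the same records to both JSON and CSV using only the standard library. The field names (`item_id`, `text`, `label`) and the two records are invented for the example; your training framework will dictate the real schema.

```python
import csv
import io
import json

# Hypothetical labelled records that have passed QA.
records = [
    {"item_id": "ticket_001", "text": "I was charged twice this month", "label": "complaint"},
    {"item_id": "ticket_002", "text": "How do I reset my password?", "label": "question"},
]

# JSON: one string holding the full list of records.
json_export = json.dumps(records, indent=2)

# CSV: written to an in-memory buffer here; pass an open file object instead
# of io.StringIO() to write to disk.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["item_id", "text", "label"])
writer.writeheader()
writer.writerows(records)
csv_export = buffer.getvalue()
```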

Exploring Common Types of Data Annotation

Once you've got your workflow sorted, you can dive into the core task of AI data labeling. But what does "labeling" actually look like? It's definitely not a one-size-fits-all job. The specific method you choose depends entirely on your data and what you need your AI model to learn.

Think of it like giving instructions to a person. Just telling someone to "look at this picture" is pretty vague. But telling them to "find the red car" is much more specific and useful. Data annotation gives machines that same level of clarity, turning raw, jumbled data into a structured lesson plan.

Let's break down the most common ways this is done.

Annotation for Images and Videos

Visual data is everywhere, and its role in AI is only getting bigger. The market reflects this, with image data labeling making up a huge piece of a global market projected to hit $3.84 billion in 2025. This market is expected to grow at a compound annual rate of over 28% through 2033, driven largely by all the new ways we're using visual AI.

Here are the go-to methods for teaching AI to see:

- Bounding Boxes: This is the simplest way to get an AI to spot things. An annotator just draws a rectangle around an object of interest. For example, in an e-commerce dataset, you'd put a box around every shirt or pair of shoes. It’s a straightforward way to teach the AI, "This is a thing, and it's located right here."

- Polygons (Segmentation): Sometimes, a simple box just isn't precise enough. That’s where polygons come in. Annotators carefully trace an object's exact outline, pixel by pixel. This is absolutely critical for tasks that demand precision, like a medical AI learning to identify the exact shape of a tumor in a scan.

- Keypoint Annotation: This method is all about marking specific points of interest on an object. It’s a favorite for pose estimation, where an AI needs to understand how a body is positioned. For instance, annotators might mark the joints (shoulders, elbows, knees) on athletes in a video to train an AI that analyzes form and technique.
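To see how these three annotation types differ as data, here is a rough sketch of what each might look like once stored. The class names, field names, and coordinates are hypothetical and not tied to any particular tool's export format. The `to_bounding_box` method shows that a polygon always contains enough information to derive a box, while the reverse is not true.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


@dataclass
class Polygon:
    label: str
    points: list  # [(x, y), ...] vertices in order around the outline

    def to_bounding_box(self):
        """Smallest axis-aligned box that contains every vertex."""
        xs = [x for x, _ in self.points]
        ys = [y for _, y in self.points]
        return BoundingBox(self.label, min(xs), min(ys), max(xs), max(ys))


@dataclass
class Keypoint:
    name: str
    x: float
    y: float


# Made-up pixel coordinates, for illustration only.
tumor = Polygon("tumor", [(40, 30), (55, 28), (60, 45), (48, 52), (38, 44)])
box = tumor.to_bounding_box()
pose = [Keypoint("left_shoulder", 120, 80), Keypoint("left_elbow", 130, 140)]
```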

Annotation for Text Data

From customer reviews to internal reports, text is another massive source of data. Labeling text helps AI models get a handle on the meaning, context, and relationships hidden within the written word.

Text annotation is about adding a layer of semantic meaning that a machine can process. It’s how an AI learns to read between the lines, understanding not just the words but the intent and emotion behind them.

Common text annotation tasks you'll run into include:

- Named Entity Recognition (NER): This involves spotting and classifying key bits of information, or "entities." For example, an annotator might tag "Apple" as an ORGANIZATION, "Tim Cook" as a PERSON, and "Cupertino" as a LOCATION.

- Sentiment Analysis: Here, the goal is to label a piece of text with its emotional tone. A customer review gushing, "I love this product!" would be tagged Positive. On the other hand, "The shipping was a nightmare" would get tagged Negative.

- Text Classification: This is about assigning an entire sentence or document to a predefined category. An incoming customer support ticket, for example, could be classified as Billing Issue, Technical Support, or Feature Request to make sure it gets to the right person.

Finding a team to handle this meticulous work can be a challenge, but our guide to the top 10 data annotation service companies in India for 2025 can give you some great options.
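As a rough illustration, text labels usually come in two shapes: span-level labels that point at character positions (as in NER) and document-level labels that apply to the whole text (as in sentiment analysis or classification). The sketch below shows both. The record layout and the `find_span` helper are assumptions for this example, not a standard format.

```python
def find_span(text, phrase, label):
    """Locate the first occurrence of phrase and return it as a labeled character span."""
    start = text.index(phrase)
    return {"start": start, "end": start + len(phrase), "label": label}


# Span-level annotation (NER): labels attach to character ranges.
sentence = "Tim Cook leads Apple, which is based in Cupertino."
entities = [
    find_span(sentence, "Tim Cook", "PERSON"),
    find_span(sentence, "Apple", "ORGANIZATION"),
    find_span(sentence, "Cupertino", "LOCATION"),
]

# Document-level annotation: one label for the whole text.
sentiment_example = {"text": "The shipping was a nightmare", "label": "Negative"}
ticket_example = {"text": "I was charged twice this month", "label": "Billing Issue"}

# Slicing the text with each span recovers the tagged phrase.
tagged = [(sentence[e["start"]:e["end"]], e["label"]) for e in entities]
```

Storing offsets rather than the phrase itself keeps the label unambiguous when the same word appears more than once in a document.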

Annotation for Audio Data

Finally, audio annotation is the key to training voice assistants, transcription services, and any other AI that relies on speech. The work usually involves turning spoken language into a format that machines can understand.

The two main types are Audio Transcription, which is simply converting speech into text, and Speaker Diarization. This second one is all about identifying who is speaking and when—a vital task if you want an accurate transcript of a meeting with multiple people talking.
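Put together, transcription and diarization typically produce a list of time-stamped segments, each with a speaker and the words spoken. Here is a small sketch of that structure. The segment fields, speaker names, and dialogue are invented for the example; real tools each have their own output format.

```python
from collections import defaultdict

# Each segment answers "who spoke, when, and what did they say?"
segments = [
    {"speaker": "speaker_1", "start": 0.0, "end": 4.5, "text": "Let's start with the roadmap."},
    {"speaker": "speaker_2", "start": 4.5, "end": 7.0, "text": "Sure, I have two updates."},
    {"speaker": "speaker_1", "start": 7.0, "end": 9.0, "text": "Go ahead."},
]


def talk_time(segments):
    """Total seconds spoken by each speaker."""
    totals = defaultdict(float)
    for seg in segments:
        totals[seg["speaker"]] += seg["end"] - seg["start"]
    return dict(totals)


def render_transcript(segments):
    """Format segments as a readable, speaker-attributed transcript."""
    return "\n".join(
        f"[{seg['start']:.1f}-{seg['end']:.1f}] {seg['speaker']}: {seg['text']}"
        for seg in segments
    )


totals = talk_time(segments)
transcript = render_transcript(segments)
```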

How to Navigate Common Labelling Challenges

On paper, launching an AI data labelling project seems simple enough. In practice, it's a minefield of potential issues. The goal is always a perfect, high-quality dataset, but the real world throws curveballs related to quality, cost, and the quirks of human judgment that can easily derail a project. Getting ahead of these common hurdles is the secret to keeping things running smoothly.

The single biggest obstacle you'll face is maintaining data quality and consistency. This gets much harder with large, distributed teams, where everyone brings their own subtle biases and interpretations. A "pedestrian" label from one annotator has to mean the exact same thing as a "pedestrian" label from another. If it doesn't, you’re basically feeding your AI a dataset full of noise.

Without a rock-solid quality control process, your "ground truth" starts to crumble. The result? An AI model that just doesn't work reliably when it matters. The time you spend upfront creating detailed guidelines and a rigorous review process will pay for itself many times over.

Managing Subjectivity and Ambiguity

What do you do when two annotators look at the exact same data point and draw different conclusions? This is the classic problem of subjectivity. It’s especially tricky in tasks that require a human touch, like figuring out the sentiment of a customer review or moderating online content. One person’s "neutral" can easily be another’s "slightly negative."

Your best weapon against this is a set of incredibly detailed annotation guidelines. Think of this document as the project's bible—the ultimate source of truth.

- Define Everything: Don’t just tell them to "label the damaged car." Get specific. What counts as damage? Is a tiny scratch included, or are you only looking for dents bigger than a fist?

- Use Visual Examples: Show, don't just tell. Nothing beats clear, visual examples of correctly and incorrectly labeled data. It’s almost always more effective than words alone.

- Keep a Q&A Log: Create a shared document where annotators can post questions about confusing edge cases. When you provide an official answer, everyone on the team can see it, ensuring that tricky decisions are handled the same way by everyone.

By systematically stripping out the ambiguity, you empower your team to make consistent, confident decisions, which is the foundation of trustworthy AI data labelling.

Balancing Cost, Time, and Scale

Let’s be honest: data labelling can get expensive, fast. The cost of a skilled workforce, coupled with the sheer time required to label tens of thousands—or even millions—of data points can quickly spiral out of control. It often feels like you're stuck in a tug-of-war between speed, budget, and quality.

Managing a labelling project is a constant balancing act. Cutting corners on quality to save time and money almost always costs more in the long run when you have to retrain a poorly performing model.

Going from a small pilot to a massive, production-level operation brings its own set of headaches. A workflow that’s perfect for a team of five can completely fall apart with a team of fifty. This is where having the right tools and a scalable process becomes non-negotiable.

For many organizations, the most practical solution is to work with a specialized data labeling partner like Zilo AI. This approach lets you tap into a pre-vetted workforce and proven quality systems without the immense overhead of building it all from scratch.

The Future of Data Labeling and Emerging Trends

If you think AI data labeling is a static field, think again. The whole discipline is in constant motion, shaped by new tech and smarter approaches. The slow, manual annotation work that once defined the industry is making way for a future where humans and machines work hand-in-hand. Honestly, this evolution is the only way to keep up with the world's insatiable appetite for high-quality training data.

A huge part of this shift is the move toward AI-assisted labeling. Think of it as a partnership. An AI model takes a first crack at annotating the data, and then a human expert steps in to review, correct, and finalize the work. This "human-in-the-loop" process isn't just a small improvement; it's a massive accelerator, letting teams power through enormous datasets that would have been impossible before. The machine does the heavy lifting, while the human provides the nuanced oversight and final sign-off.

The Rise of Synthetic Data

Another game-changer making waves is synthetic data generation. This is exactly what it sounds like: using algorithms to create brand-new, perfectly labeled data from scratch. Instead of spending months trying to capture real-world photos of a rare product defect, developers can now generate thousands of flawless, photorealistic examples on a computer.

This solves two major headaches at once:

- It Fills Critical Data Gaps: You can train models on dangerous or uncommon scenarios—like near-miss car accidents for autonomous vehicles—that are impractical or unethical to capture in real life.

- It Safeguards Privacy: Companies can build powerful models without ever using sensitive personal data, neatly sidestepping a minefield of privacy regulations and ethical concerns.

The point of synthetic data isn't to completely replace real-world data, but to supercharge it. It's a controlled and scalable way to build diverse datasets that are simply too difficult or expensive to gather otherwise.

Having the ability to create targeted data is fundamental to building better AI, and it's a vital skill for anyone committed to true data-driven decision making.

Reinforcement Learning and the Human Touch

Perhaps the most profound trend is how Reinforcement Learning from Human Feedback (RLHF) has taken center stage. This is the secret sauce behind today's most advanced large language models (LLMs). With RLHF, skilled human annotators do more than just apply labels; they provide detailed feedback, ranking different AI responses based on their quality, helpfulness, and safety.
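To give a feel for what that ranking feedback looks like as data, here is a small sketch that turns one annotator's ranking into pairwise "chosen vs. rejected" records. Pairwise preferences are one common way ranked feedback gets used in training, but treat that as an assumption here, along with the function name `ranking_to_pairs`, the field names, and the sample prompt.

```python
from itertools import combinations


def ranking_to_pairs(prompt, ranked_responses):
    """Expand a best-first ranking into pairwise preference records.

    Every response is preferred over each response ranked below it.
    """
    return [
        {"prompt": prompt, "chosen": better, "rejected": worse}
        for better, worse in combinations(ranked_responses, 2)
    ]


# Hypothetical annotator ranking, best response first.
prompt = "How do I reset my password?"
ranked = [
    "Go to Settings, choose Security, then select Reset Password.",
    "You can reset it somewhere in the settings.",
    "I don't know.",
]
pairs = ranking_to_pairs(prompt, ranked)
```

A ranking of three responses yields three pairs, so a single careful judgment from an annotator produces several training signals.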

This has created an explosion in demand for human expertise. For example, the need for detailed, multi-frame video annotation for self-driving cars and advanced AI surveillance is driving a staggering 32% compound annual growth rate in that sector alone.

This trend, along with the rise of RLHF, shows that the future of AI data labeling isn't about replacing people. It's about elevating their role. Humans are becoming the essential teachers, guides, and quality guardians for the next generation of intelligent systems. You can explore more of these AI data labeling market trends to see just how integral the human touch has become.

Got Questions About AI Data Labeling? We’ve Got Answers.

As you get your hands dirty with AI, you’re bound to have some questions. It’s only natural. This section is designed to give you clear, no-nonsense answers to the most common things people ask about data labeling—from the lingo to the tools and even career paths.

What’s the Real Difference Between Data Labeling and Data Annotation?

Honestly, in the real world, there isn't one. The terms data labeling and data annotation are used interchangeably across the AI industry. Both describe the same core job: adding meaningful tags to raw data so a machine learning model can make sense of it.

You might find a few academic purists who argue that "annotation" is for more complex tasks, like drawing fiddly polygons on an image. But for all practical purposes, if you hear someone talking about one, they mean the other. It’s the same process.

How Do I Pick the Right Data Labeling Tool?

Choosing the right tool is a big deal, and it all boils down to your specific project needs. There’s no magic "best" tool out there. The first step is to sit down and map out exactly what you need.

Think about these key questions:

- What kind of data are you working with? Images? Video? Audio? Text? Tools are often built for specific data types.

- How complex is the labeling? Do you just need simple bounding boxes, or are you getting into detailed semantic segmentation and tracking relationships between objects?

- Who is doing the work? Is it just you, or a large team that needs collaboration features, project management, and built-in quality checks?

- What’s your budget? Are you looking for something free and open-source, or do you need the robust support and features of a paid, enterprise-grade platform?

For a small test run or a simple project, an open-source tool can be perfect. But if you're scaling up and need tight quality control and management oversight, investing in a comprehensive platform usually pays off. My advice? Always run a small pilot with a few top contenders before you go all in.

Can I Actually Get a Job in Data Labeling Without a Tech Degree?

Yes, absolutely. A career in AI data labeling is one of the most accessible entry points into the AI world. Most entry-level roles don't require a computer science degree at all. What they do require are certain personality traits.

What really matters is a sharp eye for detail, a ton of patience, and the discipline to follow intricate guidelines without cutting corners. I’ve found these skills are far more valuable than a formal technical education for this line of work.

Most companies provide thorough, on-the-job training for each new project, so you’ll learn what you need to know. Starting as a data labeler is a fantastic way to get your foot in the door of the AI industry. From there, you can often move into quality assurance, team leadership, project management, or even transition into more technical ML roles down the line.

At Zilo AI, we provide the expert workforce and managed services you need to produce high-quality, accurately labeled datasets at scale. Stop worrying about quality control and start building better models. Learn how we can accelerate your AI projects.