At its core, AI training data is the raw material—images, text, audio, you name it—that we use to teach a machine learning model how to think. It's the information the model studies to learn how to make predictions or decisions on its own.

You can have the most sophisticated algorithm in the world, but if the data you feed it is junk, the results will be junk. Data quality is the single most important factor for success. Think of it like a student's curriculum; the quality of the books and lessons determines how well they learn the subject.

Why AI Training Data Is Your Most Critical Asset

Let's imagine you're training an AI for a self-driving car. What if you only showed it pictures of perfectly clear, empty highways on a sunny day? It might get really good at that one specific task, but it would be completely lost—and frankly, dangerous—the second it encounters city traffic, a rainstorm, or a deer at dusk.

This simple example makes the point: AI training data isn't just one piece of the puzzle. It's the foundation of any machine learning project. The quality, volume, and diversity of your data directly determine what your model can and can't do.

If you train a model on a narrow or biased dataset, the AI will inherit those exact same flaws. This leads to frustratingly poor performance and decisions you just can't trust. It all comes back to that old computer science mantra: "garbage in, garbage out."

The Fuel for Intelligent Systems

An algorithm is just a set of rules on paper. Data is what brings it to life, giving it the context and "experience" it needs to understand the real world. By churning through thousands, or even millions, of labeled examples, the model starts to spot patterns and make connections all on its own.

This is a fundamental shift from traditional software development. Instead of a programmer writing explicit "if-then" rules for every possible scenario, the logic is learned directly from the data itself.
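To make that contrast concrete, here is a minimal sketch rather than a real spam filter. It assumes a single made-up feature (the number of exclamation marks in an email) and a handful of invented labeled examples; `rule_based_is_spam` and `learn_threshold` are hypothetical names used only for illustration.

```python
def rule_based_is_spam(exclamations: int) -> bool:
    """Traditional approach: a programmer hard-codes the cutoff."""
    return exclamations > 3  # hand-picked number, never checked against data


def learn_threshold(examples: list[tuple[int, bool]]) -> int:
    """Learned approach: pick the cutoff that makes the fewest mistakes
    on the labeled examples. Each example is (exclamations, is_spam)."""
    def errors(cutoff: int) -> int:
        return sum((count > cutoff) != is_spam for count, is_spam in examples)

    # Only values that actually occur in the data need to be tried.
    candidates = sorted({count for count, _ in examples})
    return min(candidates, key=errors)


# Invented labeled data: (exclamation marks, is_spam)
labeled_emails = [(0, False), (1, False), (2, False), (5, True), (6, True), (7, True)]
learned_cutoff = learn_threshold(labeled_emails)
print(f"Learned rule: spam if exclamations > {learned_cutoff}")
```

The hand-written rule and the learned rule have the same shape. The difference is where the number comes from: change the labeled examples and the learned cutoff changes with them, with no programmer editing the code.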

This hunger for data has exploded. According to the AI Index Report from Stanford HAI, the compute used to train notable models doubles roughly every five months, while the datasets used to train large language models double in size roughly every eight months.
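Doubling periods are hard to picture, so it can help to convert them into a yearly multiplier. The snippet below is plain arithmetic applied to the five-month compute figure quoted above; `annual_growth_factor` is a hypothetical helper name, not something from the report.

```python
def annual_growth_factor(factor: float, every_months: float) -> float:
    """Convert 'grows by `factor` every `every_months` months'
    into a per-year multiplier via compounding."""
    return factor ** (12 / every_months)


compute_per_year = annual_growth_factor(2, 5)
print(f"Doubling every 5 months is about {compute_per_year:.1f}x per year")
```

In other words, a five-month doubling period compounds to a bit more than a fivefold increase every year.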

From Data to Decisions

At the end of the day, the goal is to build an AI that can make accurate, reliable judgments when it encounters new information. Getting there means your data strategy can't be an afterthought; it has to be priority number one.

Putting in the work upfront to carefully collect, clean, and label your training data is what separates a powerful, effective system from a failed experiment. This focus is at the heart of making smarter business moves, which you can learn more about by exploring the principles of data-driven decision making.

The Raw Materials of AI: A Look at Different Training Data Types

Not all data is created equal. Think about the difference between a meticulously organized spreadsheet of customer sales and a raw, chaotic feed of Twitter comments. Both are packed with potential insights, but an AI can't process them in the same way. This is why we need to understand the fundamental types of AI training data.

Getting a handle on these differences is the first real step in picking the right fuel for your AI model. The data's inherent structure dictates everything that comes next—how you prepare it, how you label it, and ultimately, how you use it to build something intelligent.

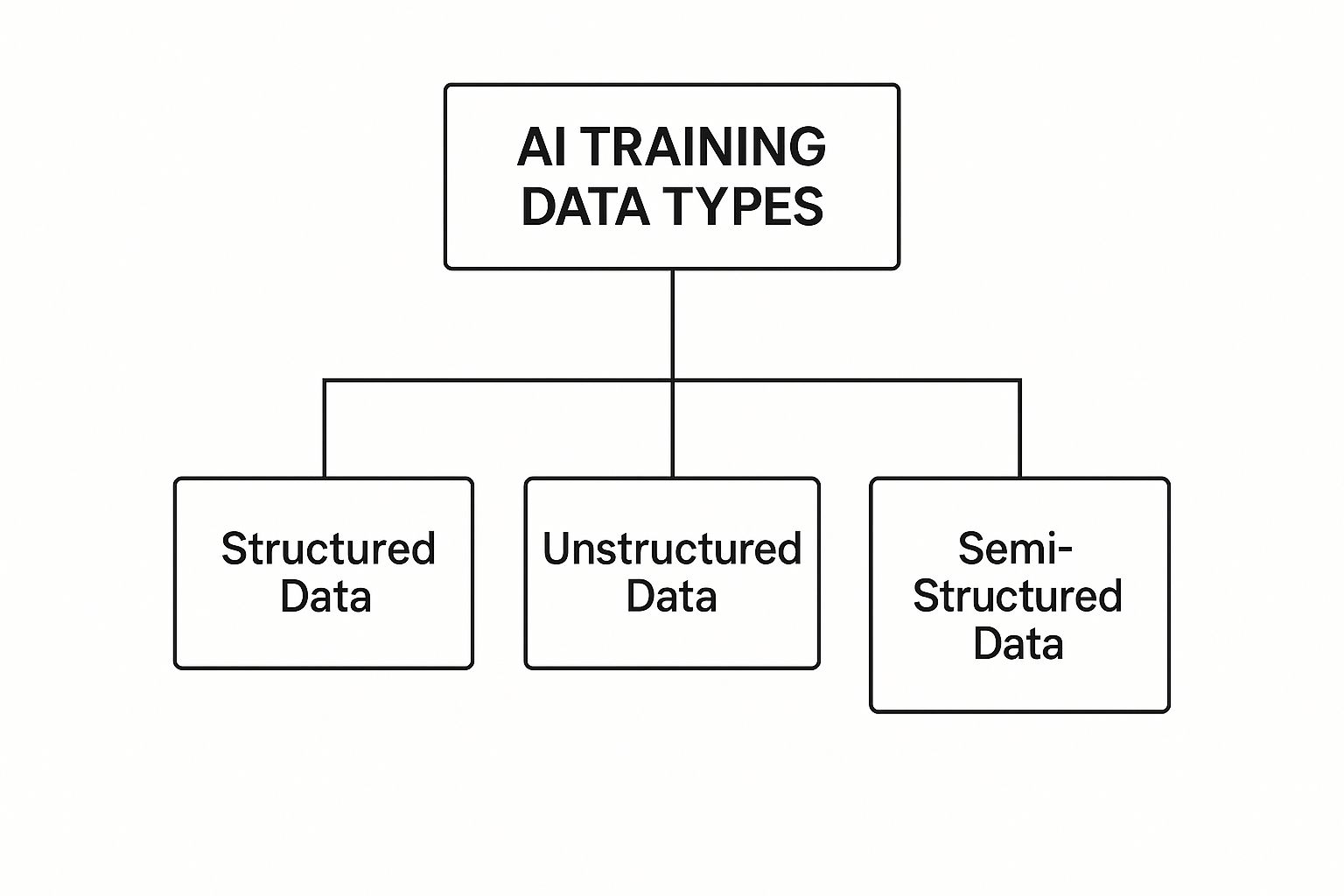

This image breaks down the main categories data typically falls into.

As you can see, data exists on a spectrum. It ranges from the highly organized to the completely free-form, and each type has its own distinct role to play in machine learning.

Structured vs. Unstructured Data

Structured data is the easy stuff. It’s what most people picture when they think of data—neat, tidy, and perfectly organized. Everything fits into a predefined format, like a SQL database or an Excel spreadsheet, where information like names, dates, and dollar amounts sits in its designated row and column. It's clean and simple for a machine to parse.

Then there's unstructured data, which is… well, everything else. It has no predefined format or organization, and by commonly cited estimates it makes up roughly 80% of the data businesses hold. This is the messy, human-generated information that fills up the digital world.

Examples of unstructured data include:

- Text: Emails, Slack messages, customer reviews, news articles.

- Rich Media: Photos from a smartphone, security camera footage, podcast audio files.

- Sensor Data: Readings from IoT devices or industrial equipment.

To make sense of unstructured data, you almost always need to add a layer of human intelligence first—a process called annotation. For an AI to learn to spot a "bicycle" in a photo, a person has to draw a box around it and apply that label. Professional image annotation services specialize in this crucial step, turning raw images into something a model can actually learn from.

Key Takeaway: Structured data is simple for machines, but the real gold is buried in unstructured data. The magic of modern AI is its ability to find meaning in all that mess.

How AI Learns: Labeled and Unlabeled Data

Beyond its format, we also classify training data by how it’s presented to the AI. This really comes down to the "teaching method" we use for the machine learning model.

Comparison of Data Labeling Approaches

The table below breaks down the three primary ways models learn, highlighting the differences in their data needs, applications, and the hurdles you might face with each.

| Approach | Data Requirement | Example Use Case | Primary Challenge |
|---|---|---|---|
| Supervised Learning | Fully labeled dataset | Email spam detection (emails labeled "spam" or "not spam") | Requires massive amounts of high-quality, human-labeled data, which can be expensive and time-consuming. |
| Unsupervised Learning | No labels required | Grouping customers into market segments based on purchasing behavior | It can be difficult to validate the accuracy of the discovered patterns without human oversight. |
| Semi-Supervised Learning | Small amount of labeled data, large amount of unlabeled data | Identifying faces in a photo collection after labeling a few key people | Finding the right balance between the labeled and unlabeled data to guide the model effectively. |

Let's dig a little deeper into what each of these approaches means in practice.

- Supervised Learning: Think of this as studying for a test with a detailed answer key. The AI is fed a dataset where every single item is labeled with the correct answer. For instance, you’d give it thousands of animal photos, each one explicitly tagged as either a "cat" or a "dog." It’s the go-to method for tasks where you have a clear, predictable outcome, like classification.

- Unsupervised Learning: This is the opposite. The AI gets a pile of data with zero labels and is told to find interesting patterns on its own. It's like dumping a giant box of Legos on the floor and asking a child to sort them into groups without any instructions. They might group them by color, by shape, or by size. This is perfect for tasks like customer segmentation, where you don't know the groupings ahead of time.

- Semi-Supervised Learning: This is the pragmatic, real-world compromise between the two. You start with a small, manageable set of labeled data and combine it with a much larger pool of unlabeled data. The model learns the core concepts from the labeled examples and then uses that initial understanding to make educated guesses about the rest. This approach can drastically cut down on the time and cost of data labeling.
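The semi-supervised idea of "learn from a few labels, then make educated guesses about the rest" can be sketched with self-training (also called pseudo-labeling), which is one common technique among several. Everything here is an assumption made for illustration: the data is one-dimensional and invented, the "model" is just the average value of each class, and `min_margin` is an arbitrary confidence cutoff.

```python
from statistics import mean


def fit_centroids(xs, ys):
    """A tiny 'model': the mean value of each labeled class."""
    groups = {}
    for x, y in zip(xs, ys):
        groups.setdefault(y, []).append(x)
    return {label: mean(values) for label, values in groups.items()}


def predict(model, x):
    """Return (label, margin). Margin is the distance to the runner-up
    centroid minus the distance to the nearest one; larger means more
    confident. Assumes at least two classes."""
    ranked = sorted(model, key=lambda label: abs(x - model[label]))
    best, runner_up = ranked[0], ranked[1]
    return best, abs(x - model[runner_up]) - abs(x - model[best])


def self_train(labeled_x, labeled_y, unlabeled_x, min_margin=2.0, max_rounds=5):
    """Repeatedly label the unlabeled points the model is confident about,
    add them to the training set, and refit."""
    xs, ys, pool = list(labeled_x), list(labeled_y), list(unlabeled_x)
    for _ in range(max_rounds):
        model = fit_centroids(xs, ys)
        still_unsure, added = [], 0
        for x in pool:
            label, margin = predict(model, x)
            if margin >= min_margin:
                xs.append(x)
                ys.append(label)
                added += 1
            else:
                still_unsure.append(x)
        pool = still_unsure
        if added == 0:  # nothing new learned; stop early
            break
    return fit_centroids(xs, ys), pool


# Two human-labeled points, seven unlabeled ones.
model, leftover = self_train(
    labeled_x=[1.0, 9.0],
    labeled_y=["low", "high"],
    unlabeled_x=[0.5, 1.5, 2.0, 8.0, 8.5, 9.5, 5.1],
)
print(model, leftover)
```

The point near the middle (5.1) never clears the confidence cutoff, so it stays unlabeled. In practice that is exactly the kind of example you would hand to a human annotator instead of letting the model guess.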

How Quality Data Drives Real Business Growth

It’s easy to think of AI training data as just a technical prerequisite, but that’s missing the bigger picture. In reality, it's the engine that drives measurable business growth. When you move past the theory, you see how well-prepared datasets give companies a serious competitive advantage. It's what separates an AI that just works from an AI that actually makes money.

Think about any e-commerce site you've used. Their recommendation engines are everything, and they're trained on mountains of customer data—what you've bought, what you've clicked on, and what you've ignored. An AI fed with clean, detailed data can make shockingly accurate suggestions, often leading to a 10-30% revenue bump for online stores.

This isn't just a retail thing, either. In manufacturing, sensor data from factory equipment is gold. It’s used to train predictive maintenance models that learn to spot trouble before it starts. By analyzing tiny shifts in temperature or vibration, the AI can flag a machine that’s about to fail, preventing a costly shutdown.

Real-World Examples of Data in Action

The real impact of great data comes alive when you see it applied to specific problems across different industries. Each use case is a story of how a carefully crafted dataset helped achieve a clear business goal.

- Financial Services: Banks are constantly fighting fraud. Their secret weapon? AI models trained on millions of transaction records. These systems learn the subtle fingerprints of illegal activity and can flag a suspicious charge in milliseconds, protecting both the bank and its customers from huge losses.

- Healthcare: In medical imaging, AI is a powerful ally for doctors. Models are trained on vast libraries of expertly annotated X-rays, CT scans, and MRIs. This allows the AI to help radiologists spot tumors or other anomalies faster and more accurately, which can lead to earlier diagnoses and dramatically better patient outcomes.

To see how this works with unstructured data, look at the field of conversation intelligence, where AI helps businesses analyze customer calls and chats to uncover powerful insights.

The Financial Impact of Data-Driven AI

The incredible growth of AI is tied directly to the value of high-quality training data. The global AI market, valued at around $391 billion, is expected to grow fivefold in the next five years. That explosion is happening because companies are relying on massive, diverse datasets to build models that power everything from personalization to automation.

A classic example is Netflix, which reportedly creates about $1 billion in value each year from its recommendation engine alone. That system is constantly learning from huge amounts of viewer data to keep people watching, and more importantly, subscribed.

The message here is clear. Investing in high-quality datasets isn't just an expense; it’s a direct investment in growth, efficiency, and staying ahead of the competition. The companies winning with AI are the ones that first learned how to win with their data.

Where to Find and How to Prepare Your Data

So, you’re sold on the need for high-quality data. The big question now is, where do you actually find it? Getting your hands on the right raw materials for an AI project is a major strategic decision, one that hinges on your budget, timeline, and what you’re trying to build.

There’s no magic bullet or a single "best" source for AI training data. Instead, most teams go down one of a few common paths, and each one comes with its own trade-offs. The route you take will have a huge impact on your model's entire development journey.

Exploring Your Data Sourcing Options

When it comes to acquiring data, you generally have three main options: use public datasets, buy them from a vendor, or build your own from the ground up.

- Public Open-Source Datasets: These are free collections of data, often curated by universities or big tech companies. They’re a fantastic starting point for research or a proof-of-concept, giving you access to huge amounts of information at no cost. The catch? They might be too generic for your specific business problem.

- Commercial Data Vendors: These are companies that specialize in gathering, cleaning, and selling ready-to-use datasets for specific industries, like finance or healthcare. This route can save you a ton of time and usually comes with a quality guarantee, but it’s a direct hit to your budget.

- Proprietary Data Creation: This is the most hands-on approach. You generate a completely unique dataset by collecting information directly from your own users or internal systems. It’s a lot of work, but it gives you a massive competitive advantage: nobody else has your data.

Key Insight: While creating proprietary data is the toughest path, it’s often the secret to building a truly standout AI model. Your unique data captures the nuances of your business, giving your model an edge that’s nearly impossible for competitors to copy.

The Critical Step of Data Annotation

Getting the data is only half the battle. For supervised learning models, which are the most common type, raw data is essentially meaningless until it’s labeled. This process is called data annotation. It’s the meticulous, human-driven work of adding the context that a machine needs to make sense of it all.

Think of it like teaching a toddler to recognize animals. You don't just flash a picture book at them; you point to the dog and say, "dog." Data annotation is that same fundamental act of pointing and naming, just for a machine. A person has to go through the data, piece by piece, and attach the right labels.

For any AI startup, nailing this process is non-negotiable. You can dig deeper into why data annotation is critical for AI startups to see just how much it influences performance.

Some common annotation methods include:

- Bounding Boxes: Drawing a simple box around an object in an image to teach a model where things are.

- Semantic Segmentation: Coloring in every single pixel of an image with a specific category (e.g., all pixels that are "road" get one color, all "trees" another).

- Text Classification: Assigning a tag to a chunk of text, like labeling a customer review as "positive" or "negative."

This human-in-the-loop effort is what turns a mountain of raw information into structured, intelligent AI training data. It’s the true foundation that allows a model to learn.
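To give a feel for what these annotations look like once they are stored, here is a rough sketch of the three methods listed above as plain Python data. The field names, the pixel coordinates, and the `fits_inside` check are all assumptions for illustration; real annotation tools each define their own formats.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    label: str
    x: int       # left edge, in pixels
    y: int       # top edge, in pixels
    width: int
    height: int

    def fits_inside(self, image_width: int, image_height: int) -> bool:
        """Basic sanity check: the box must lie fully within the image."""
        return (
            self.x >= 0 and self.y >= 0
            and self.width > 0 and self.height > 0
            and self.x + self.width <= image_width
            and self.y + self.height <= image_height
        )


# Bounding box: "there is a bicycle in this region of the photo".
bike = BoundingBox(label="bicycle", x=40, y=60, width=120, height=80)

# Semantic segmentation: one class id per pixel (a tiny 3x4 "image").
CLASS_NAMES = {0: "road", 1: "tree"}
mask = [
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
road_pixels = sum(row.count(0) for row in mask)

# Text classification: one tag per chunk of text.
review = {"text": "Arrived on time and works great.", "label": "positive"}

print(bike.fits_inside(640, 480), road_pixels, review["label"])
```

Even a check as simple as `fits_inside` is worth running on every annotation, because a box that spills outside its image is almost always a labeling mistake.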

The "Garbage In, Garbage Out" Problem: Why Your AI Is Only as Good as Its Data

There's an old saying in computer science: "garbage in, garbage out." It’s a simple idea, but it’s never been more critical than in the age of AI. Think of an AI model as a student—it learns exactly what you teach it. If you hand it flawed, biased, or just plain messy information, you can't expect it to make smart, reliable decisions.

This single principle is the biggest hurdle for any AI project. You can have the most sophisticated algorithm in the world, but if its "educational material"—the AI training data—is poor, the entire system will fail. The only way to build a trustworthy AI is to be militant about data quality from the very beginning.

What's Really Hiding in Your Data?

Bad data isn't always easy to spot. It often lurks just beneath the surface, quietly poisoning your model and undermining its ability to perform in the real world. Recognizing these hidden dangers is the first and most important step toward building an AI you can actually count on.

Here are three of the most common culprits you need to watch out for:

- Hidden Biases: Data often carries the ghosts of past prejudices. For instance, if you train a hiring AI on decades of company hiring records, it might learn to unfairly penalize qualified candidates from certain backgrounds, simply because that's what the historical data reflects. The AI doesn't know it's being discriminatory; it's just repeating the patterns it was shown.

- Noisy Data: This is just a catch-all for messy, incorrect information. Think of typos, mislabeled images, or completely irrelevant details. Imagine trying to teach an AI to recognize fruit, but half the pictures of "apples" are actually labeled "oranges." The model will just learn to be confused.

- Lack of Diversity: An AI trained on a narrow, uniform dataset is like a person who has never left their hometown: it will be completely lost when it encounters anything new. A voice assistant trained only on American English will stumble over different accents, and a self-driving car trained only in sunny weather will be a hazard in the snow.

The concept is straightforward: your AI can’t learn what it has never seen, and it will learn all the wrong lessons from bad examples. This is why data validation and cleaning aren't just boxes to check; they are foundational to the entire process.
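Two of these problems can be caught with very simple checks before any training happens. The sketch below is a starting point, not a complete validation pipeline: it assumes your labels are available as `(item_id, label)` pairs, and `audit_labels` is a hypothetical helper name. It flags items that carry contradictory labels (noise) and reports how the classes are balanced (a rough proxy for diversity).

```python
from collections import Counter, defaultdict


def audit_labels(records):
    """records: list of (item_id, label) pairs, possibly from several annotators.
    Returns (conflicts, class_share)."""
    labels_by_item = defaultdict(set)
    for item_id, label in records:
        labels_by_item[item_id].add(label)

    # Noisy data: the same item carries contradictory labels.
    conflicts = {
        item: labels for item, labels in labels_by_item.items() if len(labels) > 1
    }

    # Lack of diversity: check whether one class dominates the dataset.
    counts = Counter(label for _, label in records)
    class_share = {label: n / len(records) for label, n in counts.items()}
    return conflicts, class_share


records = [
    ("img_001", "apple"), ("img_001", "orange"),  # annotators disagree
    ("img_002", "apple"), ("img_003", "apple"),
    ("img_004", "apple"), ("img_005", "orange"),
]
conflicts, class_share = audit_labels(records)
print("Conflicting items:", conflicts)
print("Class share:", class_share)
```

Hidden bias is harder to detect automatically, since the labels can be perfectly consistent and still encode an unfair pattern. That one usually needs a person who understands where the data came from.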

Building a Strong Defense with Quality Control

To break the "garbage in, garbage out" cycle, you need a solid plan for maintaining data quality. This isn't a one-and-done task; it's an ongoing commitment that starts on day one.

One of the most effective strategies is to implement a human-in-the-loop (HITL) system. This approach brings human expertise into the machine's workflow. The AI can do the heavy lifting—like performing the initial data labeling—but a human expert steps in to review its work, fix errors, and handle the tricky, ambiguous cases that stump the algorithm. This creates a powerful feedback loop where the AI gets smarter and more accurate under expert supervision.
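One simple way to wire up that feedback loop is to route work by model confidence. The sketch below assumes the model reports a confidence score between 0 and 1 alongside each label; the 0.9 cutoff, the function names, and the document examples are all invented for illustration.

```python
def route_predictions(predictions, threshold=0.9):
    """predictions: list of (item_id, label, confidence).
    Confident labels are accepted automatically; the rest go to a human."""
    auto_accepted, needs_review = [], []
    for item_id, label, confidence in predictions:
        target = auto_accepted if confidence >= threshold else needs_review
        target.append((item_id, label))
    return auto_accepted, needs_review


def apply_review(needs_review, corrections):
    """A reviewer confirms or overrides each queued label.
    corrections maps item_id -> corrected label (missing means confirmed)."""
    return [(item_id, corrections.get(item_id, label)) for item_id, label in needs_review]


model_output = [
    ("doc_1", "invoice", 0.98),
    ("doc_2", "receipt", 0.55),  # low confidence: send to a person
    ("doc_3", "invoice", 0.91),
]
accepted, queue = route_predictions(model_output)
reviewed = apply_review(queue, corrections={"doc_2": "invoice"})
training_batch = accepted + reviewed  # fed back into the next training round
print(training_batch)
```

Where you set the threshold is a trade-off: a higher cutoff sends more items to human reviewers, which costs more but lets fewer model mistakes slip into the training set.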

This dedication to quality isn't just a technical detail; it delivers real business results. For instance, companies are seeing huge gains from AI built on well-curated data. AI-powered tools have been shown to slash training video production time by a staggering 62%. In fact, 42% of Learning & Development managers now rely on AI-assisted production, which has helped boost course completion rates by 57%. You can see the full story in these statistics on AI's impact on business training.

The "garbage in, garbage out" principle also applies after the model is built. When you interact with it, applying the best practices for prompt engineering is essential for getting the high-quality outputs you need. At every stage, high standards for your inputs are what guarantee your AI performs accurately, reliably, and responsibly.

Got Questions About AI Training Data? We’ve Got Answers.

As you start working with AI, you’ll quickly find that the real work begins and ends with data. It’s the foundation of everything. It's also where most of the tricky, practical questions pop up. Let's tackle some of the most common ones I hear from teams just getting started.

Think of this as your field guide for the most frequent data hurdles. We'll clear up the confusion so you can move forward with confidence and build AI that actually works in the real world.

How Much Data Do I Really Need to Train an AI?

This is the million-dollar question, and the honest, non-technical answer is: it depends entirely on what you’re trying to teach the AI. There's no magic number.

A simple model, say, one that sorts customer emails into "urgent" and "not urgent," might get pretty good with just a few thousand examples. But for a self-driving car that has to recognize everything from a pedestrian to a plastic bag blowing across the road in a split second? You're talking about millions, or even billions, of data points.

The best approach is to start small. Build a proof-of-concept with a modest dataset to see if your idea has legs. If it works, you can scale up from there. The goal isn't just volume; it's providing enough variety for the model to learn the patterns, not just memorize the specific examples you fed it.
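A practical way to tell learning from memorizing, even in a small proof-of-concept, is to hold back some examples the model never sees during training and score it on those. Here is a minimal sketch using only the standard library; the 20% hold-out fraction and the made-up email data are assumptions, and `holdout_split` is a hypothetical helper.

```python
import random


def holdout_split(examples, holdout_fraction=0.2, seed=0):
    """Shuffle a copy of the data and set aside a slice the model never trains on."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    n_holdout = max(1, int(len(shuffled) * holdout_fraction))
    return shuffled[n_holdout:], shuffled[:n_holdout]


emails = [(f"email {i}", "urgent" if i % 4 == 0 else "not urgent") for i in range(50)]
train, holdout = holdout_split(emails)
print(len(train), "training examples,", len(holdout), "held out")
```

If a model scores well on `train` but poorly on `holdout`, it has memorized its examples, and adding more varied data is likely to help more than adding more of the same.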

Should I Buy a Dataset or Build My Own?

This is a huge strategic decision, and there are good arguments for both sides. It really boils down to a trade-off between speed, cost, and competitive advantage.

- Buying Data: This is your fast track. You can get clean, pre-labeled datasets from commercial vendors and get a model up and running quickly. The catch? It costs money, and more importantly, it might not match your specific problem. It's like buying a suit off the rack: it's fast, but it isn't tailored to you.

- Creating Your Own Data: This path takes more time and resources, but the payoff can be massive. When you collect and label your own data, you create something no one else has. This proprietary dataset captures the unique details of your business and customers, giving you a serious edge that’s incredibly hard for competitors to copy.

Many of the most successful AI projects I've seen do both. They'll buy a dataset to get a baseline model working, then continuously feed it with their own unique data to make it smarter and more specialized over time.

Expert Insight: Think of a proprietary dataset as a core piece of your company's intellectual property. It’s not just a project expense; it's a long-term asset that appreciates in value and becomes a major competitive moat.

What is Data Drift and Why Should I Care?

Okay, this is a big one. Data drift is what happens when the world changes, but your AI model doesn't. The data your model sees "in the wild" starts to look different from the AI training data it learned from, and its performance starts to tank.

Imagine a model trained to predict fashion trends using data from 2019. It would be completely useless trying to understand what people wear in a post-pandemic, work-from-home world. Fashions change, customer habits shift, economies fluctuate—the world is constantly moving.

If your model's knowledge is frozen in time, its predictions will get worse and worse. This is why you can't just "train and forget." You have to constantly monitor for data drift and retrain your model with fresh data to keep it relevant, accurate, and trustworthy.
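Monitoring for drift means comparing the data the model sees today against the data it was trained on. One widely used measure for a single numeric feature is the Population Stability Index (PSI); choosing it here is my assumption, and there are other reasonable options. The sketch below uses equal-width bins based on the training data's range, with a small `eps` to avoid dividing by zero when a bin is empty.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a training-time sample (expected) and a live sample (actual).
    0 means the binned distributions match; larger values mean more drift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to edge bins
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training_values = list(range(100))              # what the model learned from
live_values = [v + 50 for v in training_values]  # the world has shifted

print(population_stability_index(training_values, training_values))
print(population_stability_index(training_values, live_values))
```

A common rule of thumb treats a PSI above roughly 0.25 as a sign of significant drift, but that cutoff is a convention rather than a guarantee. Treat it as a starting point and tune it against how your own model's accuracy actually behaves.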
