Think of artificial intelligence training data as the curriculum an AI model studies to become "smart." It's not just a random pile of information; it's a massive, carefully selected collection of data—like images, text, or sounds—that has been painstakingly labeled. These labels teach the model what it's looking at, listening to, or reading, so it can eventually recognize patterns and make accurate predictions on its own.

The Blueprint for Building Smarter AI

The performance of any AI is a direct reflection of the data it was trained on. It all comes down to a classic principle in computing: Garbage In, Garbage Out (GIGO). If you feed an AI model bad data—information that's biased, irrelevant, or just plain wrong—you're going to get flawed and unreliable results. This isn't just a minor technical issue; it has serious real-world consequences.

Let's say a factory wants to use an AI to spot defects on its production line. If the training dataset is full of blurry, poorly lit images with incorrect labels, the system is doomed from the start. It will constantly flag perfectly good products as defective while missing the actual flaws. The result? Product recalls, wasted money, and a brand reputation in tatters.

Why Data Quality Is Non-Negotiable

Every successful AI project is built on a foundation of high-integrity data. It's not just about quantity; it’s about quality. You need a dataset that is accurate, diverse, and truly representative of the environment your AI will operate in.

Here’s what truly defines high-caliber training data:

- Accuracy: Every single label matters. If you label a picture of a cat as a "dog," you’re teaching the AI a falsehood, which chips away at its overall reliability.

- Diversity and Representation: The data has to mirror the real world. A facial recognition model trained only on images of a single demographic will fail miserably—and unfairly—when it encounters faces from other groups.

- Relevance: The data must be directly related to the task at hand. You wouldn't use stock market data to train a weather forecasting model; it's just not relevant.

- Consistency: The same rules and standards for labeling must be applied across the entire dataset. Inconsistent labeling confuses the model and leads to unpredictable behavior.

Simply put, AI training data isn't just raw material. It’s the meticulously crafted knowledge base that shapes an AI's competence, fairness, and overall value.

Investing in high-quality data is the single most important step in building an AI that works. It's what turns a simple algorithm into a powerful tool capable of making precise, valuable decisions. This commitment to curating excellent data is a cornerstone of effective, data-driven decision-making and is what separates successful AI projects from costly failures. Without this blueprint, even the most sophisticated algorithm is set up to fail before it even gets started.

A Look at the Core Types of AI Training Data

To really get a handle on how AI works, you have to start with its fuel: the data. Think of it like a chef choosing ingredients. Different recipes call for different things, and in the world of AI, developers need specific kinds of artificial intelligence training data to build models that can perform specialized tasks.

These data types aren't just theoretical. They're the digital foundation for the apps and services we rely on every day. That voice assistant on your smart speaker and the fraud alert from your bank are both powered by very specific kinds of data that have been carefully gathered and labeled.

Text Data: Giving AI a Voice

Text is everywhere, making it one of the most common and vital data types for AI. We're talking about everything from social media chatter and customer reviews to massive digital libraries. This is the stuff that teaches machines to understand and generate human language.

A sentiment analysis tool, for example, learns to pick up on happy, angry, or neutral tones by sifting through millions of product reviews that have been labeled with those emotions. It's the same principle behind large language models (LLMs), which are fed colossal amounts of text to learn how to write emails, answer complex questions, and even spit out code. You can dive deeper into this subject in our guide to natural language processing: https://ziloservices.com/blogs/the-power-of-natural-language-processing-nlp-applications-use-cases-and-impact-in-2025/

Image and Video Data: Teaching AI to See

When you want an AI to understand the visual world, you need visual data. This is the realm of computer vision, and it relies on still images and video footage to give machines a sense of sight.

Take self-driving cars. They learn to navigate safely by processing huge video datasets where every pedestrian, stop sign, and other vehicle in every frame has been meticulously labeled. In medicine, AI models are trained on medical scans to spot early signs of disease. In fact, some studies show AI can classify cancer subtypes from tissue images with 78% accuracy, a job that once fell solely to highly trained pathologists.

The concept is pretty straightforward: to get an AI to see, you have to show it the world first. That means high-quality, diverse visual data is non-negotiable for building models that can reliably identify objects or analyze a busy scene.

Video adds the crucial elements of time and motion. This allows AI to learn about actions and behaviors, which is essential for applications like security surveillance or analyzing plays in sports.

Audio Data: The Building Blocks of Voice AI

Audio data is what powers any AI system that listens and speaks. This includes everything from the voice commands you give your phone to recorded customer service calls and podcast episodes. Voice assistants like Alexa and Siri became so good at understanding you because they were trained on thousands upon thousands of hours of human speech.

For a clearer picture, consider how transcription services work. They're built on datasets of audio clips paired with accurate, human-verified transcripts. This pairing is what teaches the model to recognize different sounds, accents, and speech patterns. To see this in action, check out these practical applications of speech data.

Tabular Data: The Engine of Analytics

It might not be as flashy as images or audio, but tabular data is a true workhorse in the AI world. This is your classic structured data, organized neatly into rows and columns just like a spreadsheet. It’s the perfect format for prediction and classification tasks.

Ever wonder how your bank flags a weird transaction? Its AI was trained on a massive table of transaction histories, with every row labeled "legitimate" or "fraudulent." By finding patterns in the transaction amount, location, and timing, the AI learns to spot suspicious activity instantly.

Tabular data is also the driving force behind:

- Sales Forecasting: Using historical sales data to predict future revenue.

- Customer Churn Prediction: Analyzing user behavior to see who might be about to cancel a service.

- Loan Approval Systems: Evaluating risk by looking at an applicant's financial history.

To tie this all together, here’s a quick overview of these data types and where you’ll commonly find them being used.

AI Training Data Types and Common Applications

| Data Type | Common Formats | Example Applications |
|---|---|---|
| Text | .TXT, .CSV, .JSON, HTML | Sentiment Analysis, Chatbots, Language Translation |
| Image | .JPG, .PNG, .TIFF, DICOM | Object Recognition, Facial Recognition, Medical Imaging |
| Video | .MP4, .MOV, .AVI | Autonomous Driving, Action Recognition, Security |
| Audio | .MP3, .WAV, .FLAC | Voice Assistants, Speech-to-Text, Speaker ID |
| Tabular | .CSV, .XLSX, SQL Database | Fraud Detection, Financial Forecasting, Customer Analytics |

Ultimately, each of these data types gives an AI a unique way to learn about our world. That's why choosing and preparing the right data is the most important first step in any AI project.

Sourcing and Collecting High-Quality Data

Knowing what kind of data you need is one thing; actually getting your hands on it is where the real work begins. There's no single magic bullet for acquiring artificial intelligence training data. The right approach really hinges on your project's scope, budget, and what you’re ultimately trying to achieve.

For many teams just starting out, the journey kicks off with open-source datasets. These are publicly available collections, often put together by universities or big tech companies, and they’re a fantastic resource for getting a general-purpose AI project off the ground.

Think of something like ImageNet. It’s a legendary library of labeled images that’s been the proving ground for countless computer vision models. Using a resource like this can shave weeks or even months off your initial development timeline without costing a dime. But open-source datasets have their limits. One might be great for building a generic object detector, but it's not going to cut it if you're training a specialized model to spot rare bird species or identify unique manufacturing defects on an assembly line.

What to Do When Off-the-Shelf Data Isn't Enough

So, what happens when you hit a wall and existing datasets just don't fit your needs? This is where you have to get creative with data generation and augmentation. These are indispensable tools for filling the gaps that pre-packaged options can't.

Data augmentation is essentially a way to get more mileage out of the data you already have. You take your existing examples and tweak them slightly to create new, unique variations. It's a go-to technique in computer vision. Let’s say you have a small set of product photos. You could:

- Rotate the images by a few degrees or flip them.

- Play with the brightness, contrast, or color.

- Zoom in or crop them differently.

- Add a bit of digital "noise" to mimic real-world camera imperfections.

Each tiny change becomes a brand-new data point. It teaches your model to be more robust and recognize the product in all sorts of different conditions—all without you needing to book another expensive photoshoot.
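The tweaks listed above can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: it assumes a grayscale image stored as a plain list of pixel rows (values 0 to 255), and every function name here is hypothetical. Small-angle rotation is left out because it needs interpolation, which an imaging library would normally handle for you.

```python
import random

Image = list[list[int]]  # assumed format: rows of grayscale pixels, 0-255


def clamp(value: int) -> int:
    """Keep a pixel value inside the valid 0-255 range."""
    return max(0, min(255, value))


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def adjust_brightness(img: Image, delta: int) -> Image:
    """Brighten (positive delta) or darken (negative delta) every pixel."""
    return [[clamp(p + delta) for p in row] for row in img]


def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Cut out a height x width window starting at (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]


def add_noise(img: Image, amount: int, rng: random.Random) -> Image:
    """Shift each pixel by a random value in [-amount, amount]."""
    return [[clamp(p + rng.randint(-amount, amount)) for p in row] for row in img]


def augment(img: Image, seed: int = 0) -> list[Image]:
    """Return several variations of one source image."""
    rng = random.Random(seed)  # seeded so the variations are reproducible
    return [
        flip_horizontal(img),
        adjust_brightness(img, 30),
        crop(img, 0, 0, len(img) - 1, len(img[0]) - 1),
        add_noise(img, 10, rng),
    ]


# A tiny 3x3 stand-in for a product photo.
photo = [[0, 100, 200], [50, 150, 250], [25, 125, 225]]
variants = augment(photo)
print(len(variants), "new training examples from one photo")
```

In a real project you would apply transformations like these to full-size images, usually at random during training, so the model rarely sees the exact same picture twice.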

On the other hand, synthetic data generation is about creating completely new, artificial data from scratch. This is a game-changer when you need to train a model on scenarios that are rare, dangerous, or just impossible to capture in the real world. Imagine you're building an AI to predict equipment failure in a factory. You can't just sit around and wait for multi-million dollar machines to break. Instead, you can run simulations to generate data that perfectly mimics the sensor readings of a machine in the moments before it fails, giving your model the exact patterns it needs to learn.
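To make the equipment-failure idea concrete, here is a small sketch of a simulator. Everything in it is an assumption made for illustration: the baseline vibration level, the noise, and the "failure signature" (a steady climb over the last 20 readings) are invented numbers, not measurements from real machines. A real simulator would be built with engineers who know how the equipment actually fails.

```python
import random


def simulate_run(fails: bool, steps: int = 50, seed: int = 0) -> dict:
    """Generate one labeled series of simulated vibration readings."""
    rng = random.Random(seed)
    readings = []
    for t in range(steps):
        # Assumed healthy baseline of 1.0 plus a little sensor noise.
        value = 1.0 + rng.gauss(0, 0.05)
        if fails:
            # Assumed failure signature: vibration climbs over the last 20 steps.
            value += 0.04 * max(0, t - (steps - 20))
        readings.append(round(value, 3))
    return {"readings": readings, "label": "failure" if fails else "healthy"}


def make_dataset(n_healthy: int, n_failing: int, seed: int = 0) -> list[dict]:
    """Build a shuffled mix of healthy and failing runs."""
    rng = random.Random(seed)
    runs = [simulate_run(False, seed=rng.randrange(10**9)) for _ in range(n_healthy)]
    runs += [simulate_run(True, seed=rng.randrange(10**9)) for _ in range(n_failing)]
    rng.shuffle(runs)
    return runs


dataset = make_dataset(n_healthy=80, n_failing=20)
print(sum(run["label"] == "failure" for run in dataset), "failing runs generated")
```

Because you control the simulator, you also control the class balance: you can generate as many failure examples as the model needs, something the real factory floor will never give you on demand.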

Collecting Your Own Proprietary Data

Sometimes, there's just no substitute for rolling up your sleeves and collecting the data yourself. This is known as proprietary data collection, and it gives you ultimate control over the quality and relevance of your artificial intelligence training data. It’s definitely more resource-intensive, but for highly specific or competitive applications, it often delivers the best results.

A common method here is web scraping, where you use automated scripts to pull information from websites. A retail company, for example, might scrape competitor websites to gather pricing and product specs for market analysis. It's powerful, but you're walking a fine ethical and legal line. You absolutely must respect a website's terms of service, privacy policies, and copyright laws. Getting this wrong can land you in serious legal trouble.

At the end of the day, proprietary data collection must be built on a foundation of ethical sourcing. Always put user privacy and transparency first. If you're collecting data from users, get their explicit consent and be diligent about anonymizing any personally identifiable information (PII) to comply with regulations like GDPR and CCPA.

The hunger for high-quality, specialized training data is only growing. The global AI market is currently valued at around $391 billion and is on an explosive growth trajectory. This is being driven by the 83% of companies that now see AI as a top business priority, pushing them to invest heavily in the unique datasets that give them an edge. You can dig into more of these numbers by checking out the latest AI industry statistics.

Ultimately, your sourcing strategy—whether it’s using open data, creating your own, or a hybrid of the two—is one of the most critical decisions you'll make. It will directly shape how well your AI performs.

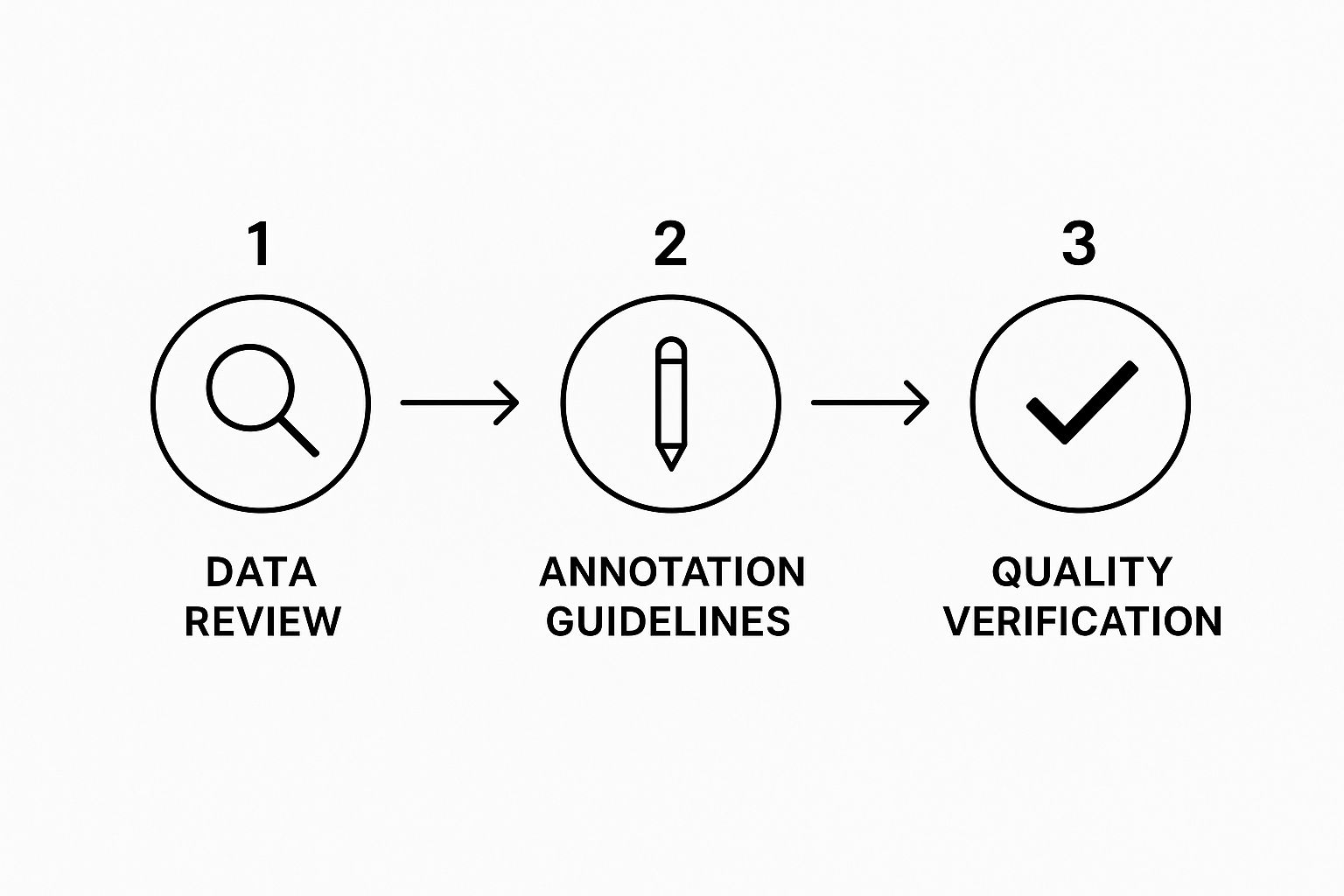

The Critical Process of Data Annotation

Raw data is full of potential, but for an AI model, it’s just noise. Think of it like a manuscript with a great story but no punctuation, paragraphs, or character labels. The plot is in there somewhere, but it's impossible to follow. That's where data annotation comes in—it's the editing process for your data.

By meticulously adding labels, tags, and other metadata to raw artificial intelligence training data, we create the structured "answer key" the model needs to learn. Without it, a computer vision model staring at a street scene has no idea that one blob of pixels is a car and another is a pedestrian. This labeling stage isn't just a box to check; the quality of your annotations will directly dictate how well your model performs in the real world.

Common Annotation Techniques in Action

The right annotation technique always depends on the problem you're trying to solve. An AI built to spot subtle anomalies in medical scans needs a much finer level of detail than one designed to sort customer support tickets into "urgent" and "non-urgent" buckets.

Here are a few of the go-to methods I see most often:

- Bounding Boxes: This is the bread and butter of object detection. Annotators draw simple rectangles around specific objects in an image. It's straightforward, efficient, and perfect for teaching a model to find and identify things.

- Semantic Segmentation: When you need a deep, contextual understanding of an entire scene, this is the technique to use. Instead of just boxing an object, you assign every single pixel in the image to a class—like "road," "building," or "sky." It’s essential for complex tasks like training autonomous vehicles.

- Named Entity Recognition (NER): Moving over to text, NER is all about finding and classifying key pieces of information. It involves tagging things like people's names, company names, locations, and dates. This is the magic behind how chatbots understand what you're asking about.

Take a look at this image. It shows a simple bounding box drawn around a bicycle, a perfect example of isolating and labeling an object for an AI model to learn from.

This single green box tells the AI exactly what and where the bicycle is. After seeing thousands of examples like this, it learns to spot bicycles in completely new images.
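It can help to see what these annotations look like as data. The sketch below shows one plausible way to store each of the three techniques above in Python. The class names, field names, coordinates, and class ids are all hypothetical; real annotation tools each define their own formats.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """A labeled rectangle in pixel coordinates (origin at top-left)."""
    label: str
    x: int       # left edge
    y: int       # top edge
    width: int
    height: int

    def fits_inside(self, image_width: int, image_height: int) -> bool:
        """Basic sanity check: the box must lie fully inside the image."""
        return (self.x >= 0 and self.y >= 0
                and self.width > 0 and self.height > 0
                and self.x + self.width <= image_width
                and self.y + self.height <= image_height)


@dataclass
class EntitySpan:
    """A labeled stretch of text, stored as character offsets."""
    label: str
    start: int
    end: int  # exclusive


def tag_entity(text: str, phrase: str, label: str) -> EntitySpan:
    """Locate the first occurrence of phrase and wrap it in a labeled span."""
    start = text.index(phrase)  # raises ValueError if the phrase is absent
    return EntitySpan(label, start, start + len(phrase))


# Bounding box: one labeled object in a hypothetical 640x480 photo.
bike = BoundingBox(label="bicycle", x=120, y=200, width=260, height=180)
print(bike.fits_inside(640, 480))

# Semantic segmentation: every pixel gets a class id (0=sky, 1=building, 2=road).
mask = [
    [0, 0, 0, 0],
    [1, 1, 0, 0],
    [2, 2, 2, 2],
]

# NER: spans point back into the original sentence.
sentence = "Ada Lovelace visited London in 1842."
spans = [
    tag_entity(sentence, "Ada Lovelace", "PERSON"),
    tag_entity(sentence, "London", "LOCATION"),
    tag_entity(sentence, "1842", "DATE"),
]
for span in spans:
    print(span.label, sentence[span.start:span.end])
```

Notice how much more information the segmentation mask carries than the bounding box: one value per pixel instead of four numbers per object. That extra detail is exactly why segmentation costs more to annotate.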

Choosing the Right Annotation Workforce

Once you've nailed down your technique, the next big question is: who’s going to do the labeling? Your choice of workforce has major implications for your project's budget, timeline, and data security.

You've really got three main paths to choose from:

- In-House Teams: Having your own employees handle annotation gives you unparalleled control and security. This is often the best choice when you're working with highly sensitive or proprietary data, like medical records or confidential financial documents.

- Managed Teams or BPOs: Partnering with a specialized third-party service gives you a great balance of quality and scale. You get access to a team of trained, professional annotators and expert project oversight without the headache of managing them yourself. Our guide on why data annotation is critical for AI startups dives deeper into why this is such a popular option.

- Crowdsourcing Platforms: For huge projects with less sensitive data, platforms like Amazon Mechanical Turk can be a game-changer. You can distribute tiny annotation tasks to a massive global workforce, which is fantastic for speed and cost. Just be prepared to implement very strict quality control measures.

No matter who does the work, a successful annotation project lives and dies by its workflow. If your guidelines are fuzzy or your quality checks are inconsistent, you're just baking errors directly into your artificial intelligence training data.
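One common quality check, especially with crowdsourcing, is to have several people label the same item and only accept a label when enough of them agree. The sketch below shows that idea with a simple majority vote. The item ids, the labels, and the 60% agreement threshold are made-up values for illustration; the right threshold depends on your task.

```python
from collections import Counter


def consolidate(votes, min_agreement=0.6):
    """Return (label, agreement); label is None when annotators disagree too much."""
    label, top_count = Counter(votes).most_common(1)[0]
    agreement = top_count / len(votes)
    return (label if agreement >= min_agreement else None), agreement


# Hypothetical crowd results: item id -> labels from three annotators.
crowd_labels = {
    "img_001": ["cat", "cat", "cat"],
    "img_002": ["cat", "dog", "cat"],
    "img_003": ["dog", "cat", "bird"],
}

accepted, needs_review = {}, []
for item_id, votes in crowd_labels.items():
    label, agreement = consolidate(votes)
    if label is None:
        needs_review.append(item_id)  # route to an expert instead of guessing
    else:
        accepted[item_id] = label

print(accepted, needs_review)
```

Items that land in the review pile are often the most valuable ones to look at, because heavy disagreement usually points to a fuzzy guideline rather than a careless annotator.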

Ultimately, the quality of your annotations directly maps to the intelligence of your final model. It's the one part of the process where you absolutely cannot afford to cut corners.

How to Ensure Data Quality and Mitigate Bias

Let's be blunt: a powerful AI model is worthless—or worse, dangerous—if it's built on a foundation of bad or biased artificial intelligence training data. This isn't just some academic problem. We've all seen the real-world consequences, from PR disasters to genuine harm. If your data foundation is cracked, everything you build on top of it is unstable.

History is littered with cautionary tales. Remember when early facial recognition systems were notoriously bad at identifying women and people of color? That wasn't a malicious algorithm. It was a direct result of training on a dataset made up overwhelmingly of images of white men. The system was biased from its very first byte of data, proving a hard lesson: your AI is only as fair as the data you feed it.

Building ethical and effective AI isn’t something that just happens. It requires a deliberate, proactive commitment to data quality and fairness right from the project's kickoff.

Conducting Thorough Data Audits

Before you even think about training, you need to put your dataset under a microscope. A data audit is more than just error checking; it's a systematic review to dig up the hidden biases, inconsistencies, and gaps that will inevitably trip up your model. Think of it as investigative work to understand the story your data is really telling.

Start by grilling your dataset with some tough questions:

- Where did it come from? What were the exact collection methods? Could the way it was gathered have introduced a slant right from the start?

- Who is represented? Does the data actually reflect the diverse world it will operate in? Look for obvious underrepresentation across gender, ethnicity, age, or even geography.

- How good are the labels? Are the annotations consistent and accurate? Did the human labelers have clear, objective guidelines, or were they left to their own interpretations?

Answering these questions early on helps you spot the weak points in your artificial intelligence training data before they become permanent, hard-to-fix flaws in your live model.
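Two of those audit questions, "who is represented?" and "how good are the labels?", lend themselves to quick automated checks. Here is a rough sketch under some loud assumptions: the records are plain dictionaries, the field names (`item_id`, `region`, `label`) are hypothetical, and the 10% threshold for flagging a group is an arbitrary starting point rather than any kind of standard.

```python
from collections import Counter


def representation_report(records, field, min_share=0.10):
    """Show each group's share of the data and flag the small ones."""
    counts = Counter(record[field] for record in records)
    total = sum(counts.values())
    return {
        value: {"count": n, "share": n / total, "flagged": n / total < min_share}
        for value, n in counts.items()
    }


def label_conflicts(records, id_field="item_id", label_field="label"):
    """Find items that were labeled more than once with different labels."""
    labels_seen = {}
    for record in records:
        labels_seen.setdefault(record[id_field], set()).add(record[label_field])
    return sorted(item for item, labels in labels_seen.items() if len(labels) > 1)


# Hypothetical dataset: 19 items from one region, one item from another
# that was labeled twice with conflicting results.
records = [{"item_id": i, "region": "north", "label": "ok"} for i in range(19)]
records += [
    {"item_id": 19, "region": "south", "label": "ok"},
    {"item_id": 19, "region": "south", "label": "defect"},
]

report = representation_report(records, "region")
for region, stats in report.items():
    print(region, stats["count"], "FLAGGED" if stats["flagged"] else "")
print("conflicting labels on items:", label_conflicts(records))
```

Checks like these won't answer the "where did it come from?" question for you. That one still takes a human digging through the collection process.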

"An area where AI has shown superhuman performance is in pattern recognition and scalability… AI has the potential to quickly review thousands of H&E images, recognize established histologic patterns, and even identify novel, clinically relevant patterns."

This highlights AI's incredible power, but it also hints at its Achilles' heel. If the patterns it learns are based on a skewed sample, it will apply those biased patterns at an alarming scale and speed.

Strategies for Mitigating Hidden Bias

Spotting bias is one thing; actively rooting it out is another. You can't just cross your fingers and hope for fairness—you have to design for it. This means making a conscious effort to balance and enrich your datasets from day one. For building truly robust models, it's essential to start understanding how to enhance data quality early.

Here are a few practical techniques I've seen work well:

- Oversampling and Undersampling: If one group is massively overrepresented, you can simply remove some of its data points at random (undersampling). On the flip side, if a group is underrepresented, you can duplicate its data points (oversampling) or, even better, use augmentation to create new, slightly varied examples to teach the model from.

- Synthetic Data Generation: Sometimes you just can't find enough real-world data for minority groups or rare events. This is where generating synthetic data comes in. It's a fantastic way to fill demographic gaps or create edge-case scenarios without touching sensitive personal information.

- Algorithmic Fairness Tools: There's a growing ecosystem of open-source tools designed to audit your datasets and models for bias. These can quantify fairness metrics and help you pinpoint exactly which features are driving discriminatory results.
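The first of these techniques is simple enough to sketch directly. The example below balances a dataset by random undersampling and by random oversampling with duplicates. It assumes rows are dictionaries with a hypothetical `group` field, and the function names are made up for this illustration; it does not cover the augmentation-based variant.

```python
import random
from collections import Counter, defaultdict


def split_by_group(rows, key):
    """Bucket rows by the value of one field."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)
    return groups


def undersample(rows, key, seed=0):
    """Randomly shrink every group to the size of the smallest one."""
    rng = random.Random(seed)
    groups = split_by_group(rows, key)
    target = min(len(members) for members in groups.values())
    balanced = []
    for members in groups.values():
        balanced.extend(rng.sample(members, target))
    return balanced


def oversample(rows, key, seed=0):
    """Grow every group to the size of the largest one by duplicating rows."""
    rng = random.Random(seed)
    groups = split_by_group(rows, key)
    target = max(len(members) for members in groups.values())
    balanced = []
    for members in groups.values():
        # Extra rows are drawn with replacement, so duplicates are expected.
        extra = rng.choices(members, k=target - len(members))
        balanced.extend(members + extra)
    return balanced


# Hypothetical imbalanced dataset: 90 rows from group A, 10 from group B.
rows = [{"id": i, "group": "A"} for i in range(90)]
rows += [{"id": 90 + i, "group": "B"} for i in range(10)]

print(Counter(row["group"] for row in undersample(rows, "group")))
print(Counter(row["group"] for row in oversample(rows, "group")))
```

The trade-off shows up in the sizes: undersampling throws away most of group A, while oversampling makes the model see the same few group B rows again and again. That repetition is why varied, augmented examples are usually the better way to fill the gap.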

In the end, this isn't a one-and-done task. Ensuring data quality and fighting bias is an ongoing responsibility. It takes constant vigilance, a diverse team that can spot blind spots others might miss, and a genuine commitment to building AI that works for everyone.

Common Questions About AI Training Data

Getting into AI development always brings up a ton of practical questions, especially around the data you need to make it all work. When you're moving from a theoretical idea to actually building something, you'll inevitably run into the same challenges everyone else does. Let's tackle some of the most common ones I hear all the time.

How Much Training Data Do I Actually Need?

This is the big one, and the honest-to-goodness answer is, "it depends." There's no single number that fits every project.

A simple model, say, one that just has to tell the difference between two types of product images, might perform great with just a few thousand examples. But if you're building something complex like a large language model, you're talking about massive amounts of text—terabytes of it, pulled from huge swaths of the internet—to grasp all the subtleties of how people talk and write.

A solid starting point is to look up benchmark datasets for similar tasks. This gives you a ballpark figure. But remember this crucial tip: quality and diversity almost always trump sheer quantity. A smaller, cleaner, and more representative dataset will usually deliver better results than a massive, messy one.

What's the Difference Between Data Annotation and Data Labeling?

People throw these terms around as if they mean the same thing, but there's a small difference that's actually pretty important.

Think of data annotation as the broad, catch-all term. It covers any process where you add metadata to raw data to help an AI understand it.

Data labeling is a type of annotation, usually a much simpler one. It’s when you assign a single, straightforward tag to an entire piece of data.

- Labeling is like this: Seeing a picture and tagging it "cat." Simple.

- Annotation is like this: Meticulously drawing boxes around every single car in a traffic photo, highlighting the exact pixels of a tumor in an MRI, or transcribing every word from a 30-minute podcast.

So, all labeling is annotation, but not all annotation is just simple labeling.

Can I Use Synthetic Data to Train My AI Model?

You bet. Synthetic data—data generated by algorithms instead of collected from the real world—is a game-changer. It's a lifesaver when real-world data is hard to come by, super sensitive, or just too expensive to gather.

For example, imagine you're building a model to detect rare but critical events, like a specific type of bank fraud or a particular kind of industrial equipment failure. You can't just wait around for those things to happen. With synthetic data, you can generate thousands of realistic examples to train your model on these crucial edge cases.

There is a catch, though. For synthetic data to work, it has to be a very convincing copy of the real world, complete with all its messiness and variation. If your generated data is too clean or perfect, you'll end up with a model that aces its tests but falls apart the second it faces real-world scenarios.

How Do I Handle Data Privacy and Compliance?

This isn't just a "nice-to-have"—it's a legal and ethical requirement. Getting this wrong can result in massive fines and destroy the trust you have with your users.

Your first move should always be to figure out which regulations apply to your project, like GDPR in Europe or CCPA in California.

Make it a non-negotiable rule to anonymize or pseudonymize any personally identifiable information (PII) before you even think about using it for training. That means scrubbing names, addresses, or anything else that could link back to a real person. You'll also need to lock down access to the data so only authorized people can ever see it. And if you're bringing in an outside company for annotation, do your homework. Vet their security certifications and data handling policies to make sure they're just as serious about compliance as you are.
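As a rough illustration of the pseudonymization step, the sketch below drops direct identifiers from a record and replaces the user id with a keyed hash from Python's standard `hmac` module. The field names, the sample record, and the key are all placeholders. Keep in mind that this is pseudonymization, not full anonymization: anyone holding the key can re-link the ids, so the key needs the same protection as the raw data, and the remaining fields may still need review before you can call the dataset safe.

```python
import hashlib
import hmac

DIRECT_IDENTIFIERS = {"name", "email", "address"}  # assumed field names


def pseudonymize(record: dict, secret_key: bytes, id_field: str = "user_id") -> dict:
    """Drop direct identifiers and swap the id for a keyed hash."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    digest = hmac.new(secret_key, str(record[id_field]).encode("utf-8"), hashlib.sha256)
    # Shortened for readability; the same id and key always give the same value.
    cleaned[id_field] = digest.hexdigest()[:16]
    return cleaned


key = b"replace-with-a-secret-from-your-vault"  # placeholder, never hard-code a real key
raw = {
    "user_id": 1042,
    "name": "Jane Doe",
    "email": "jane@example.com",
    "address": "1 Main St",
    "plan": "pro",
    "churned": False,
}

safe = pseudonymize(raw, key)
print(safe)
```

Because the same user always maps to the same pseudonym, you can still group a person's records together for training without ever exposing who they are to the people doing the training or annotation.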
