Think of AI data annotation as the process of teaching an AI system, much like you'd teach a child. It’s all about labeling raw data—like images, text, or sound clips—so that a machine learning model can actually understand what it's seeing, reading, or hearing. This isn't just a technical step; it's the fundamental process that turns a sea of unstructured information into the high-octane fuel that powers virtually every AI application we use today.

The Bedrock of Artificial Intelligence

Let's stick with the child analogy. If you wanted to teach a toddler the difference between fruits, you wouldn't just silently show them a fruit basket. You'd point to an apple and say, "This is an apple." You'd pick up a banana and say, "This is a banana." Each label you provide connects the object to a concept, helping the child build a mental map of their world.

Data annotation works the exact same way for an AI. A powerful machine learning model starts as a blank slate. It has no built-in knowledge to tell a pedestrian from a fire hydrant in a photo or to distinguish a happy customer review from a frustrated one. That's where we come in.

By carefully labeling the data, we give the AI the "ground truth" it needs to learn. This process transforms raw, messy information into a structured, easy-to-follow lesson plan for the machine. Without this crucial work, even the most brilliant algorithms are just making wild guesses. The quality of your data annotation is one of the biggest factors in how well your AI will perform in the real world.

Turning Raw Data into Actionable Insight

At its core, data annotation is all about adding context. It closes the gap between what a machine sees and what it needs to understand to perform a specific, valuable job.

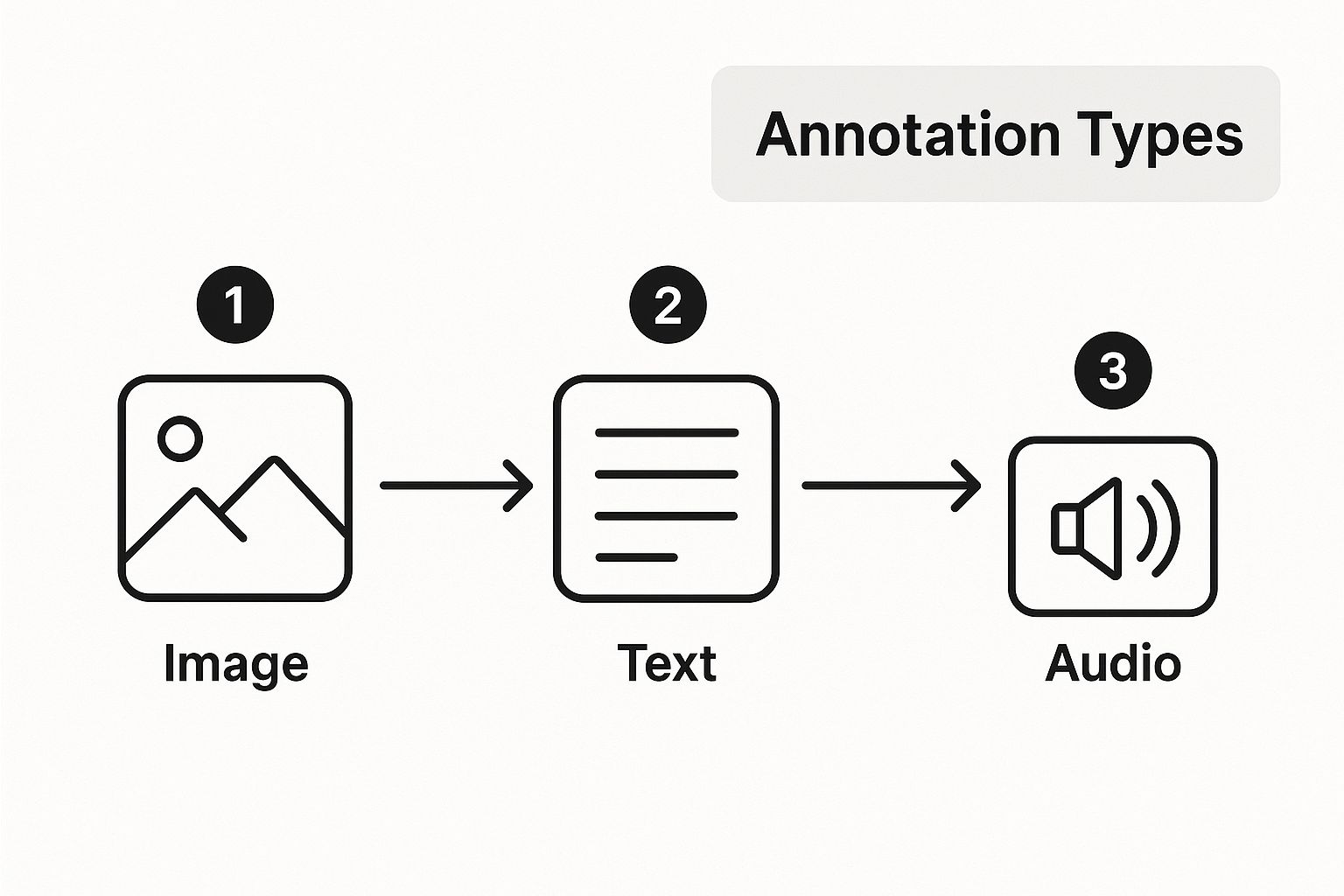

To make this tangible, let's look at a few examples of common data types that require annotation and the AI systems they help build.

Core Data Types and Their AI Applications

| Data Type | Annotation Example | AI Application |
|---|---|---|
| Images/Video | Drawing boxes around cars or outlining pedestrians in a street scene. | Autonomous vehicles, security surveillance, medical imaging analysis. |
| Text | Highlighting names, tagging a review as "positive," or classifying an email as "spam." | Chatbots, sentiment analysis tools, content moderation systems. |
| Audio | Transcribing spoken words or identifying specific sounds like a "siren" or "glass breaking." | Voice assistants (like Siri or Alexa), speech-to-text software, audio security systems. |

As you can see, annotation gives raw data purpose and enables the AI to take meaningful action.

The quality of your training data directly dictates the quality of your AI model. An algorithm trained on poorly or inconsistently annotated data will inevitably produce unreliable results, no matter how sophisticated its architecture.

This growing reliance on high-quality data has sparked a massive industry boom. The global data annotation market was valued at USD 2.11 billion and is expected to explode to USD 12.45 billion by 2033, climbing at an incredible compound annual growth rate of 20.7%. This surge is happening because industries like healthcare, autonomous driving, and e-commerce are going all-in on AI.

It’s now clearer than ever why data annotation is critical for AI startups in 2025. Building a truly effective AI isn't just about finding a clever algorithm; it's about feeding that algorithm with impeccably prepared data.

The Different Types of Data Annotation

If we think of AI as a student, then data annotation is the process of creating its textbooks. The first step is realizing the AI needs a teacher. Now, we can get into the actual teaching methods—the different types of annotation for different kinds of data.

You wouldn't use the same lesson plan to teach art history and calculus, right? In the same way, AI needs specialized techniques to learn from images, text, and sound. These methods are what turn a mountain of raw, useless information into a structured curriculum for a machine learning model. Each technique helps an AI develop a specific skill, whether that’s spotting a pedestrian in a photo or understanding the frustration in a customer review.

Image Annotation: Teaching AI to See

Image annotation is probably the easiest type to picture in your head. It’s all about labeling visual data so a computer can learn to recognize and understand what’s in an image. This is the fundamental work behind everything from self-driving cars navigating a busy street to medical AI that can spot anomalies in an X-ray.

We have a few core techniques in our toolkit for this:

- Bounding Boxes: This is the bread and butter of image annotation. Annotators draw a simple rectangle around an object. Think about an e-commerce AI—you'd draw a box around every "shoe" in a product photo. For a self-driving car, that means a box around every "car," "cyclist," and "stop sign." It’s quick and effective.

- Polygonal Segmentation: Sometimes, a simple box just isn't good enough. When an object has an irregular shape, we use polygonal segmentation to trace its exact outline, almost pixel by pixel. This level of precision is non-negotiable in fields like medical imaging, where an AI needs to distinguish a cancerous lesion from healthy tissue. Every single pixel matters.

- Keypoint Annotation: This technique is all about marking specific points of interest on an object, like creating a dot-to-dot puzzle for the AI. It's heavily used for tracking human movement, where we place key points on joints like elbows, knees, and wrists. This is how an AI learns to understand body language, analyze an athlete's form, or guide you through a workout in a virtual fitness app.

These methods complement each other to give the AI a rich understanding of the visual world. A bounding box tells the AI what something is and roughly where it is. Segmentation gives it a precise map of the object's shape and boundaries. Keypoints add the position of specific parts, such as joints.
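To make the three image techniques concrete, here is a minimal sketch of how each one might be stored as a record. The class names, field names, and pixel coordinates are illustrative assumptions, not the schema of any particular annotation tool.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class BoundingBox:
    """A rectangle around an object (hypothetical schema)."""
    label: str
    x: float       # left edge, in pixels
    y: float       # top edge, in pixels
    width: float
    height: float

    def area(self) -> float:
        return self.width * self.height


@dataclass
class Polygon:
    """An outline traced vertex by vertex around an irregular object."""
    label: str
    points: List[Tuple[float, float]]  # vertices in drawing order

    def area(self) -> float:
        # Shoelace formula for the area of a simple polygon.
        total = 0.0
        n = len(self.points)
        for i in range(n):
            x1, y1 = self.points[i]
            x2, y2 = self.points[(i + 1) % n]
            total += x1 * y2 - x2 * y1
        return abs(total) / 2.0


@dataclass
class Keypoint:
    """A single named point of interest, such as a joint."""
    name: str
    x: float
    y: float
    visible: bool = True


box = BoundingBox("cyclist", x=40, y=30, width=100, height=200)
outline = Polygon("lesion", [(0, 0), (4, 0), (4, 3), (0, 3)])
pose = [
    Keypoint("left_elbow", 85, 120),
    Keypoint("left_wrist", 90, 170, visible=False),
]
```

The box only needs four numbers, while the polygon grows with the complexity of the outline, which is one reason segmentation takes more annotator time per object.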

Text Annotation: Helping AI Understand Language

Text is everywhere, but to an AI, it’s just a meaningless string of characters. Text annotation is what gives the written word structure and context, which is absolutely essential for training Natural Language Processing (NLP) models.

Here are a couple of key methods:

- Named Entity Recognition (NER): This is like highlighting important nouns in a sentence and putting them into categories. For example, in the sentence, "Zilo AI is headquartered in New York," an annotator would tag "Zilo AI" as an Organization and "New York" as a Location. This is how chatbots and virtual assistants can pull key details out of your requests.

- Sentiment Analysis: This one is all about emotion. We label a piece of text as positive, negative, or neutral. Businesses find this incredibly powerful for sifting through thousands of customer reviews or social media comments to see what people really think. A tweet saying, "My order arrived late and the box was crushed," would get tagged as Negative.
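As a rough sketch, the two text examples above could be recorded like this. NER labels are stored as character spans, while sentiment is a single label for the whole text. The tag names `ORG` and `LOC`, the dictionary keys, and the helper `make_span` are assumptions made for illustration.

```python
ALLOWED_SENTIMENTS = {"positive", "negative", "neutral"}


def make_span(text, phrase, label):
    """Locate the first occurrence of `phrase` in `text` and return a labeled character span."""
    start = text.index(phrase)  # raises ValueError if the phrase is absent
    return {"start": start, "end": start + len(phrase), "label": label}


def make_sentiment(text, label):
    """Attach one sentiment label to a whole piece of text."""
    if label not in ALLOWED_SENTIMENTS:
        raise ValueError(f"unknown sentiment label: {label}")
    return {"text": text, "label": label}


sentence = "Zilo AI is headquartered in New York"
entities = [
    make_span(sentence, "Zilo AI", "ORG"),
    make_span(sentence, "New York", "LOC"),
]

review = make_sentiment(
    "My order arrived late and the box was crushed", "negative"
)

# Recover the tagged text from each span to check the offsets.
tagged = [(sentence[e["start"]:e["end"]], e["label"]) for e in entities]
```

Storing offsets rather than the phrase itself keeps the annotation unambiguous when the same word appears more than once in a sentence.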

Audio Annotation: Enabling AI to Listen

Finally, we have audio annotation. This involves transcribing and labeling sound files so that AI models can understand spoken language and recognize distinct sounds. This is the magic behind voice assistants, but it also powers security systems that can identify the sound of breaking glass.

There are two main approaches here:

- Audio Transcription: At its simplest, this is converting speech from an audio file into written text. Crucially, the text is time-stamped, linking each word to the exact moment it was said. This is the foundational work needed to train the speech-to-text models behind voice assistants like Siri and Alexa.

- Speaker Diarization: This technique answers the question, "Who is speaking, and when?" Annotators go through an audio file and label the different speakers—"Speaker A," "Speaker B," and so on. It's essential for creating accurate transcripts of meetings or analyzing conversations between a customer and a support agent.
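Transcription and diarization often end up in the same record: a stretch of audio with a start time, an end time, a speaker, and the words spoken. The sketch below assumes segment-level timestamps for brevity (word-level timing would use the same idea at finer granularity), and the `Segment` class and sample dialogue are made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Segment:
    start: float   # seconds from the beginning of the file
    end: float
    speaker: str   # diarization label, e.g. "Speaker A"
    text: str      # transcription of this stretch of audio


segments = [
    Segment(0.0, 2.5, "Speaker A", "Hi, I need help with my order."),
    Segment(2.5, 4.0, "Speaker B", "Sure, what is the order number?"),
    Segment(4.0, 7.0, "Speaker A", "Let me find the confirmation email."),
]


def talk_time(segments):
    """Total speaking time per speaker, in seconds."""
    totals = defaultdict(float)
    for seg in segments:
        totals[seg.speaker] += seg.end - seg.start
    return dict(totals)


def transcript(segments):
    """Render a readable transcript in time order."""
    ordered = sorted(segments, key=lambda s: s.start)
    return [f"[{s.start:.1f}s] {s.speaker}: {s.text}" for s in ordered]


totals = talk_time(segments)
lines = transcript(segments)
```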

Ultimately, picking the right annotation method comes down to what you want the AI to do. The complexity of these tasks also underscores the need for skilled human annotators, a core principle that many of the top data annotation service companies in India build their entire business model on.

The Data Annotation AI Project Lifecycle

Kicking off a data annotation project is a lot like building a high-performance engine. Every part has to be perfectly machined and assembled in the right order. Skip a step, and the whole thing fails. The journey from a messy pile of raw data to a clean, AI-ready dataset follows a structured lifecycle that’s crucial for success.

This process turns a chaotic jumble of files into a strategic asset. It’s all about ensuring precision, consistency in every label, and an output that’s perfectly tuned to train an accurate machine learning model.

Starting with Data Collection and Preparation

It all begins with gathering the raw materials. This means collecting the images, text files, or audio clips that will eventually become your training dataset. This data could come from anywhere—public databases, your own internal records, or even a custom data-gathering effort.

But you can't just throw this raw data into the annotation process. It almost always needs a good "cleaning" first. This prep work might involve deleting duplicate files, standardizing formats, or weeding out low-quality or irrelevant data. Think of it like a chef prepping ingredients before cooking—a clean start prevents a lot of headaches down the road.
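One of the cleaning steps mentioned above, removing duplicate files, can be sketched with a content hash. This example assumes exact byte-for-byte duplicates and works on in-memory bytes to stay self-contained; near-duplicates (resized or re-encoded images, for instance) would need a different technique. The function name and file names are hypothetical.

```python
import hashlib
from typing import Dict, List


def deduplicate(files: Dict[str, bytes]) -> List[str]:
    """Return the names to keep, dropping files whose content was already seen."""
    seen = set()
    kept = []
    for name, content in files.items():
        digest = hashlib.sha256(content).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(name)
    return kept


raw_files = {
    "street_001.jpg": b"image-bytes-A",
    "street_001_copy.jpg": b"image-bytes-A",  # same content, different name
    "street_002.jpg": b"image-bytes-B",
}

clean_names = deduplicate(raw_files)
```

In a real project the bytes would come from reading each file on disk, but the comparison logic stays the same.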

The image below shows how different types of data—images, text, and audio—all feed into this same project lifecycle.

It’s a clear illustration of how fundamentally different data formats are all funneled into the same annotation pipeline to power all sorts of AI models.

Crafting Unambiguous Annotation Guidelines

Once you have clean data, the next step is to write the project’s rulebook: the annotation guidelines. This is arguably the most important stage of the entire process. These guidelines are the definitive source of truth that tells annotators exactly how to label the data, leaving nothing to chance or personal interpretation.

Let's say you're annotating pedestrians for a self-driving car. The guidelines need to answer very specific questions:

- Do you label a person who is partially hidden behind a parked car?

- How do you handle someone carrying a huge umbrella that blocks most of their body?

- What's the rule for labeling reflections of people in storefront windows?

Clear instructions, packed with examples of these tricky edge cases, are the only way to get consistent labels across your entire dataset. Without them, every annotator makes slightly different judgment calls, creating "noise" that will only confuse your AI model.

Choosing the Right Annotation Approach

Next, you need to decide how the work gets done. This usually comes down to choosing the right software and the right workforce strategy. The choice of data annotation tools is a big one; these platforms are the digital workbenches for the entire project. The market for these tools was valued at USD 1.02 billion and is expected to reach USD 5.33 billion, growing at a CAGR of roughly 26.5%, which shows how central they have become. You can get a deeper look into this trend in the full data annotation tools market research.

The actual annotation can be handled in a few different ways:

- Manual Annotation: A human annotator labels every single data point by hand. It's the slowest method but often delivers the best quality, especially for complex jobs.

- AI-Assisted Annotation: A pre-trained model takes the first shot at labeling, and then human annotators review and fix its mistakes.

- Human-in-the-Loop (HITL): This is a more dynamic, cyclical approach where the AI model and human annotators are constantly teaching each other, improving both speed and accuracy over time.
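Here is a minimal sketch of the AI-assisted flow described above: a model proposes labels, and humans review and correct them. Sorting the review queue so the least confident predictions come first is an assumption about how a team might prioritize, not a rule from any specific tool, and the record fields are hypothetical.

```python
def review_queue(prelabels):
    """Order model pre-labels so the least confident ones are reviewed first."""
    return sorted(prelabels, key=lambda p: p["confidence"])


def apply_corrections(prelabels, corrections):
    """Merge human corrections over the model's labels.

    `corrections` maps an item id to the label a reviewer chose;
    items without a correction keep the model's label.
    """
    return {p["id"]: corrections.get(p["id"], p["label"]) for p in prelabels}


prelabels = [
    {"id": "img1", "label": "car", "confidence": 0.97},
    {"id": "img2", "label": "car", "confidence": 0.55},
    {"id": "img3", "label": "cyclist", "confidence": 0.80},
]

queue = review_queue(prelabels)
final_labels = apply_corrections(prelabels, {"img2": "truck"})
```

In a human-in-the-loop setup, the corrected labels would then be fed back as new training data so the model's next round of pre-labels improves.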

Implementing a Multi-Stage Quality Assurance Process

Annotation isn't a one-and-done job. After a batch of data gets labeled, it has to go through a tough quality assurance (QA) process. After all, high-quality data is the entire point, and QA is how you guarantee you get it.

A solid QA framework is the safety net that catches human error and stops ambiguous labels from poisoning your dataset. It’s what ensures the final product is clean, consistent, and ready to build a reliable AI model.

This process often has several layers of review. Some common QA methods include:

- Consensus Scoring: Several annotators label the same piece of data. If their labels match, it passes. If there’s a disagreement, a manager or senior annotator steps in to make the final call.

- Gold Sets: A pre-labeled "answer key" of data is secretly mixed into an annotator's work. How well they do on these "gold standard" items is a great way to measure their performance and see if they need more training.

- Expert Review: For highly specialized fields like medical imaging, you bring in a subject matter expert for a final check. They can spot nuanced errors that a generalist annotator would likely miss.

This constant feedback loop is vital for improving quality and making the project a success.
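The consensus rule described above can be sketched in a few lines. By default this version requires every annotator to agree, matching the description; the optional `min_agreement` parameter for a looser majority rule is an assumption added for illustration.

```python
from collections import Counter


def consensus(labels, min_agreement=1.0):
    """Return the agreed label, or None if the item should be escalated.

    `labels` holds one label per annotator for the same item.
    `min_agreement` is the share of annotators that must agree
    (1.0 means unanimous).
    """
    if not labels:
        raise ValueError("at least one label is required")
    top_label, top_count = Counter(labels).most_common(1)[0]
    if top_count / len(labels) >= min_agreement:
        return top_label
    return None  # disagreement: send to a senior annotator


unanimous = consensus(["pedestrian", "pedestrian", "pedestrian"])
disputed = consensus(["pedestrian", "cyclist", "pedestrian"])
majority = consensus(["pedestrian", "cyclist", "pedestrian"], min_agreement=0.6)
```

Items that come back as `None` are the ones worth a senior annotator's time, and they are often a hint that the guidelines need another edge-case example.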

How Data Annotation Is Making a Real-World Impact

Let's move beyond the theory. The true value of AI data annotation really clicks when you see it in action. This isn't just a technical step in a process; it's the fundamental work that powers real business results across almost every industry you can think of. By carefully labeling data, we're essentially teaching AI models to perform incredibly specific and valuable jobs that were once far beyond the reach of automation.

From helping doctors save lives to changing the very way we shop, high-quality data annotation is the essential ingredient that connects a smart algorithm to a tangible, real-world solution. Different industries, of course, lean on different annotation methods to solve their unique problems.

Healthcare: Where Precision Labeling Saves Lives

In the world of medicine, "close enough" is never good enough. AI models used in healthcare demand an extraordinary level of accuracy, which all begins with perfect annotation. This is where annotators with deep medical knowledge step in to meticulously label images, training AI systems to become powerful assistants for clinicians.

Take the challenge of finding diseases in medical scans like MRIs or CTs.

- Spotting Tumors: Using polygonal segmentation, an annotator will trace the exact border of a tumor. This teaches an AI not just to flag a potential issue, but to define its precise size and shape. For a radiologist, this means faster, more confident diagnoses.

- Analyzing Cells: In pathology, annotators use keypoint annotation to mark individual cells on a slide. This allows an AI to count certain cell types or spot abnormalities, a game-changer for grading cancer and pushing research forward.

This kind of detailed work turns a machine learning model into a reliable diagnostic partner, capable of seeing subtle patterns that might otherwise be missed.

Autonomous Vehicles: Building the Future of Mobility

Self-driving cars are one of the most ambitious AI projects out there. They have to make sense of a chaotic world and react in the blink of an eye. The safety of these vehicles comes down to one thing: the quality of the data they were trained on. That data is painstakingly created by annotating massive amounts of video and sensor feeds.

Think about it: for a self-driving car, a mislabeled pedestrian isn't just a data error—it's a potential disaster. The stakes are astronomically high, which is why world-class annotation is an absolute must for safety and success in the automotive space.

Annotators use a mix of techniques to give the car a complete picture of the road:

- 3D Bounding Boxes (Cuboids): These are placed around cars, pedestrians, and cyclists in LiDAR sensor data. It gives the AI a sense of an object's position, size, and even its orientation in 3D space.

- Semantic Segmentation: This method labels every single pixel in an image with a category like "road," "sidewalk," or "building." It gives the car a detailed, pixel-by-pixel map of its environment, which helps it work out where it's safe to drive.
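As a rough illustration of the two techniques above, a cuboid can be stored as a center, a size, and a heading angle, and a semantic segmentation label is just a grid with one class id per pixel. The field layout, units, class ids, and the tiny 3x4 "image" are all assumptions made for the example.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Cuboid:
    """A 3D box in sensor coordinates (hypothetical convention)."""
    label: str
    center: Tuple[float, float, float]  # (x, y, z) in metres
    size: Tuple[float, float, float]    # (length, width, height) in metres
    yaw: float                          # heading around the vertical axis, radians


CLASS_NAMES = {0: "road", 1: "sidewalk", 2: "building"}

# One class id per pixel; a real mask matches the image resolution.
mask = [
    [2, 2, 2, 2],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
]


def pixel_counts(mask):
    """Count how many pixels carry each class name."""
    counts = Counter(class_id for row in mask for class_id in row)
    return {CLASS_NAMES[class_id]: n for class_id, n in counts.items()}


car = Cuboid("car", center=(12.0, -1.5, 0.8), size=(4.5, 1.8, 1.5), yaw=0.1)
counts = pixel_counts(mask)
```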

Retail and E-Commerce: Crafting Smarter Shopping Experiences

In the hyper-competitive world of retail, data annotation is the secret sauce behind smarter, more personalized customer experiences. By labeling huge product catalogs and customer data, retailers are building AI systems that are completely changing how we find and buy things.

Annotation also powers the text side of retail AI through Natural Language Processing (NLP). For instance, sentiment labels on customer reviews help retailers get a clear pulse on how their products are being received.

Here are a couple of key ways it's making a difference:

- Visual Search: Ever snapped a photo of something to find a similar product online? That's powered by an AI trained on annotated images. Bounding boxes teach the model to pick out a specific handbag or pair of shoes from a cluttered photo.

- Automated Checkout: Those futuristic "grab-and-go" stores rely on cameras to track what you take. This only works because an AI has been trained on millions of annotated images of every single product, from every conceivable angle.

The following table breaks down how different sectors are putting data annotation to work to solve some of their biggest challenges.

Data Annotation Use Cases by Industry

| Industry | Annotation Type | Primary Use Case | Business Impact |
|---|---|---|---|
| Healthcare | Image Segmentation | Medical image analysis (MRI, X-ray) | Faster disease detection, improved diagnostic accuracy |
| Automotive | 3D Cuboids, Video Annotation | Training autonomous vehicles | Enhanced road safety, improved navigation systems |
| Retail | Bounding Boxes, Categorization | Visual search, inventory management | Increased sales, better customer experience |
| Agriculture | Semantic Segmentation | Crop monitoring, pest detection | Higher yields, reduced resource waste |
| Finance | Entity Recognition | Document analysis, fraud detection | Streamlined compliance, reduced financial risk |

As you can see, the impact is both broad and deep. From finance to farming, high-quality data annotation isn’t just a technical exercise—it’s a core driver of business innovation and growth. This is why we're seeing a surge of investment in AI solutions across every major industry.

Best Practices for High-Quality Annotation

Getting high-quality output from an AI data annotation project doesn't happen by accident. It's the direct result of a smart, well-executed strategy. Think of it this way: an architect can't build a stable skyscraper without precise blueprints. In the same way, an AI model can't perform reliably without impeccably annotated data.

Following a clear set of best practices is the only way to guarantee your labeled data is up to snuff. It turns a potentially chaotic labeling effort into a smooth, predictable production line for AI-ready data. The whole point is to build a system that minimizes confusion, catches mistakes early, and delivers a dataset that is clean, consistent, and perfectly aligned with your goals.

Develop Crystal-Clear Annotation Guidelines

The absolute cornerstone of any successful annotation project is a solid set of guidelines. This document becomes the single source of truth for your entire team, eliminating guesswork and personal interpretation. It's basically the project's constitution.

These guidelines need to be more than just basic instructions. They must dive into the nitty-gritty details and—most importantly—provide clear examples of edge cases. Those are the tricky, ambiguous scenarios that always pop up.

Let's say you're annotating vehicles for a self-driving car:

- A basic instruction might be: "Draw a bounding box around all cars."

- A better, edge-case guideline would be: "For cars partially blocked by a tree, draw the box around the visible part only. Don't guess the full size. If less than 25% of the car is visible, leave it unlabeled."

Without that level of detail, one annotator might label the hidden car while another ignores it. That kind of inconsistency can be fatal to your dataset.
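A guideline this specific can even be written down as a check, which is a handy way to test whether it is truly unambiguous. The sketch below encodes the 25% rule from the example; the function name, the box format, and the extra `occluded` flag are illustrative assumptions.

```python
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def annotate_car(
    visible_box: Box,
    visible_fraction: float,
    min_visible: float = 0.25,
) -> Optional[dict]:
    """Apply the occlusion guideline to one car.

    Returns a label record boxed around the visible part only,
    or None when too little of the car is visible to label.
    """
    if not 0.0 <= visible_fraction <= 1.0:
        raise ValueError("visible_fraction must be between 0 and 1")
    if visible_fraction < min_visible:
        return None  # less than 25% visible: leave unlabeled
    return {
        "label": "car",
        "box": visible_box,  # visible part only, no guessing the full size
        "occluded": visible_fraction < 1.0,
    }


partly_hidden = annotate_car((10, 20, 50, 40), visible_fraction=0.6)
mostly_hidden = annotate_car((10, 20, 12, 40), visible_fraction=0.2)
```

If a rule cannot be expressed this plainly, that is usually a sign annotators will interpret it differently.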

Implement a Multi-Layered Quality Assurance Process

Quality assurance (QA) isn't something you just tack on at the end. It's a continuous process that should be woven into every stage of the annotation lifecycle. A strong QA strategy is your safety net, making sure small errors don't snowball into huge problems that tank your AI model's performance.

A multi-layered QA process is non-negotiable. It combines automated checks, peer reviews, and expert oversight to systematically identify and correct inconsistencies, ensuring the final dataset is as close to perfect as possible.

This kind of approach usually involves a few key stages:

- Peer Review: Annotators check a small sample of each other's work. This is great for catching obvious mistakes and making sure everyone is sticking to the guidelines.

- Consensus Scoring: You have multiple people label the same piece of data. If their labels all match, it's approved. If they don't, the disagreement gets flagged for a senior annotator to resolve.

- Gold Standard Sets: A pre-labeled set of "perfect" data is secretly mixed into an annotator's workflow. How they perform on these gold standard items gives you a hard metric for their accuracy and understanding.
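Scoring an annotator against a gold standard set comes down to comparing their labels with the answer key on the hidden items only. This is a minimal sketch using plain accuracy; the item ids and the dictionary-based format are assumptions for illustration.

```python
def gold_accuracy(submitted, gold):
    """Share of gold items the annotator labeled correctly.

    `submitted` maps item id to the annotator's label for all their work.
    `gold` maps item id to the known-correct label for the hidden items.
    Returns None if the annotator has not seen any gold items yet.
    """
    scored = [item_id for item_id in gold if item_id in submitted]
    if not scored:
        return None
    correct = sum(1 for item_id in scored if submitted[item_id] == gold[item_id])
    return correct / len(scored)


gold = {"g1": "cat", "g2": "dog", "g3": "cat", "g4": "dog"}
submitted = {
    "g1": "cat",
    "g2": "dog",
    "g3": "dog",   # mistake on a gold item
    "g4": "dog",
    "x9": "cat",   # regular work item, not scored
}

accuracy = gold_accuracy(submitted, gold)
```

Tracking this number per annotator over time shows who might need a guideline refresher before errors spread through the dataset.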

A huge part of getting high-quality annotation comes down to solid data quality management, which ensures every piece of data you use to train your models is accurate and reliable.

Provide Continuous Feedback and Iteration

Data annotation isn't a task you can just set and forget. It’s a living process that gets better with feedback. You need to create a tight feedback loop between the annotators on the ground and the project managers. When a QA check flags a common mistake, don't just fix it and move on. Update the guidelines and retrain the team to prevent it from happening again.

This iterative cycle ensures that your quality gets better over time, making the whole operation more efficient. The more you learn, the more you can refine your methods—a key part of mastering data-driven decision-making in your AI development. This commitment to always getting better is what separates a decent dataset from a truly exceptional one.

How Zilo's Annotation Services Give Your AI an Edge

It’s one thing to understand what good data annotation looks like, but it's a whole different beast to actually produce it at scale. This is precisely where having the right partner can completely change the game, turning your ambitious AI goals into reality with the high-caliber data required to get there. At Zilo AI, we bring together smart technology and seasoned human experts to tackle the core challenges of data annotation AI projects.

Our entire process is built to break through the common bottlenecks that stall AI development. We make sure your models are trained on a bedrock of accurate, consistent data, freeing up your team to focus on innovation instead of getting bogged down in data prep.

A Smarter, Hybrid Approach to Annotation

We’ve found the sweet spot by blending machine speed with human intuition. Our platform uses AI-powered tools to do the first pass of heavy lifting, knocking out the repetitive labeling tasks that take up so much time. This frees up our human annotators to apply their skills where it matters most—on the tricky, nuanced judgments that machines just can't handle.

This human-in-the-loop (HITL) system is the core of what we do, and it delivers some serious benefits:

- Faster Turnaround: By letting AI handle the easy stuff, we deliver meticulously labeled datasets much faster than a purely manual team ever could.

- Rock-Solid Accuracy: Our trained experts review, fine-tune, and validate every single AI-generated label. This ensures the final dataset meets the highest quality standards.

- Smarter Spending: This two-pronged approach makes the most of every resource, offering a more budget-friendly path to large-scale annotation without ever cutting corners on quality.

Zilo's blend of AI assistance and human oversight creates a powerful feedback cycle. The AI gets smarter with every human correction, and our annotators become more efficient. The result? Your AI projects get to the finish line faster.

Our managed teams are ready for anything you can throw at them, from highly specific medical imaging analysis to complex sensor data for self-driving cars. With a strict quality control process built into every step, Zilo AI delivers the dependable, high-quality fuel your most important AI initiatives need to succeed.

Frequently Asked Questions About Data Annotation

Even after you get the big picture, a few practical questions always pop up when you're ready to start an AI data annotation project. Let's tackle some of the most common points of confusion to clear the air.

Getting these details straight is often the final step before you can confidently move from planning to execution.

Data Annotation vs. Data Labeling

You'll hear people use these terms interchangeably, but there's a small difference that actually matters. The easiest way to think about it is that data labeling is one specific action under the much bigger umbrella of data annotation.

Labeling is usually simple. It's about assigning a single class to a whole piece of data, like tagging an image "cat" or "dog."

Data annotation covers all the more complex, detailed stuff. This is where you're drawing precise bounding boxes around cars in a video, outlining a tumor with polygonal segmentation, or identifying verbs and nouns in a block of text. So, while all labeling is a form of annotation, not all annotation is simple labeling.

How Do You Guarantee High-Quality Annotated Data?

Great data doesn't happen by accident. It's the result of a deliberate, multi-layered strategy that's all about catching errors and maintaining consistency. There’s no single magic bullet; instead, the best workflows weave several tactics together.

Here’s what that looks like in practice:

- A Rock-Solid Rulebook: You need incredibly detailed guidelines with plenty of examples, especially for those tricky edge cases that are bound to appear.

- Serious Quality Assurance (QA): This isn't just a quick once-over. It might mean multiple annotators have to agree on a label (consensus scoring) or a senior expert gives every piece of data a final review.

- Creating a "Gold Standard" Set: Every so often, you test your team with a pre-labeled batch of perfect data. This is a great way to measure their accuracy and spot anyone who might need a bit more training.

- Keeping the Conversation Going: You have to build a feedback loop. This lets your team ask questions and helps you refine the guidelines when new challenges pop up.

Can Data Annotation Be Fully Automated?

The short answer is no, not really. At least, not for projects that need real accuracy and a deep understanding of context. AI is getting pretty good at handling simple, repetitive tasks, but it still fumbles when it comes to the kind of nuance and critical thinking a human expert brings to the table.

The gold standard today is a human-in-the-loop (HITL) system. This is a hybrid approach where an AI does the first pass, handling the bulk of the work, and then human experts step in to review, correct, and fine-tune the machine’s output.

This model gives you the best of both worlds: the incredible speed of a machine combined with the sharp, reliable judgment of a human. It makes the entire data annotation workflow way more efficient and trustworthy than a purely manual or a fully automated process could ever be on its own.

Ready to power your AI with impeccably annotated data? Zilo AI combines advanced technology with human expertise to deliver the high-quality, scalable data annotation services your projects demand. Visit us to learn how we can accelerate your AI development.