At its heart, a data annotation platform is the software you use to teach an AI. It’s where you take raw, unlabeled information—think mountains of images, audio clips, or customer reviews—and systematically label it so a machine learning model can understand what it's seeing, hearing, or reading.

It’s a bit like preparing a detailed study guide for a student. You wouldn't just hand them a stack of random books. Instead, you'd highlight key passages, add notes in the margins, and create flashcards. That's precisely what these platforms do for AI: they turn messy, raw data into the pristine, structured "study material" that algorithms need to learn effectively. Without this step, an AI model is essentially flying blind.

The Bedrock of Smart Technology

A data annotation platform acts as the critical go-between, translating raw information into the language of machine learning.

Think about teaching a toddler to recognize a dog. You’d point to different dogs—big ones, small ones, fluffy ones—and say "dog" each time. You are actively labeling the world for them. A data annotation platform provides the digital tools to do the same for an AI, but on a massive scale with incredible precision. This process is what turns a simple photo into a valuable piece of training data, telling the model, "This specific cluster of pixels is a car," or "This sentence has a negative sentiment."

More Than Just Drawing Boxes

It's easy to think data annotation is just about slapping labels on things, but a professional platform manages a much more sophisticated workflow. It's the central command center for turning a chaotic flood of data into a high-quality asset.

Here’s a breakdown of what’s really happening inside one of these platforms:

- Data Management: It starts with corralling all your raw files, whether it's thousands of street-view images or hours of recorded conversations. The platform keeps everything organized and accessible.

- Precise Labeling Tools: You get a specialized toolkit for the job at hand. This could be anything from drawing complex polygons around tumors in medical scans (image segmentation) to categorizing customer support tickets (text classification).

- Team Coordination: Most serious annotation projects involve teams of people. The platform helps you assign tasks, monitor who is doing what, and track overall project progress without chaos.

- Quality Control: This is a big one. The platform provides built-in review and quality assurance (QA) loops. This allows senior annotators or project managers to double-check the work, catch mistakes, and provide feedback, ensuring the final dataset is accurate.

This structured, methodical approach is what makes or breaks an AI project. In fact, one of the main reasons AI initiatives stumble is "garbage in, garbage out": models trained on inconsistent or poorly labeled data produce unreliable results. A solid platform is your best defense against that.

A data annotation platform provides the critical infrastructure for creating high-quality training data, which is one of the most important factors in building a successful machine learning model.

To put it simply, these platforms bring order and quality control to the foundational step of AI development. Here’s a quick look at who they serve and what they accomplish.

Data Annotation Platforms at a Glance

| Core Function | Primary Users | Key Outcome |
|---|---|---|
| To provide the tools and workflow management for labeling raw data (images, text, video, etc.). | Data Scientists, ML Engineers, Project Managers, and professional Data Annotators. | High-quality, structured training data ready to be used for machine learning models. |

Ultimately, a good platform ensures that the data fueling your AI is reliable, consistent, and accurate, which is the foundation of any model you can trust.

The Engine of AI's Expansion

As AI becomes more common in just about every industry, the need for well-labeled data has skyrocketed, lighting a fire under the market for these tools.

The global data annotation platform market was valued at USD 1.875 billion and is expected to grow at a compound annual growth rate (CAGR) of 25% over the next few years. This isn't just a niche market anymore. This growth is directly fueled by major advancements in fields like autonomous driving, medical diagnostics, and automated customer service—all of which are completely dependent on enormous volumes of meticulously annotated data. You can dig deeper into these numbers by exploring research on global platform trends.
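A CAGR is shorthand for compound growth: a starting value V0 growing at rate r for n years reaches V0 × (1 + r)^n. The minimal sketch below applies that formula to the USD 1.875 billion starting point and the 25% rate quoted above. The article does not state a base year or an end date, so the five-year horizon is an assumption for illustration, not a forecast from the cited research.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Value after `years` of compound growth at a constant annual rate `cagr`."""
    return start_value * (1 + cagr) ** years


BASE_USD_BILLION = 1.875  # starting valuation quoted in the text
RATE = 0.25               # 25% CAGR quoted in the text

# Illustrative five-year horizon; the source does not state the actual period.
for year in range(1, 6):
    value = project_value(BASE_USD_BILLION, RATE, year)
    print(f"Year {year}: USD {value:.2f} billion")
```

At 25% a year the multiplier after five years is 1.25^5 ≈ 3.05, so a market growing at that rate roughly triples over the period.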

Inside the Modern Data Annotation Toolkit

To really get what a data annotation platform does, you have to look under the hood. It’s less like a single piece of software and more like a high-tech workshop, filled with specialized stations for every step of the process. A top-tier platform connects all these stations into a seamless production line, turning raw data into the high-quality fuel your AI models need to learn.

Let's walk through the different parts of this workshop.

The Annotation Toolkit

At the center of it all is the annotation toolkit. This is the digital workbench where the hands-on labeling actually happens. It’s not a one-size-fits-all hammer; it's a full set of precision instruments designed for different types of data.

If you're working with images, your toolkit will have features for drawing tight bounding boxes around cars in a street scene or using intricate polygons to trace the exact shape of a tumor in a medical scan. For a text project, the tools look more like digital highlighters for identifying key phrases (named entity recognition) or tagging emotional tone (sentiment analysis). The tools you have dictate the kind of work you can do.

Workflow and Project Management

Having the right tools is one thing, but managing the actual work is another beast entirely. This is where workflow and project management features step in. Think of this component as the factory floor supervisor, making sure the entire annotation assembly line runs like a well-oiled machine.

This system is the brains of the operation, handling crucial logistics:

- Task Distribution: It automatically routes labeling jobs to the right annotators based on their skills, current workload, or availability. No more manual assignments.

- Progress Tracking: It gives you a bird's-eye view of the entire project in real time. You can instantly see how many files are labeled, which ones are pending review, and what your overall completion percentage is.

- Version Control: It meticulously tracks every change, so you never have to deal with the chaos of conflicting dataset versions.

Without solid workflow management, even a small project can quickly become a mess of missed deadlines and duplicated work. For large-scale operations with millions of data points and dozens of annotators, it's absolutely essential.
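The routing and progress-tracking logic described above can be sketched in a few lines of Python. The `Annotator` and `Task` classes, the skill names, and the "least-loaded qualified annotator" rule are hypothetical choices for this example; real platforms use their own, often configurable, assignment policies.

```python
from dataclasses import dataclass, field


@dataclass
class Annotator:
    name: str
    skills: set          # annotation types this person is qualified for
    capacity: int        # maximum number of open tasks at once
    assigned: list = field(default_factory=list)


@dataclass
class Task:
    task_id: str
    skill: str                  # e.g. "bbox", "polygon", "ner"
    status: str = "unassigned"  # unassigned -> assigned -> approved (simplified)


def assign_tasks(tasks, team):
    """Route each unassigned task to the least-loaded qualified annotator.

    Returns the tasks nobody could take (no matching skill or no spare capacity).
    """
    leftover = []
    for task in tasks:
        if task.status != "unassigned":
            continue
        eligible = [a for a in team
                    if task.skill in a.skills and len(a.assigned) < a.capacity]
        if not eligible:
            leftover.append(task)
            continue
        chosen = min(eligible, key=lambda a: len(a.assigned))
        chosen.assigned.append(task.task_id)
        task.status = "assigned"
    return leftover


def progress(tasks):
    """Count tasks per status and report the percentage that is fully approved."""
    counts = {}
    for t in tasks:
        counts[t.status] = counts.get(t.status, 0) + 1
    approved = counts.get("approved", 0)
    percent = 100 * approved / len(tasks) if tasks else 0.0
    return counts, percent


team = [Annotator("ana", {"bbox", "polygon"}, capacity=2),
        Annotator("ben", {"ner"}, capacity=1)]
tasks = [Task("img-1", "polygon"), Task("img-2", "bbox"), Task("img-3", "polygon"),
         Task("doc-1", "ner"), Task("doc-2", "ner")]

leftover = assign_tasks(tasks, team)
print([t.task_id for t in leftover])  # img-3 and doc-2 wait for free capacity
print(progress(tasks))
```

Returning the unassignable tasks, rather than silently dropping them, is what lets a dashboard show a backlog and tell a project manager where to add people.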

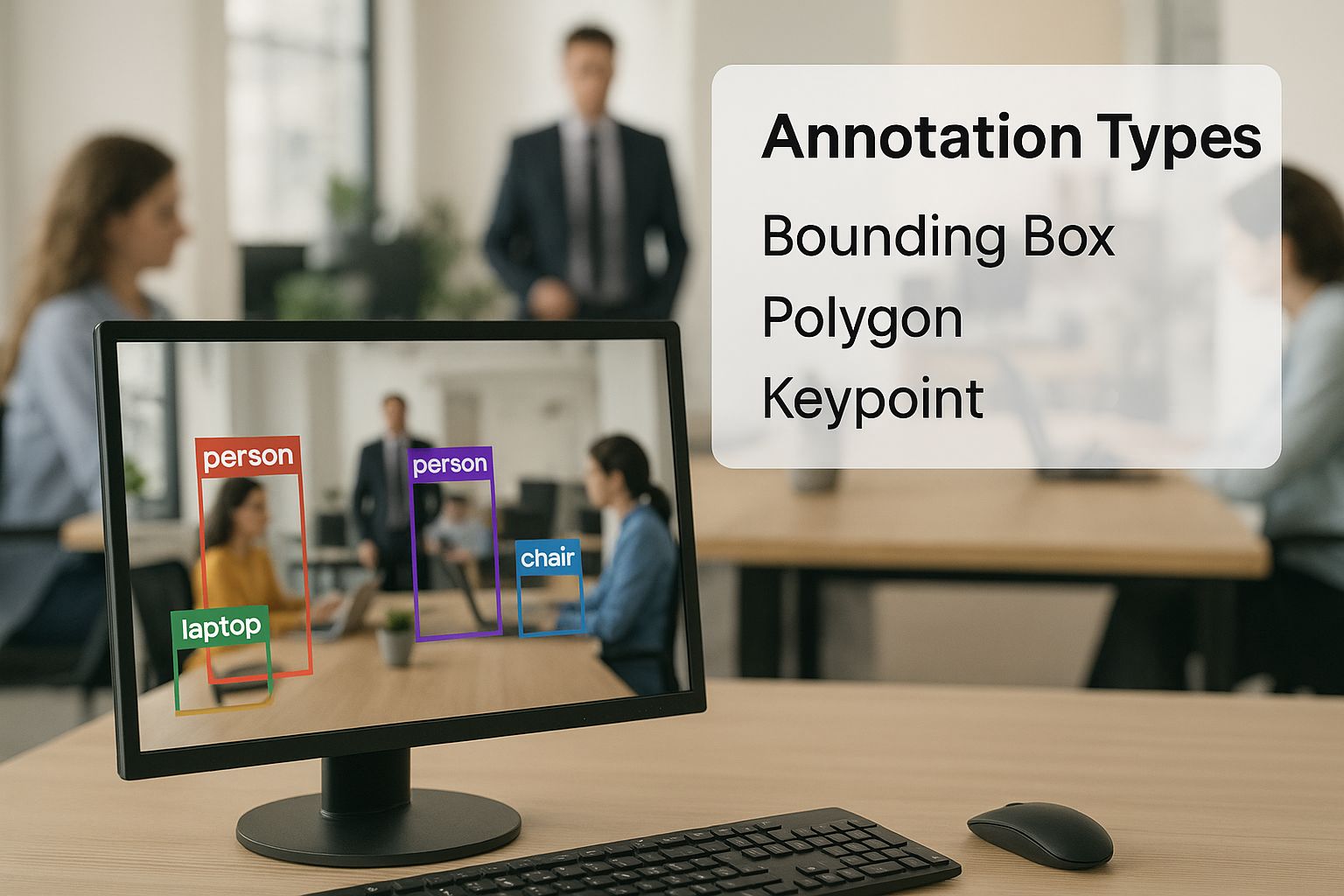

The image below gives a great visual of just how many different annotation types a powerful toolkit needs to support to handle the diverse world of AI.

As you can see, a modern platform has to be a jack-of-all-trades, offering a wide array of tools to tackle the unique challenges of different machine learning goals.

The Quality Assurance Gate

You could argue that the most critical station in the entire workshop is quality assurance (QA). This is the final inspection gate where labeled data is checked for accuracy and consistency before it gets anywhere near your AI model. Remember, low-quality data is one of the most common reasons AI projects fail, which makes this component non-negotiable.

A data annotation platform without strong QA features is like a factory with no final inspection. You might produce things quickly, but you have no idea if they actually work.

A good QA module allows for layered reviews. For instance, a junior annotator might do the first pass, and their work is then automatically sent to a senior reviewer for approval. If the reviewer spots an error, they can reject the label, add specific feedback, and send it back for correction—all without leaving the platform. This creates a powerful feedback loop that doesn't just fix mistakes but actively trains your annotators to get better over time.
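The layered review described above behaves like a small state machine: an item can only move along approved paths, and a rejection has to carry feedback. This Python sketch assumes illustrative status names (`draft`, `submitted`, `rejected`, `approved`) rather than any specific platform's vocabulary.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Allowed status changes in a two-stage review loop (illustrative names).
TRANSITIONS = {
    "draft": {"submitted"},
    "submitted": {"approved", "rejected"},
    "rejected": {"submitted"},   # annotator fixes the label and resubmits
    "approved": set(),           # terminal: ready for export
}


@dataclass
class LabeledItem:
    item_id: str
    label: str
    status: str = "draft"
    feedback: List[str] = field(default_factory=list)

    def move_to(self, new_status: str, note: Optional[str] = None) -> None:
        """Change status, enforcing the workflow and requiring feedback on rejection."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot move from {self.status!r} to {new_status!r}")
        if new_status == "rejected" and not note:
            raise ValueError("a rejection must include reviewer feedback")
        if note:
            self.feedback.append(note)
        self.status = new_status


item = LabeledItem("img-42", "car")
item.move_to("submitted")                                        # junior annotator's first pass
item.move_to("rejected", note="This is a pickup; use 'truck'.")  # senior reviewer
item.label = "truck"
item.move_to("submitted")                                        # corrected and resubmitted
item.move_to("approved")
print(item.status, item.feedback)
```

Keeping the feedback attached to the item is what turns a single correction into a record the annotator (and their manager) can learn from later.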

Workforce Management and Collaboration

Finally, a truly modern platform has to manage its most valuable asset: people. Your workforce—whether it's an in-house team, a global network of freelancers, or a managed service from a partner like Zilo—needs a central hub to collaborate effectively.

The platform provides the tools for communication, performance tracking, and secure access to data. This integration means that no matter where your annotators are, they can all work together under the same guidelines and quality standards. It’s what makes large-scale, high-quality data annotation a manageable reality instead of a logistical nightmare.

Matching Annotation Types to Real-World Problems

Data annotation isn’t a one-size-fits-all job. It's a whole collection of different techniques, and the right one always depends on the specific problem you’re trying to solve with AI. Honestly, picking a data annotation platform that’s a perfect fit for your data type can make or break your entire project. It's that important.

Think of it like a mechanic's toolbox. You wouldn't grab a hammer to change a tire or use a wrench to smooth out a dent. It’s the same with AI. The tools you need to teach a self-driving car how to see the road are completely different from what you'd use to help a chatbot understand a frustrated customer. Each challenge demands its own specialized instrument.

For many machine learning projects, a fundamental first step is to efficiently categorize and classify data with no-code AI, which serves as the foundation for more complex applications.

Image and Video Annotation for Computer Vision

Computer vision is probably the most famous application of AI, and it’s completely dependent on incredibly detailed image and video annotation. The entire goal here is to translate what we see into a language a machine can actually process, one pixel at a time.

Here are a few of the core techniques and where you'll see them in action:

- Bounding Boxes: This is the classic approach. Annotators simply draw a rectangle around an object of interest. For an e-commerce site, this might mean tagging every single product in a lifestyle photo. In a self-driving car, it’s about drawing a box around every car, person, and traffic light to build a basic map of the road ahead.

- Semantic Segmentation: This technique goes much, much deeper. Instead of just a box, every single pixel in an image gets a category label like "road," "sky," or "building." This is absolutely essential for autonomous vehicles, which need to know the precise boundaries of the drivable area to operate safely.

- Polygon Annotation: When you're dealing with objects that have weird, irregular shapes, you need something more precise than a box. Medical AI relies on this to trace the exact outline of a tumor in an MRI scan. In agriculture, it might be used to pinpoint a specific disease on a plant's leaf.

These visual annotation methods are what give machines the ability to perceive and make sense of the physical world.
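The precision gap between a bounding box and a polygon is easy to quantify. This sketch computes a polygon's area with the shoelace formula and compares it with the area of its enclosing box; the diamond-shaped "lesion" coordinates are made up for illustration, in pixel units.

```python
def polygon_area(points):
    """Area of a simple (non-self-intersecting) polygon via the shoelace formula."""
    total = 0.0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]  # wrap around to close the shape
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def bounding_box(points):
    """Tightest axis-aligned box (x_min, y_min, x_max, y_max) around the points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


def box_area(box):
    x_min, y_min, x_max, y_max = box
    return (x_max - x_min) * (y_max - y_min)


# Hypothetical outline of an irregular region, in pixel coordinates.
lesion = [(50, 0), (100, 50), (50, 100), (0, 50)]

poly = polygon_area(lesion)
box = box_area(bounding_box(lesion))
print(f"polygon: {poly} px^2, box: {box} px^2, box pixels on the object: {poly / box:.0%}")
```

Here only half of the box's pixels belong to the object; the rest is background that a box-trained model would learn as "lesion". Semantic segmentation goes one step further and assigns a class to every pixel, so the label is as precise as the image resolution allows.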

Choosing the right annotation type is fundamental. An AI model trained to identify products with simple bounding boxes will be completely ineffective at a medical task requiring pixel-perfect segmentation.

Text Annotation for Language Understanding

If computer vision teaches AI to see, then text annotation teaches it to read and understand what we write. It’s the magic behind every chatbot, virtual assistant, and sentiment analysis tool you’ve ever used. A good data annotation platform for this work must have tools designed specifically for picking apart human language.

Here are the key methods you'll encounter:

- Named Entity Recognition (NER): This is all about finding and labeling key bits of information in a block of text. A customer service bot uses NER to instantly spot a "product name," "order number," or "complaint type" in a support ticket, figuring out what the user needs without a human having to read it first.

- Sentiment Analysis: With this technique, annotators tag text with its emotional tone—positive, negative, or neutral. Brands use this to sift through thousands of social media comments and product reviews to get an instant read on how a new launch is being received.

- Intent Annotation: This goes beyond just understanding words; it’s about figuring out the user's ultimate goal. When you tell your phone, "Book a flight to New York," the intent is "booking," not just searching for information. This is critical for building conversational AI that can actually do things for you. For new companies in this space, getting this right is a huge deal, which highlights why data annotation is critical for AI startups.
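Character offsets are a common way to store NER spans alongside document-level labels such as sentiment and intent. The sketch below shows one possible record layout plus a validator that catches typical labeling mistakes. The label names, the `record` fields, and the sample support ticket are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Span:
    start: int   # character offset, inclusive
    end: int     # character offset, exclusive
    label: str


# Hypothetical label set for a support-ticket project.
ENTITY_LABELS = {"ORDER_NUMBER", "PRODUCT_NAME", "COMPLAINT_TYPE"}


def span_for(text, phrase, label):
    """Build a span from the first occurrence of `phrase` (raises ValueError if absent)."""
    start = text.index(phrase)
    return Span(start, start + len(phrase), label)


def validate_spans(text, spans, allowed):
    """Return readable problems: bad offsets, unknown labels, stray whitespace, overlaps."""
    problems = []
    for s in spans:
        if not 0 <= s.start < s.end <= len(text):
            problems.append(f"{s}: offsets outside the text")
        elif s.label not in allowed:
            problems.append(f"{s}: unknown label {s.label!r}")
        elif text[s.start:s.end] != text[s.start:s.end].strip():
            problems.append(f"{s}: span includes leading/trailing whitespace")
    ordered = sorted(spans, key=lambda s: s.start)
    for i, a in enumerate(ordered):
        for b in ordered[i + 1:]:
            if b.start < a.end:  # sorted by start, so b begins inside a
                problems.append(f"{a} overlaps {b}")
    return problems


ticket = "My order 58213 for the AirLite kettle arrived broken."
record = {
    "text": ticket,
    "entities": [span_for(ticket, "58213", "ORDER_NUMBER"),
                 span_for(ticket, "AirLite kettle", "PRODUCT_NAME"),
                 span_for(ticket, "arrived broken", "COMPLAINT_TYPE")],
    "sentiment": "negative",           # document-level sentiment label
    "intent": "report_damaged_item",   # what the customer wants handled
}
print(validate_spans(ticket, record["entities"], ENTITY_LABELS))  # []
```

Checks like these are cheap to run on every submission and catch the off-by-one and overlapping-span errors that are hard to spot by eye.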

Audio Annotation for Voice Technologies

Finally, audio annotation is the reason our voice-activated world works. From the smart speaker in your kitchen to the assistant in your car, every command gets processed by models trained on carefully labeled sound data. The main task here is audio transcription, which is just turning spoken words into written text.

But it's more than just typing out what was said. Annotators also perform speaker diarization (figuring out who is speaking and when) and tag non-speech sounds like "background noise" or "music." This level of detail is what allows a voice assistant to hear your command over the clatter of a busy kitchen and still get it right.
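Diarization labels usually boil down to time-stamped segments. This sketch assumes a plain `(start_seconds, end_seconds, label)` tuple format and a convention that non-speech events are written in square brackets; both are illustrative assumptions, since real tools export their own formats.

```python
from collections import defaultdict

# (start_seconds, end_seconds, label) as an annotator might mark them.
segments = [
    (0.0, 4.5, "agent"),
    (4.2, 9.0, "customer"),
    (5.0, 6.0, "[background_noise]"),
    (9.0, 12.5, "agent"),
]


def talk_time(segs):
    """Total seconds per label (speakers and non-speech events alike)."""
    totals = defaultdict(float)
    for start, end, label in segs:
        totals[label] += end - start
    return dict(totals)


def overlapping_speech(segs):
    """Pairs of speaker turns whose time ranges overlap (crosstalk or a boundary mistake).

    Non-speech events (labels in square brackets) are ignored, since noise
    under speech is expected.
    """
    speech = sorted(s for s in segs if not s[2].startswith("["))
    pairs = []
    for i, a in enumerate(speech):
        for b in speech[i + 1:]:
            if b[0] < a[1]:  # sorted by start time, so b begins before a ends
                pairs.append((a, b))
    return pairs


print(talk_time(segments))
print(overlapping_speech(segments))
```

An overlap between two speakers is not automatically an error, since people do talk over each other, but flagging it lets a reviewer confirm whether it is genuine crosstalk or a misplaced boundary.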

The Real-World Benefits of a Centralized Platform

Why bother with a dedicated data annotation platform when you can just juggle spreadsheets, shared drives, and a mix of other tools? It really comes down to a massive leap in performance and reliability. Without a central system, teams often get trapped in a frustrating cycle of inconsistent labels, version control headaches, and a total lack of visibility into how a project is actually progressing.

It’s a common scenario. One annotator labels a stop sign one way, another does it differently, and suddenly you have conflicting data that will only confuse your AI model. Files get overwritten, progress is tracked across a dozen different spreadsheets, and no one has a clue if the project is 50% done or 90% done. It’s messy, slow, and a surefire way to produce low-quality training data.

Now, imagine that same team using a proper data annotation platform. Suddenly, they’re managing millions of annotations from a single, unified environment. This isn't just a small tweak to their workflow; it’s a fundamental shift that delivers some very real business advantages.

Radical Gains in Efficiency

The most immediate and obvious win is the boost in pure operational efficiency. Trying to manage annotation manually is incredibly slow and labor-intensive. A good platform automates the mind-numbing, repetitive tasks that eat up your team's time, freeing them to focus on what matters: high-quality labeling.

This automation isn't just a buzzword. It means:

- Automated Task Distribution: The system is smart enough to assign tasks to available annotators, which gets rid of manual delegation and a ton of bottlenecks.

- Integrated Workflows: Every step—from the initial labeling to the review and final approval—happens in one smooth flow. No more bouncing between different apps.

- Performance Analytics: Dashboards give you an instant, clear picture of annotator speed and overall project velocity, so you can spot and fix slowdowns before they become major problems.

Measurable Improvements in Data Quality

A centralized platform is your best weapon against the "garbage in, garbage out" problem that derails so many AI projects. It creates a single source of truth for your annotation guidelines and quality standards, making sure every single label is consistent and accurate.

Think of it this way: with built-in QA checks and consensus features, a platform can automatically flag errors and disagreements that would be nearly impossible for a human to catch manually. This directly results in more accurate, more reliable AI models.

These platforms bake quality right into the process. They use features like reviewer consensus, where multiple annotators have to agree on a label before it's accepted, and automated checks that flag potential mistakes for a human to review. This systematic approach is the bedrock of building data-driven decision-making capabilities you can actually trust.
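Reviewer consensus can be as simple as a majority vote with an agreement threshold. In this sketch each item is labeled independently by several annotators; the two-thirds threshold and the road-sign labels are hypothetical, and production systems may use more elaborate agreement measures.

```python
from collections import Counter


def consensus(labels, min_agreement=2 / 3):
    """Majority vote over independent labels for one item.

    Returns (label, agreement). label is None when no option reaches
    `min_agreement`, meaning the item should go to a human reviewer.
    """
    if not labels:
        raise ValueError("need at least one label")
    top_label, top_count = Counter(labels).most_common(1)[0]
    agreement = top_count / len(labels)
    return (top_label if agreement >= min_agreement else None), agreement


votes = {
    "img-1": ["stop_sign", "stop_sign", "stop_sign"],
    "img-2": ["stop_sign", "stop_sign", "yield_sign"],
    "img-3": ["stop_sign", "yield_sign", "speed_limit"],
}

for item_id, labels in votes.items():
    label, agreement = consensus(labels)
    verdict = f"accept {label}" if label else "send to reviewer"
    print(f"{item_id}: {agreement:.0%} agreement -> {verdict}")
```

Raising `min_agreement` sends more items to human review; lowering it accepts more labels automatically. Where to set it is a trade-off between review cost and label reliability.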

Streamlined Team Collaboration

Large-scale annotation is absolutely a team sport. You might have dozens of annotators, reviewers, and project managers working from different locations. A data annotation platform acts as the digital hub for the entire operation.

It’s a shared workspace where everyone is on the same page, working from the same playbook. Reviewers can drop feedback directly on a specific annotation, creating a powerful learning loop that helps the entire team get better over time. This collaborative core ensures your standards stay high, no matter how big the team gets.

Rock-Solid Data Security and Compliance

Finally, let’s talk security. When you're handling huge datasets, especially ones with sensitive or personal information, security has to be a top priority. A professional platform is built from the ground up with security in mind, offering features that cobbled-together solutions almost always lack: granular access controls, detailed audit trails, and robust encryption to help you meet strict compliance standards. A well-designed platform also supports sound research data management, keeping your data both secure and accessible; for more on that, see this practical guide to research data management.
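Granular access control and audit trails can be pictured as a permission check that records every decision. This minimal sketch uses role-based access control; the role names, permission strings, and in-memory log are illustrative assumptions, and a real deployment would persist the log somewhere tamper-resistant.

```python
from datetime import datetime, timezone

# Hypothetical role -> permission mapping; real platforms define their own roles.
ROLE_PERMISSIONS = {
    "annotator": {"view_assigned", "label"},
    "reviewer": {"view_assigned", "label", "review"},
    "admin": {"view_all", "label", "review", "export", "manage_users"},
}

audit_log = []  # append-only record of every access decision


def authorize(user, role, action, resource):
    """Check a permission and record the attempt, whether or not it is allowed.

    Unknown roles get no permissions (deny by default).
    """
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "resource": resource,
        "allowed": allowed,
    })
    return allowed


authorize("ana", "annotator", "export", "dataset-7")   # denied: annotators can't export
authorize("sam", "admin", "export", "dataset-7")       # allowed
for entry in audit_log:
    status = "allowed" if entry["allowed"] else "DENIED"
    print(entry["user"], entry["action"], entry["resource"], status)
```

Logging denied attempts as well as successful ones is the point: an audit trail should show who tried to reach sensitive data, not only who succeeded.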

The market itself shows just how critical these tools have become. The data annotation tools market was valued at USD 2.33 billion and is projected to skyrocket to USD 8.92 billion as everyone from autonomous vehicle developers to smart agriculture companies demands more high-quality, securely managed data. You can find more details on this growth in this report on the expanding data annotation market.

How to Choose the Right Data Annotation Platform

Picking a data annotation platform is one of the most critical decisions you'll make in any AI project. This isn't just about buying software; it's an investment in the very foundation of your model’s intelligence. The right platform becomes a powerful strategic asset, but the wrong one can saddle your project with friction, errors, and expensive delays.

To avoid that headache, you need a solid game plan to cut through the marketing fluff and focus on what actually matters for your project. Let's walk through how to build one.

Start With Your Data and Use Case

First things first: look inward. A platform that's brilliant for drawing bounding boxes on images might be completely useless for transcribing complex audio or segmenting 3D LiDAR scans. Before you even glance at a vendor's website, get crystal clear on your own requirements.

What kind of data are you working with? Is it images, video, text, audio, or something more specialized like medical DICOM files? Will you be dealing with just one data type, or do you need a multimodal platform that can juggle several? Get specific about the annotation techniques you need—are we talking simple classification, object detection with polygons, or pixel-perfect semantic segmentation?

This initial self-assessment acts as your compass. It will immediately help you filter out a whole category of platforms that simply aren't a good technical fit, saving you from countless wasted hours on demos.

Evaluate Core Annotation and QA Features

Once you have a shortlist of platforms that can handle your data, it's time to get your hands dirty and inspect the workshop—the core annotation and quality assurance (QA) tools. This is where your team will be spending most of their time, so the user experience and precision are everything.

When you're in a demo, pay close attention to the annotation interface. Is it intuitive? Are there keyboard shortcuts and clever automation features to speed up those repetitive tasks? A good video annotation tool, for instance, should offer interpolation: you label an object on a few keyframes, and the tool estimates its position in the frames in between, so no one has to label every frame by hand.
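Keyframe interpolation in its simplest form is linear: each box coordinate moves in a straight line from one keyframe to the next. This sketch assumes `(x_min, y_min, x_max, y_max)` boxes in pixels and made-up keyframes; commercial tools may use more sophisticated tracking, but the labeling workflow is the same.

```python
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def interpolate_boxes(frame_a: int, box_a: Box, frame_b: int, box_b: Box) -> Dict[int, Box]:
    """Linearly interpolate a bounding box for every frame from frame_a to frame_b inclusive."""
    if frame_b <= frame_a:
        raise ValueError("frame_b must come after frame_a")
    boxes = {}
    for frame in range(frame_a, frame_b + 1):
        t = (frame - frame_a) / (frame_b - frame_a)  # 0.0 at frame_a, 1.0 at frame_b
        boxes[frame] = tuple(a + t * (b - a) for a, b in zip(box_a, box_b))
    return boxes


# Two hand-drawn keyframes of a car moving right; frames 1-3 are filled in.
track = interpolate_boxes(0, (10, 20, 50, 60), 4, (30, 20, 70, 60))
for frame, box in track.items():
    print(frame, box)
```

Linear interpolation assumes roughly constant motion between keyframes. Where an object speeds up, turns, or is occluded, the annotator adds another keyframe or corrects the in-between frames, which is still far faster than drawing every frame.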

Just as important are the quality control features.

- Review Workflows: Can you easily set up multi-step reviews where one person’s work is automatically sent to a senior reviewer for a second look?

- Consensus Scoring: Does the platform have a consensus feature that automatically flags annotations where multiple labelers disagree? This is invaluable for spotting and fixing ambiguous cases.

- Performance Analytics: Can you track how accurate and fast your annotators are? This helps you identify who might need a bit more training.

These aren't just nice-to-haves; they are the machinery that ensures your training data is consistent and accurate.
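Annotator accuracy and speed are often measured by mixing items with known correct answers (sometimes called gold-standard or "honeypot" items) into the regular queue. This sketch assumes that setup; the `Submission` fields and sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    annotator: str
    item_id: str
    label: str
    seconds_spent: float


def annotator_metrics(submissions, gold):
    """Per annotator: accuracy on gold items (None if none seen) and mean seconds per item."""
    stats = {}
    for s in submissions:
        st = stats.setdefault(s.annotator, {"items": 0, "seconds": 0.0, "checked": 0, "correct": 0})
        st["items"] += 1
        st["seconds"] += s.seconds_spent
        if s.item_id in gold:
            st["checked"] += 1
            if s.label == gold[s.item_id]:
                st["correct"] += 1
    return {
        name: {
            "accuracy": st["correct"] / st["checked"] if st["checked"] else None,
            "avg_seconds": st["seconds"] / st["items"],
        }
        for name, st in stats.items()
    }


gold = {"img-1": "car", "img-2": "bus"}  # items with known answers
subs = [
    Submission("ana", "img-1", "car", 12), Submission("ana", "img-2", "bus", 8),
    Submission("ana", "img-3", "truck", 10),
    Submission("ben", "img-1", "car", 20), Submission("ben", "img-2", "car", 25),
]
for name, m in annotator_metrics(subs, gold).items():
    print(name, m)
```

Looking at accuracy and speed together matters: a fast annotator with low gold accuracy may need clearer guidelines, while a slow but accurate one may just need better tooling.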

A platform's true value isn't just in its labeling tools, but in its ability to systematically enforce quality at scale. Without robust QA, you're just labeling faster, not better.

Consider Workforce and Integration Capabilities

A data annotation platform doesn't operate in a bubble. It has to fit into your bigger operational and technical world. A huge piece of that puzzle is your workforce. Do you have an in-house team ready to go, or will you be relying on a third-party managed workforce?

Some platforms are pure software-as-a-service (SaaS), designed for your team to use. Others bundle the software with a pre-vetted, managed workforce. If you plan on using an external team, you'll need a platform with solid user management and role-based permissions to keep everything secure. If you’re thinking about outsourcing the whole shebang, you might want to look into some of the top data annotation service companies to see how they pair their services with technology.

Finally, the platform must play nicely with your existing tools, especially your cloud storage (like Amazon S3 or Google Cloud Storage) and your MLOps pipeline. A seamless API connection is non-negotiable for automating the flow of data from storage, through the platform, and into your model training environment. Demand for these integrated, high-quality solutions is fueling major market growth: another projection values the data annotation tools market at USD 2.11 billion and expects it to reach USD 12.45 billion by 2033.
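One common integration pattern is to give the platform a manifest that points at files already sitting in cloud storage, instead of uploading copies. The sketch below builds a JSON Lines manifest from a list of storage URIs. The bucket name, field names, and manifest layout are assumptions, since each platform documents its own import format; in practice the URI list would come from your storage provider's SDK (for example, listing objects with boto3 for Amazon S3).

```python
import json
import os
from urllib.parse import urlparse

# Hypothetical extension -> data type mapping for an import job.
EXTENSION_TO_TYPE = {
    ".jpg": "image", ".jpeg": "image", ".png": "image",
    ".mp4": "video", ".wav": "audio", ".mp3": "audio", ".txt": "text",
}


def build_manifest(uris):
    """Turn storage URIs into JSON Lines import records; return (manifest, skipped_uris)."""
    lines, skipped = [], []
    for uri in uris:
        extension = os.path.splitext(urlparse(uri).path)[1].lower()
        data_type = EXTENSION_TO_TYPE.get(extension)
        if data_type is None:
            skipped.append(uri)  # unsupported file type: report it instead of failing silently
            continue
        lines.append(json.dumps({"source": uri, "data_type": data_type, "status": "unlabeled"}))
    return "\n".join(lines), skipped


uris = [
    "s3://example-bucket/raw/street_0001.JPG",
    "s3://example-bucket/raw/call_0017.wav",
    "s3://example-bucket/raw/notes.docx",
]
manifest, skipped = build_manifest(uris)
print(manifest)
print("skipped:", skipped)
```

Generating the manifest in code, rather than by hand, is what lets a pipeline re-run the import automatically whenever new raw data lands in the bucket.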

Platform Evaluation Checklist

To tie this all together, here’s a simple framework you can use when comparing different platforms. It’s designed to help you ask the right questions and focus on the features that will directly impact your project's success.

| Evaluation Criteria | Questions to Ask | Why It Matters |
|---|---|---|
| Data & Annotation Support | Does it handle my specific data types (e.g., DICOM, LiDAR)? Does it offer all the annotation tools I need (e.g., polygons, keypoints)? | The platform must be a perfect technical match for your project's core requirements. No workarounds. |
| Annotation Interface (UI/UX) | Is the interface intuitive? Are there hotkeys and automation features (like interpolation) to boost speed? | A clunky interface slows down your team, increases costs, and can lead to more errors. |
| Quality Assurance (QA) Tools | Can I create multi-level review workflows? Is there a consensus scoring feature? Can I track annotator metrics? | High-quality data is the goal. Robust QA features are the only way to systematically achieve and maintain it. |
| Workforce Management | Does it support my workforce model (in-house, outsourced)? Are the user roles and permissions granular enough? | You need a platform that aligns with how you staff projects, whether it's managing your own team or collaborating with vendors. |
| Integration & API | Does it offer seamless integration with my cloud storage? Is there a well-documented API for MLOps pipeline automation? | Manual data transfer is a bottleneck. Strong integration capabilities are essential for an efficient, automated workflow. |
| Security & Compliance | What security certifications and compliance commitments does it have (e.g., SOC 2 reports, GDPR compliance)? How is data stored and accessed? | Protecting your proprietary data is non-negotiable, especially when dealing with sensitive information. |
| Scalability & Performance | Can the platform handle massive datasets without lagging? What's the process for scaling up the number of annotators? | Your needs will grow. The platform must be able to handle increased data volume and team size without falling over. |
| Support & Documentation | Is the documentation clear? What level of technical support is offered (e.g., dedicated manager, community forum)? | When you run into an issue (and you will), good support can be the difference between a minor hiccup and a major delay. |

By systematically going through these questions, you move beyond the sales pitch and can make a well-informed decision based on a platform's real-world capabilities and its fit for your specific needs.

Frequently Asked Questions About Data Annotation

When you start moving from AI theory to real-world application, the practical questions pop up fast. Suddenly, you're thinking about costs, tools, and the best way to get the job done. A data annotation platform is a serious investment, so it’s smart to get your questions answered before you commit.

This FAQ section cuts through the noise. We'll tackle the most common questions we hear from teams just getting started with data annotation, giving you the clear, practical answers you need to make the right call.

What Is the Difference Between a Platform and a Service?

This is one of the first and most important forks in the road you'll encounter. Getting this right will shape your project's workflow, budget, and timeline. The difference is actually quite simple, but it's critical.

Think of a data annotation platform as the software itself—it's your digital workshop. You get all the specialized tools needed to label data, but you bring your own team of annotators to do the work. This approach gives you complete control over the process, which is perfect for teams with in-house experts or highly specific quality standards.

A data annotation service, on the other hand, is the "done-for-you" package. You're not just buying software; you're hiring the people, too. The service provider uses their own platform and their own trained annotators to handle everything from labeling to quality checks. They deliver a ready-to-use dataset, which is a lifesaver for teams that need to move fast or don't have the staff to manage a big labeling project.

- Platform (DIY): You subscribe to the software and use your internal team. You get maximum control and it can be more cost-effective if you already have the people.

- Service (Done-For-You): You hire an external team to do the labeling. This gives you speed and scale without the headache of managing the process yourself.

The right choice really boils down to your team's size, internal expertise, and how quickly you need to get moving.

How Much Does a Data Annotation Platform Cost?

There's no single sticker price, and honestly, the cost can be all over the map. How much you pay is almost always tied directly to how you use the platform.

Most pricing models fall into one of these buckets:

- Usage-Based: This is your classic pay-as-you-go. You might pay per hour an annotator spends on the platform or per item they label (like a fee for every image or document). It’s flexible and often makes sense for smaller projects or if your needs come in waves.

- Subscription (SaaS): This is probably the most common model. You pay a recurring monthly or annual fee for a certain number of user "seats" (how many annotators can log in) or for access to a specific tier of features.

- Enterprise License: For large-scale operations, vendors usually put together a custom-priced enterprise package. These deals typically come with premium security, dedicated support, and other services designed for big companies.

You'll also find free and open-source tools out there. They can be great for learning the ropes or for tiny projects, but they rarely have the advanced features, security, and support you need for serious commercial AI development. When you're running the numbers, always think in terms of total cost of ownership—it's not just the license fee, but also the time and effort your team will sink into using and managing the tool.
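To make "total cost of ownership" concrete, here is a back-of-the-envelope comparison of a usage-based plan and a seat-based subscription. Every price, volume, and hour estimate below is a made-up placeholder, not a vendor quote; plug in your own numbers.

```python
def usage_based_cost(items, price_per_item):
    return items * price_per_item


def subscription_cost(seats, price_per_seat_per_month, months):
    return seats * price_per_seat_per_month * months


def total_cost_of_ownership(license_cost, internal_hours, hourly_rate):
    """License fees plus the cost of your team's time spent setting up and managing the tool."""
    return license_cost + internal_hours * hourly_rate


# Hypothetical six-month project: 200,000 images, 5 seats, 40 admin hours at $50/hour.
usage_tco = total_cost_of_ownership(usage_based_cost(200_000, 0.05), internal_hours=40, hourly_rate=50)
subscription_tco = total_cost_of_ownership(subscription_cost(5, 400, 6), internal_hours=40, hourly_rate=50)
print(f"usage-based TCO:  ${usage_tco:,.0f}")
print(f"subscription TCO: ${subscription_tco:,.0f}")
```

With these placeholder numbers the usage-based plan comes out ahead ($12,000 against $14,000), but double the volume to 400,000 images and it rises to $22,000 while the subscription stays flat. That crossover is why the right model depends so heavily on how much data you expect to label.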

Can One Platform Handle Both Images and Text?

Yes, many can! Modern platforms are often built to be multimodal, which is just a technical way of saying they can handle different kinds of data—images, text, audio, video—all under one roof. This is a huge benefit for complex AI projects that rely on information from multiple sources.

Think about an autonomous vehicle. To navigate the world, it has to understand:

- Images from its cameras to spot pedestrians and read signs.

- LiDAR data (3D point clouds) to judge distances to other cars.

- Audio from microphones to hear an approaching ambulance siren.

A good multimodal platform lets a team annotate all of this data in one place, which helps keep everything consistent and organized.

That said, some platforms are specialists. You might find a tool that's incredible for medical image annotation, packed with features for that specific niche, but it might be clunky for labeling text. Before you sign on the dotted line, make sure any platform you're considering doesn't just support all your data types but actually excels at annotating each one with the precision you need.

Ready to fuel your AI projects with high-quality, accurately labeled data? Zilo AI offers a full spectrum of data annotation services and strategic manpower solutions to accelerate your growth. Whether you need to scale your team or enhance model accuracy, we provide reliable, end-to-end solutions. Learn more about how we can help.