Let's be real for a moment. When most people hear "data quality," their eyes glaze over. It sounds like a technical headache for the IT department, not something the C-suite needs to worry about. But what if I told you that bad data is silently killing your bottom line and sabotaging your big-ticket AI projects?

Getting data quality right isn't about running a one-off cleanup script. It’s about building a living, breathing system to proactively assess, cleanse, and govern your data. Only then does it transform from a liability into your most powerful asset for making smart decisions.

The Hidden Tax of Bad Data on Your Business

The true cost of poor data quality goes far beyond server errors and system crashes. It’s a silent tax on your entire organization, paid in missed opportunities, frustrated customers, and squandered resources. When you can't trust your data, every department feels the pain. Marketing blows the budget targeting the wrong people, sales wastes time chasing ghosts, and leadership makes critical decisions based on a funhouse mirror reflection of reality.

This isn't some abstract concept. The financial bleeding is real, and it’s significant. By some widely cited industry estimates, organizations lose 20-30% of their revenue to the fallout from data errors. That's a massive drain, often stemming from issues that seem minor on the surface, like duplicate customer records that double your marketing spend or incomplete product specs that grind your supply chain to a halt.

Real-World Fallout from Flawed Data

Forget the spreadsheets for a second and think about the real-world damage. We've all seen it happen.

- AI Projects That Crash and Burn: You spend a fortune on a shiny new AI model, but it was trained on garbage data. The result? Flawed predictions, a total loss of investment, and a deep-seated distrust of any future tech initiatives.

- Embarrassing Customer Experiences: Ever sent a "Welcome!" email to a ten-year loyal customer? Or a special offer for a product they just returned? These aren't just mistakes; they're direct hits to your brand's credibility.

- Catastrophic Strategic Blunders: Imagine your executive dashboard is showing skewed sales figures. You might end up pouring money into a failing product line or completely missing the boat on a breakout market trend, all because the foundational data was wrong.

The problem is only getting bigger. With global data projected to swell to 181 zettabytes by 2025, the pressure to get it right is immense. Poor data quality drains the U.S. economy of an estimated $3.1 trillion every single year. It's no wonder that 95% of businesses now see data quality as absolutely essential to their success. You can explore more about the growing role of AI in solving these data challenges on Techment.com.

Making the Shift: From a Chronic Problem to a Strategic Advantage

The best-run companies I've worked with have made a critical mental shift. They've stopped treating data cleanup as a frantic, one-time fix. Instead, they embrace data quality as an ongoing discipline—a core business function that drives growth and fuels innovation.

The journey starts by understanding the foundational pillars of a robust data quality program. The rest of this guide is your roadmap, built on these four essential components.

The Four Pillars of Data Quality Improvement

To build a lasting data quality framework, you need to focus on four distinct but interconnected areas. Think of them as the legs of a table—if one is weak, the whole structure becomes unstable.

| Pillar | Primary Goal | Key Activities |
|---|---|---|
| Data Profiling | Get a clear, honest picture of your data's current state. | Analyzing data for completeness, accuracy, and consistency; finding outliers and strange patterns. |
| Data Cleansing | Fix, standardize, and remove the "bad" data you've found. | Standardizing formats, merging duplicate records, and intelligently handling missing values. |
| Data Governance | Establish clear rules and accountability for your data. | Defining who owns what data, setting quality standards, and creating policies for data entry. |
| Data Monitoring | Proactively keep your data clean over the long term. | Setting up automated quality checks, tracking key metrics, and alerting teams to new problems. |

Mastering these four pillars is the key to turning your data from a messy liability into a reliable, strategic asset that gives you a genuine competitive edge.

Building Your Data Quality Framework

Alright, let's get practical. Moving from just talking about data quality to actually doing something about it requires a real plan. Think of a data quality framework as your blueprint—a structured system that lays out how you'll define, measure, and enforce data standards across the entire organization.

This isn't just another document to file away. It's a shared commitment to stop treating data as a digital byproduct and start treating it like the valuable asset it is.

Without a framework, you’re just playing whack-a-mole. Teams work in their own little worlds, fixing problems as they pop up, which often just creates new issues for someone else down the line. A solid framework brings order to that chaos. It establishes a common language and clear expectations for what "good data" actually means for your business. This alignment is the first, most crucial step toward building a true culture of quality.

Defining Your Quality Metrics

So, where do you start? You have to define what quality actually means in your context. Vague goals like "improving accuracy" are essentially useless because you can't measure them. Your framework needs to turn those high-level business needs into concrete, measurable data quality dimensions.

I always recommend starting with these core dimensions:

- Accuracy: Does the data reflect reality? Is the customer's address in your system their actual, current address?

- Completeness: Are all the critical pieces of information there? A customer record might be technically accurate, but if it’s missing a phone number or email, its value plummets.

- Consistency: Is your data the same everywhere it appears? It’s a classic problem: your CRM has "John Smith," but your billing system has "J. Smith." These small discrepancies cause big headaches.

- Timeliness: Is the data ready when you need it? Stale data can be just as misleading as wrong data, leading to missed opportunities or flawed decisions.

Once you nail these down, you have a scorecard. You can use it to grade your datasets and, more importantly, track your improvement over time. This foundational work is what makes smarter, more confident choices possible. For a deeper dive into how this connects to better business outcomes, our guide on data-driven decision-making is a great resource.
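
To make the scorecard idea concrete, here is a minimal sketch in plain Python. The sample records, field names, and the 365-day freshness window are all assumptions made up for illustration. Accuracy is left out on purpose, since scoring it needs a trusted external source to compare against.

```python
from datetime import date

# Hypothetical sample records; field names are illustrative only.
crm_records = [
    {"id": 1, "name": "John Smith", "email": "john@example.com", "updated": date(2024, 5, 1)},
    {"id": 2, "name": "Ana Diaz", "email": None, "updated": date(2021, 1, 15)},
    {"id": 3, "name": "Li Wei", "email": "li@example.com", "updated": date(2024, 4, 20)},
]
# The same customers as they appear in a second (billing) system.
billing_names = {1: "J. Smith", 2: "Ana Diaz", 3: "Li Wei"}


def completeness(records, field):
    """Share of records where `field` is present and non-empty."""
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def consistency(records, other_names):
    """Share of records whose name matches the other system exactly."""
    matches = sum(1 for r in records if other_names.get(r["id"]) == r["name"])
    return matches / len(records)


def timeliness(records, as_of, max_age_days):
    """Share of records updated within `max_age_days` of `as_of`."""
    fresh = sum(1 for r in records if (as_of - r["updated"]).days <= max_age_days)
    return fresh / len(records)


scorecard = {
    "completeness(email)": completeness(crm_records, "email"),
    "consistency(name)": consistency(crm_records, billing_names),
    "timeliness(365d)": timeliness(crm_records, date(2024, 6, 1), 365),
}
for metric, score in scorecard.items():
    print(f"{metric}: {score:.0%}")
```

Re-running the same functions on a schedule and storing the results is one simple way to track improvement over time.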

Establishing Ownership and Accountability

A framework is just a piece of paper if no one is responsible for it. This is where data stewardship becomes non-negotiable. You absolutely have to assign clear ownership for different data domains.

Here’s how that typically breaks down:

- Data Owners are senior leaders—think a VP of Sales who is ultimately on the hook for the quality of all customer data. They are accountable for the asset.

- Data Stewards are your subject-matter experts on the ground. They’re responsible for the day-to-day work: defining the rules, monitoring quality, and ensuring the standards are actually met.

This isn't just theory. We've seen this work time and again. A great example is when Optum created its Data Quality Assessment Framework (DQAF) for the healthcare industry. By implementing it at Alberta Health Services, they established a clear system for improving patient data. The result? Within just one year, they saw major improvements in managing patients with chronic conditions because the data was finally reliable enough for proactive decision-making.

Pro Tip: Create a Data Dictionary. Think of it as the official instruction manual for your data. It’s a central document that spells out every data element: its definition, required format, meaning, and business rules. Without one, you’re practically inviting inconsistent data entry and misinterpretation.
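
A data dictionary is most useful when the same document that people read can also be checked by a machine. The sketch below shows one possible shape, assuming a small Python dictionary. The element names, formats, and rules are hypothetical examples, not a standard.

```python
import re

# Hypothetical data dictionary: element names, formats, and rules are
# illustrative, not taken from any real system.
DATA_DICTIONARY = {
    "customer_email": {
        "definition": "Primary contact email for the customer",
        "required": True,
        "pattern": r"[^@\s]+@[^@\s]+\.[^@\s]+",
    },
    "zip_code": {
        "definition": "US postal code for the shipping address",
        "required": True,
        "pattern": r"\d{5}(-\d{4})?",
    },
    "phone": {
        "definition": "Contact phone number, digits only",
        "required": False,
        "pattern": r"\d{10}",
    },
}


def check_record(record):
    """Return a list of human-readable violations for one record."""
    problems = []
    for field, spec in DATA_DICTIONARY.items():
        value = record.get(field)
        if value in (None, ""):
            # Blank values only matter when the element is mandatory.
            if spec["required"]:
                problems.append(f"{field}: missing required value")
            continue
        if not re.fullmatch(spec["pattern"], value):
            problems.append(f"{field}: '{value}' does not match required format")
    return problems


print(check_record({"customer_email": "ana@example.com", "zip_code": "9021"}))
```

Keeping the definition text next to the rule means stewards can update both in one place.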

By building this structure of ownership, you transform data quality from some vague IT problem into a shared business responsibility. Everyone knows their role, understands the standards, and has the authority to protect one of your company's most important assets.

Alright, let's get into the nitty-gritty. This is where you roll up your sleeves and really start working with the data. Before you can even think about fixing anything, you have to understand what you're dealing with. This first crucial step is called data profiling.

Think of it like a mechanic running diagnostics on a car before touching a wrench. Profiling involves a deep dive into your datasets to get a real sense of their health and structure. You’ll be running analyses to uncover all the hidden quirks—unexpected null values, wonky formatting, and bizarre outliers that could point to much bigger problems. Skipping this step is like trying to navigate a new city without a map; you’re just guessing.

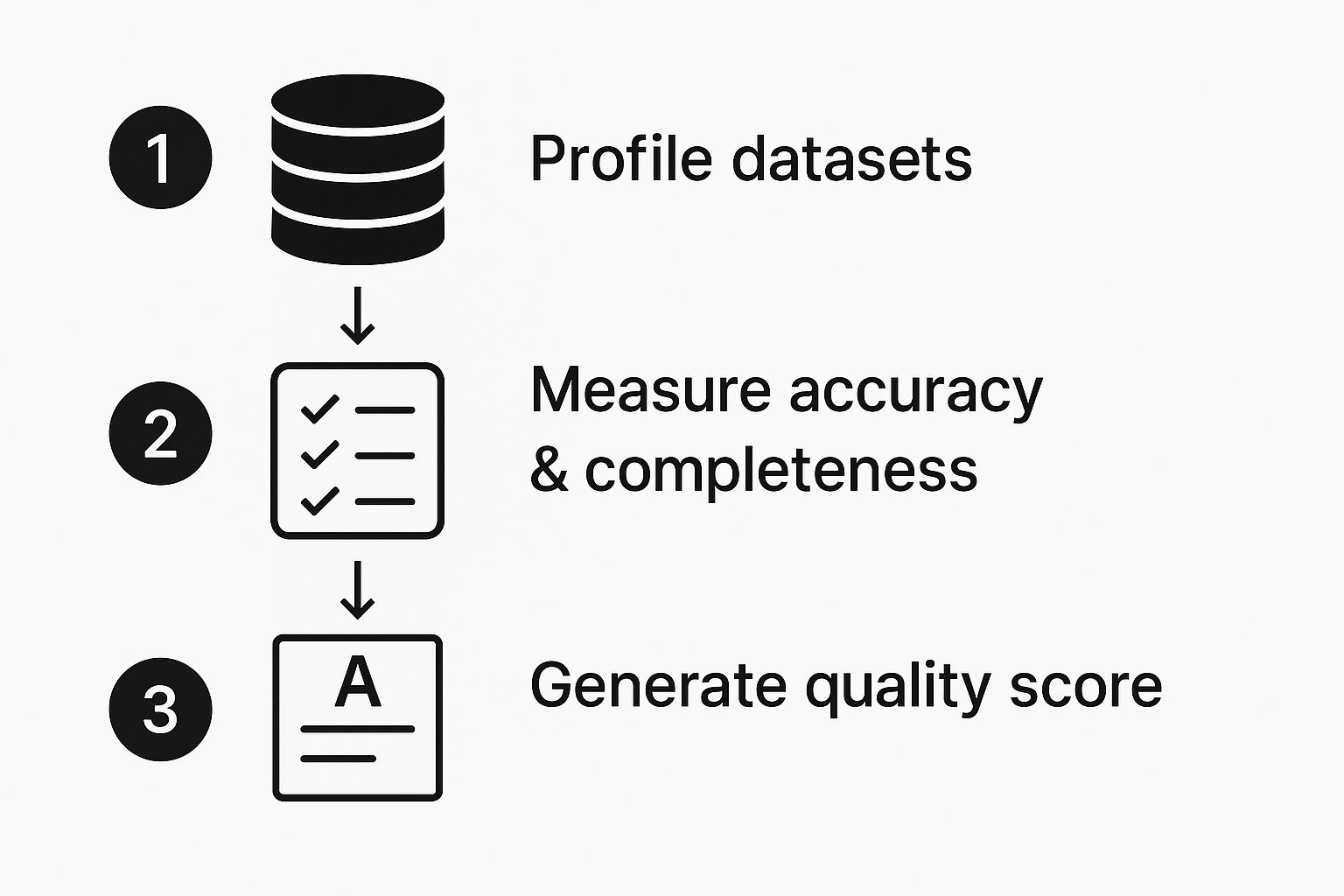

This visual breaks down a simple, repeatable workflow for getting a handle on your data's condition.

Moving from profiling to measurement gives you a solid, quantifiable baseline. You'll know exactly where your data stands before you start making any changes.
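
For a feel of what a basic profiling pass looks like, here is a small sketch using only the Python standard library. The column values are invented. The outlier check uses the common 1.5 x IQR rule of thumb, which is one reasonable choice among several and not something a dedicated profiling tool is guaranteed to use.

```python
import statistics
from collections import Counter


def profile_column(values):
    """Summarize one column: nulls, distinct values, and numeric outliers."""
    non_null = [v for v in values if v not in (None, "")]
    report = {
        "rows": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(set(non_null)),
        "most_common": Counter(non_null).most_common(1),
    }
    numeric = [v for v in non_null if isinstance(v, (int, float))]
    if len(numeric) >= 4:
        # Tukey's rule: flag values more than 1.5 * IQR outside the quartiles.
        q1, _, q3 = statistics.quantiles(numeric, n=4)
        spread = 1.5 * (q3 - q1)
        report["outliers"] = [v for v in numeric if v < q1 - spread or v > q3 + spread]
    return report


# Hypothetical order totals, with a missing value and a suspicious entry.
order_totals = [42.0, 38.5, None, 45.0, 40.0, 39.5, 4100.0, 41.0]
print(profile_column(order_totals))
```

The report gives you the quantifiable baseline: how many nulls, how many distinct values, and which entries deserve a second look.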

Mastering Common Data Cleansing Workflows

Once your profiling work has shined a light on the problems, it’s time for data cleansing. This is the hands-on process of fixing, standardizing, and getting rid of inaccurate, incomplete, or duplicate information. The goal here isn't just one-time fixes; it's about building repeatable workflows that keep your data clean over time.

Here are a few of the most common—and impactful—cleansing tasks I see every day:

- Standardization: This is all about making sure your data speaks the same language. Take address data, for instance. You’ll almost always find "Street," "St.," and "Str." all mixed up in the same column. A good standardization script can automatically convert every variation to a single, consistent format like "St." This might seem small, but it's essential for everything from accurate shipping to reliable location analytics.

- Deduplication: Duplicate records are a silent killer. They inflate your marketing spend, confuse your sales reps, and muddy your analytics. Smart deduplication is more than just finding exact matches. It uses fuzzy matching algorithms to spot near-duplicates—think "John Smith" and "Jonathan Smith" at the same company—so you can merge them intelligently without losing valuable context.

- Handling Missing Values: A column riddled with empty cells can bring any analysis to a screeching halt. Your first instinct might be to just delete those rows, but that’s usually a mistake because you could be throwing away perfectly good data. You need a strategy. For numerical data, you might fill in the blanks with the mean or median of the column. For more complex situations, you can use imputation models that predict the missing value based on other information in the record.
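
The three tasks above can each be sketched in a few lines of standard-library Python. Treat this as an illustration under assumptions: the suffix mapping, the 0.8 similarity threshold, and the sample names are all made up, and `difflib` is a simple stand-in for the fuzzy matching a dedicated tool would provide.

```python
import re
import statistics
from difflib import SequenceMatcher

# Hypothetical mapping of street-suffix variants to one house style.
SUFFIXES = {"street": "St.", "st": "St.", "str": "St."}


def standardize_suffix(address):
    """Rewrite known street-suffix variants to a single form."""
    def fix(match):
        word = match.group(0)
        # Look the word up without its trailing period, ignoring case.
        return SUFFIXES.get(word.rstrip(".").lower(), word)
    return re.sub(r"[A-Za-z]+\.?", fix, address)


def similarity(a, b):
    """Ratio in [0, 1] of how alike two strings are, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def find_near_duplicates(contacts, threshold=0.8):
    """Pair up contacts at the same company whose names are similar."""
    pairs = []
    for i, first in enumerate(contacts):
        for second in contacts[i + 1:]:
            same_company = first["company"] == second["company"]
            if same_company and similarity(first["name"], second["name"]) >= threshold:
                pairs.append((first["name"], second["name"]))
    return pairs


def fill_missing_with_median(values):
    """Replace None entries with the median of the observed values."""
    median = statistics.median(v for v in values if v is not None)
    return [median if v is None else v for v in values]


contacts = [
    {"name": "John Smith", "company": "Acme"},
    {"name": "Jonathan Smith", "company": "Acme"},
    {"name": "Ana Diaz", "company": "Acme"},
]
print(standardize_suffix("12 Main Street"))
print(find_near_duplicates(contacts))
print(fill_missing_with_median([10, None, 30]))
```

Note that the pairwise comparison is quadratic in the number of contacts, so on a large list you would typically group records first (for example by company or postal code) and compare only within each group. Flagged pairs are candidates for review, not automatic merges.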

When it comes to AI, the quality of your cleansing and labeling is everything. As many businesses are discovering, proper data annotation is critical for AI startups in 2025, because meticulously prepared data is the bedrock of any reliable machine learning model.

Choosing the Right Tools for the Job

The good news is you don't have to do all of this by hand. The right tool for you will really depend on the scale of your data, your budget, and the technical skills on your team.

Key Takeaway: Start with the data that has the biggest impact on your business. Fixing errors in your main customer contact list is going to give you a much faster return than cleaning up weblog data from five years ago. Prioritize ruthlessly.

For smaller projects, you might get by with the built-in functions in your ETL (Extract, Transform, Load) tools or even some clever formulas in Excel. But as your data volume grows, you'll quickly hit a wall. That’s when specialized data quality software becomes a lifesaver. These platforms come with pre-built rules for common cleansing tasks, slick profiling dashboards, and automated monitoring that can save your team hundreds of hours.

Ultimately, it’s a trade-off. Using your existing ETL tools is often cheaper upfront but requires more custom scripting and maintenance. Specialized tools get you to the finish line faster but come with a higher price tag. The best choice is the one that fits your current workflow and can grow with you as your data quality efforts mature.

Putting Practical Data Governance in Place

So, you’ve put in the hard work to clean up your data. That’s a huge win. But how do you stop the mess from creeping back in?

This is where data governance comes into play. Forget the intimidating corporate jargon for a moment. At its core, data governance is simply about establishing clear rules of the road and making sure someone is accountable for your company’s data. It’s the human element that makes or breaks data quality in the long run.

Without some form of governance, you'll inevitably fall back into old habits. All that clean data you fought for will slowly erode, and you'll be right back where you started. Governance shifts data quality from being a frantic, one-off cleanup project to a proactive, ongoing part of your company culture. It’s about creating smart, simple policies that stop bad data at the source.

Figure Out Who Owns What

The first, most crucial step is to answer a very simple question: who is responsible for what? Ambiguity is the absolute enemy of data quality. When everyone thinks someone else is in charge of a dataset, the reality is that no one is. This is where defining roles is non-negotiable.

From my experience, the most effective way to handle this is by creating a straightforward data stewardship program. It doesn't have to be complicated.

- Data Owners: Think of these as the high-level guardians. They are typically senior business leaders. For example, the VP of Sales is the Data Owner for all sales pipeline and CRM data. They are ultimately accountable for its quality and how it’s used to drive strategy.

- Data Stewards: These are your on-the-ground experts. A sales operations manager might be the Data Steward for the lead contact database. They are responsible for the day-to-day work—defining data entry rules, monitoring for errors, and ensuring the data is fit for purpose.

Once these roles are assigned, you have a clear line of sight. When an issue with lead data pops up, everyone knows exactly who has the authority and the responsibility to get it fixed.

Expert Tip: Data governance isn't about creating more red tape. It’s about empowering the right people with the authority to protect data quality within their own areas of expertise. This actually makes you more agile, not less.

Create Simple, Effective Rules

With clear owners in place, the next step is to agree on some simple, easy-to-follow rules for your data. This is where you document your standards for how data should be entered, updated, and used. Think of them as guardrails that prevent common mistakes from happening in the first place.

For instance, a simple policy might dictate that all new customer addresses must include a ZIP code and be validated against a postal service database. Another might define the mandatory fields required to create a new product SKU in your inventory system.
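
Policies like these are easiest to enforce when they run at the point of entry. Here is a minimal sketch of both example rules. The ZIP code set is a stand-in for a real postal service lookup, and the list of mandatory SKU fields is hypothetical.

```python
import re

# Stand-in for a postal-service lookup; a real system would call an
# address-validation service here. These ZIP codes are sample values.
KNOWN_ZIP_CODES = {"10001", "60601", "94105"}

# Hypothetical mandatory fields for creating a product SKU.
REQUIRED_SKU_FIELDS = ("sku", "name", "unit_price", "category")


def validate_new_address(address):
    """Reject addresses with a missing, malformed, or unknown ZIP code."""
    zip_code = (address.get("zip") or "").strip()
    if not zip_code:
        return ["ZIP code is required"]
    if not re.fullmatch(r"\d{5}", zip_code):
        return [f"ZIP code '{zip_code}' is not five digits"]
    if zip_code not in KNOWN_ZIP_CODES:
        return [f"ZIP code '{zip_code}' not found in postal reference data"]
    return []


def validate_new_sku(product):
    """List every mandatory field that is absent or blank."""
    return [
        f"{field} is required"
        for field in REQUIRED_SKU_FIELDS
        if product.get(field) in (None, "")
    ]


print(validate_new_address({"street": "1 Market St.", "zip": "94105"}))
print(validate_new_sku({"sku": "A-100", "name": "Widget"}))
```

An empty list means the record passes. Anything else can be shown to the person entering the data so the problem gets fixed at the source.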

The key is to start small. Don’t try to write a 300-page manual no one will ever read. Focus on your most critical data first and build from there. For a deeper dive into this process, these essential data governance best practices for SMBs are a great resource.

By establishing these clear policies and empowering your team, you create a sustainable culture where everyone values and protects high-quality data.

Automating Data Quality With AI and Modern Tools

Let's be honest: manually vetting your data is a losing battle. In a business environment where data streams in constantly, periodic human spot-checks just can't keep pace. This is where modern tools and automation become your greatest allies in the quest for reliable data.

Technology can handle the tedious, time-consuming work of monitoring, validating, and flagging data issues in real time. It's not just about moving faster. It's about fundamentally changing your approach from a reactive, "let's fix this mess" model to a proactive defense system that protects your data integrity around the clock.

Shifting to Real-Time Monitoring and Validation

The true unlock with automation is weaving quality checks directly into your data pipelines. Why wait for a quarterly audit to discover your customer data is a wreck? You can—and should—catch errors the moment they happen.

Think about how this works in practice. A new lead enters your CRM. Instead of just sitting there, an automated workflow can immediately kick in to:

- Validate the email address to confirm it's real and can receive messages.

- Standardize the physical address to meet postal service formatting requirements.

- Enrich the record by appending valuable data like company size or industry.

This is a cornerstone of smart business process automation, where small, automated actions create massive downstream improvements in both efficiency and data reliability. A great real-world parallel is seeing how invoice processing automation slashes human error, offering a clear example of how modern tools directly boost data quality.
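
The new-lead workflow described above might look something like this in code. It is a simplified sketch: the email check is syntax-only (confirming that a mailbox can actually receive messages needs an external verification service), the address formatting is a placeholder for real postal standardization, and `COMPANY_DIRECTORY` is a hypothetical enrichment source.

```python
import re

# Hypothetical enrichment source keyed by email domain; a real workflow
# would query a firmographic data provider instead.
COMPANY_DIRECTORY = {
    "acme.example": {"industry": "Manufacturing", "company_size": "201-500"},
}


def validate_email(email):
    """Syntax-only check; confirming deliverability needs an external service."""
    return re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", email or "") is not None


def format_postal_address(address):
    """Collapse whitespace and upper-case, a simplified stand-in for postal formatting."""
    return " ".join((address or "").split()).upper()


def enrich(lead):
    """Append company attributes when the email domain is known."""
    domain = lead["email"].split("@")[-1].lower()
    return {**lead, **COMPANY_DIRECTORY.get(domain, {})}


def process_new_lead(lead):
    """Run validate -> standardize -> enrich, flagging leads that fail validation."""
    if not validate_email(lead.get("email")):
        return {**lead, "status": "rejected", "reason": "invalid email"}
    cleaned = {**lead, "address": format_postal_address(lead.get("address"))}
    return {**enrich(cleaned), "status": "accepted"}


print(process_new_lead({"email": "pat@acme.example", "address": " 12  main st. "}))
print(process_new_lead({"email": "not-an-email", "address": "1 Elm Rd"}))
```

Rejected leads keep their original values plus a reason, so a steward can review them instead of losing them silently.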

The Power of AI in Anomaly Detection

Rules-based automation is fantastic for catching known issues—the problems you can anticipate. But what about the ones you can't? That’s where artificial intelligence and machine learning truly shine.

AI models are brilliant at learning the normal rhythms and relationships within your datasets. Once they understand what "normal" looks like, they can flag complex anomalies that even a seasoned analyst might miss. It’s like having an incredibly sharp watchdog that never needs a break.
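
To show the "learn normal, then flag what deviates" idea without a full machine learning stack, here is a deliberately simple statistical stand-in. It is not an AI model: it learns a baseline from the median and the median absolute deviation, then flags values with a large modified z-score. The order counts are invented, and the 3.5 cutoff is a commonly used rule of thumb you would tune for your own data.

```python
import statistics


def fit_baseline(history):
    """Learn what 'normal' looks like: the median and the typical deviation from it."""
    center = statistics.median(history)
    mad = statistics.median(abs(x - center) for x in history)
    return center, mad


def is_anomaly(value, baseline, threshold=3.5):
    """Flag values whose modified z-score exceeds the threshold."""
    center, mad = baseline
    if mad == 0:
        # No variation in the history, so any different value stands out.
        return value != center
    return abs(0.6745 * (value - center) / mad) > threshold


# Hypothetical daily order counts used to learn the baseline.
daily_orders = [120, 118, 125, 130, 122, 119, 127, 124]
baseline = fit_baseline(daily_orders)
for todays_count in (126, 12):
    print(todays_count, is_anomaly(todays_count, baseline))
```

A real ML-based monitor would also learn seasonality and relationships between columns, but the shape is the same: fit on history, score new data, alert on outliers.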

This kind of intelligent monitoring tackles a growing crisis of confidence head-on. Consider this: industry research shows that a staggering 67% of leaders don't fully trust their organization's data for decision-making. At the same time, 64% cite data quality as their number one challenge.

Key Takeaway: Automation and AI aren’t just about speed; they're about rebuilding trust. When your systems can vouch for data being clean and reliable from the moment of creation, you lay a solid foundation for confident, data-driven decisions that push the business forward. In the long run, this is the only sustainable way to win the data quality war.

Answering Your Top Data Quality Questions


Moving from a high-level strategy to the day-to-day work is where the real challenges pop up. Once you leave the conference room, you’re faced with practical questions about budgets, resources, and where to even begin.

Let's tackle some of the most common hurdles I see teams face when they start getting serious about data quality. We'll get into everything from making a business case to leadership to figuring out the first steps for a small team that feels stretched thin.

How Do I Justify the Cost to Leadership?

Getting the budget approved is often the first, and biggest, roadblock. The key is to stop talking like an IT specialist and start speaking the language of the business: value and risk. Forget "data cleansing"—talk about "eliminating wasted marketing spend" or "preventing costly compliance errors."

You have to show them the money. A quick analysis can be incredibly persuasive.

- Pinpoint the cost of duplicates. Let’s say you have a 5% duplicate rate in your 100,000-contact customer database. If each direct mail piece costs $1, you're throwing away $5,000 on every single campaign. That gets attention.

- Show them the missed revenue. If inaccurate lead data causes a 10% failure rate in your sales team's outreach efforts, what's the real-world cost in lost deals? Translate percentages into potential profit.

- Frame it as risk management. In many industries, poor data isn't just inefficient—it can lead to serious fines. Position the investment as a non-negotiable step to protect the business.
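
The arithmetic behind the first two points fits in a tiny calculator you can adapt. The duplicate figures come straight from the example above. For the missed-revenue estimate, only the 10% failure rate is from the text; the outreach volume, win rate, and deal value are placeholders to swap for your own numbers.

```python
def duplicate_mailing_waste(contacts, duplicate_rate, cost_per_piece):
    """Money spent per campaign on mail sent to duplicate records."""
    return contacts * duplicate_rate * cost_per_piece


def lost_deal_value(outreach_attempts, failure_rate, win_rate, avg_deal_value):
    """Expected revenue lost when bad lead data makes outreach fail."""
    return outreach_attempts * failure_rate * win_rate * avg_deal_value


# Figures from the duplicate example above: 100,000 contacts, 5% duplicates, $1 per piece.
print(duplicate_mailing_waste(100_000, 0.05, 1.00))

# The 10% failure rate is from the text; the other inputs are placeholders
# to replace with your own sales numbers.
print(lost_deal_value(outreach_attempts=2_000, failure_rate=0.10,
                      win_rate=0.05, avg_deal_value=8_000))
```

Putting the inputs in front of leadership, not just the totals, lets them challenge the assumptions and still arrive at a number they believe.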

When you connect poor data directly to dollars and cents, it stops being an abstract technical issue and becomes a clear business problem that leadership can’t ignore.

Where Should a Small Team Start?

If you're on a small team, the thought of a company-wide data quality project is completely overwhelming. My advice? Don't even try. You don't need to boil the ocean.

My Two Cents: Find one critical business process that is clearly broken because of bad data. Is it sales lead follow-up? Customer onboarding? Pick the area that causes the most visible pain and make it your pilot project.

Concentrate all your initial energy on a single, high-impact dataset. Your core customer list is usually a great place to start. Profile it to find the biggest problems, clean it up, and set up a few simple rules to keep it that way. This approach gives you a quick, measurable win that builds momentum and proves the value of expanding your efforts.

How Do We Choose the Right Tools?

The market for data quality tools is massive, but you don’t automatically need the most complex or expensive platform. The right choice depends entirely on your team's skills, your budget, and the specific problems you're trying to solve.

For simpler jobs, a few scripts or the built-in features of your existing ETL tools might be all you need. But as your data grows more complex, dedicated tools are a game-changer, automating profiling, validation, and monitoring.

When you're looking at different options, focus on how well a tool handles your specific data types and how easily it can plug into the systems you already use. For a deeper dive into this and other common concerns, checking out a detailed frequently asked questions (FAQs) page can provide a lot of clarity.

At Zilo AI, we provide the AI-ready data and specialized manpower to fuel your most ambitious projects. From meticulous data annotation to strategic staffing, we build the foundation for your success. Discover how our end-to-end solutions can accelerate your growth at https://ziloservices.com.