Data labeling is the surprisingly human part of machine learning. It's the process of taking raw, unprocessed data—like a mountain of images, audio clips, or text documents—and adding meaningful tags to it. Think of it like creating flashcards for an AI. Each piece of data is the question, and the label is the answer.

This labeled data is the bedrock for training any AI model. Without it, you have a powerful engine with no fuel.

The Cornerstone of Artificial Intelligence

Imagine trying to teach a child to identify a cat. You wouldn't just describe it; you'd point to different cats in pictures and in real life, saying, "That's a cat." This direct association is exactly what data labeling does for an AI. It provides the essential context that turns a chaotic flood of information into a structured lesson plan that an algorithm can actually learn from.

Without this step, even the most sophisticated algorithms are essentially flying blind. They have the capacity to learn, but no textbook to study. Labeled data serves as the ground truth—the gold standard of facts that the model uses to test its own understanding and get progressively smarter.

Why Quality Labeled Data Is So Important

The old saying "garbage in, garbage out" has never been more true than in machine learning. The performance of your AI model is a direct reflection of the data you feed it. If the labels are sloppy, inconsistent, or just plain wrong, you'll end up with a confused model that makes unreliable predictions.

Meticulous data labeling isn't just a nice-to-have; it's a must-have for several key reasons:

- Model Accuracy: Precise labels train the model to make fewer errors. Simple as that.

- Reduced Bias: A thoughtfully labeled dataset is your first line of defense against embedding harmful human biases into your AI system.

- Reliable Performance: Consistency is key. Well-labeled data ensures your model behaves predictably when it encounters new, real-world information.

- Faster Training: High-quality data can actually help your model learn the right patterns more efficiently, shortening the training cycle.

It’s no surprise that the demand for high-quality data labeling is skyrocketing. The global market, valued at around USD 4.87 billion in 2025, is on track to hit an incredible USD 29.11 billion by 2032. That's a compound annual growth rate of nearly 30%, showing just how central this work is to the entire AI industry. You can discover more insights about the data labeling market's rapid expansion and see what it means for the future of AI.

In essence, data labeling is the bridge between raw data and machine intelligence. It's the patient, detailed work that translates the world's complexity into a language that algorithms can comprehend, making applications like self-driving cars, medical image analysis, and voice assistants possible.

Key Data Labeling Concepts at a Glance

To really get a handle on this topic, it helps to break down the core ideas into simple terms. This table provides a quick summary of the fundamental concepts we've just covered.

| Concept | Simple Explanation | Why It Matters for AI |

|---|---|---|

| Raw Data | Unprocessed information (images, text, etc.) without any context. | This is the starting material, but it's useless to an AI model on its own. |

| Data Labeling | The act of adding descriptive tags or annotations to raw data. | This process creates the "textbook" from which the AI will learn. |

| Labeled Data | The finished product: raw data plus its corresponding labels. | This is the fuel for training, testing, and validating machine learning models. |

| Ground Truth | The verified, accurate set of labels used as a benchmark for quality. | The model uses this to check its work and measure its own accuracy. |

Think of these concepts as the building blocks. Understanding them makes it much easier to grasp the more advanced techniques and workflows we'll explore next.

Exploring the Core Types of Data Labeling

Data labeling for machine learning isn't a one-size-fits-all job. Think of it as a collection of specialized techniques, where the right approach depends entirely on your data and what you want your AI model to accomplish.

Let's walk through the most common types with some real-world examples. Getting a handle on these methods is your first step toward building a truly powerful and accurate AI. Each one provides a different kind of lesson for your algorithm, teaching it how to see, read, or hear the world in a structured way.

Image and Video Annotation

This is probably the most widely recognized form of data labeling, and for good reason. It’s the magic behind everything from self-driving cars to cutting-edge medical diagnostics. At its core, it’s all about adding meaningful tags to visual data.

- Bounding Boxes: This is the simplest method. An annotator just draws a rectangle around an object of interest. If you're an e-commerce platform, you might draw boxes around every "handbag" or "shoe" in an image to train a product recognition model. Simple and effective.

- Polygonal Segmentation: But what about objects with funky, irregular shapes? A simple box just won't cut it. That's where polygonal segmentation comes in. It involves drawing a precise, multi-point outline around an object—like tracing the exact shape of a car or a pedestrian for an autonomous vehicle's perception system.

- Semantic Segmentation: This technique goes a step further by assigning a category to every single pixel in an image. Imagine it as a "color-by-numbers" game for your AI. Every pixel that's part of the "road" might be colored blue, "trees" get colored green, and "buildings" gray. This creates an incredibly detailed map for the model to learn from.

- Keypoint Annotation: This method is all about marking specific points of interest on an object. It's fantastic for understanding posture or structure, like labeling the joints on an athlete's body to analyze their movement for sports analytics.

By meticulously outlining and categorizing objects in images, we are essentially creating a visual dictionary for AI. This detailed machine learning data labeling process is what allows an algorithm to distinguish a stop sign from a pedestrian or a benign mole from a potentially cancerous one.

Text Labeling for Language Models

Text data is absolutely everywhere, from customer reviews and social media feeds to your company's internal documents. Labeling this information is how you teach an AI to understand the messy, nuanced world of human language.

Named Entity Recognition (NER) is a fundamental technique here. It’s all about identifying and categorizing key pieces of information within a block of text. For example, you could train an AI to scan news articles and automatically tag all mentions of "People," "Organizations," and "Locations."

Another critical type is Sentiment Analysis. This is where text gets classified as positive, negative, or neutral. Businesses lean on this heavily to get a pulse on customer feedback from reviews or social media, turning a flood of unstructured opinions into data they can actually act on.

Finally, there’s Text Categorization, which assigns a document to one or more predefined categories. A customer support system might use this to automatically route incoming emails to the "Billing," "Technical Support," or "Sales" departments based on their content. The accuracy of this labeling is non-negotiable and is a massive factor for success, especially for new companies. To dig deeper, check out our guide on why data annotation is critical for AI startups in 2025.

Audio Labeling

From the voice assistants in our pockets to sophisticated call center analytics, audio labeling is the key to training models that can understand spoken language.

The most common form here is Audio Transcription, which is simply the process of converting speech into written text. It’s the backbone of services like Siri and Alexa. Sometimes, you need even more detail, which is where Speaker Diarization comes in—it identifies who is speaking and when.

By turning unstructured sound into labeled, structured data, we give machines the ability to interact with us through voice. It’s a huge step toward more natural human-computer conversations, and it all starts with giving the AI the right "ground truth" to learn from.

Your Data Labeling Workflow From Start to Finish

Getting a data labeling project right isn't about a mad dash to tag everything in sight. It’s more like running a factory assembly line—a structured, repeatable process where every step builds on the last. The quality of your final product, the labeled dataset, depends entirely on how well each stage is executed.

You can break the whole lifecycle down into four distinct, yet connected, stages. When you think about your project this way, an overwhelming goal becomes a clear, actionable plan. This framework helps you sidestep the common traps that cause so many AI projects to stumble. Let's walk through it.

Stage 1: Data Collection and Preparation

Before you can even think about applying a single label, you need to get your raw materials in order. This first step is all about gathering the data you plan to label, whether that’s a collection of thousands of product photos, hours of recorded customer service calls, or a mountain of text documents. It’s like sourcing quality lumber before you start building a house—the better your raw materials, the better the final structure.

Once you have the data, it needs to be prepped. This means cleaning and standardizing it. You might be resizing images so they're all the same dimensions, removing duplicate entries, or filtering out corrupted files that can't be read. A little bit of housekeeping here saves you from major headaches and rework later on.

Stage 2: Creating Labeling Guidelines

This might be the single most important part of the entire process. Your labeling guidelines are the official rulebook for your annotators. Without a crystal-clear and detailed guide, you’re basically inviting chaos and inconsistency, which are poison for any machine learning model.

Your instruction manual needs to cover:

- Clear Definitions: What does each label or category actually mean? Be specific.

- Visual Examples: Show concrete "do this" and "don't do this" examples for every single label.

- Edge Case Instructions: You have to anticipate the weird stuff. For instance, how should an annotator label a car that’s half cut off at the edge of the frame? Settle these debates before they start.

Think of your guidelines as the constitution for your project. A well-written one ensures every annotator—whether they're sitting in the next cubicle or on the other side of the world—is following the same laws. That’s how you get consistent, high-quality results.

Stage 3: The Labeling Process

With clean data and solid rules in hand, the real work can begin. This is the phase where human annotators get into the specialized software and start applying tags based on your guidelines. The goal here isn't just speed; it's about maintaining accuracy over the long haul.

This can be a purely manual effort, or you can use AI-assisted tools that suggest labels for a human to review and approve. Whichever path you choose, keeping the lines of communication open with your labeling team is crucial for keeping the project moving and morale high.

Stage 4: Quality Assurance and Iteration

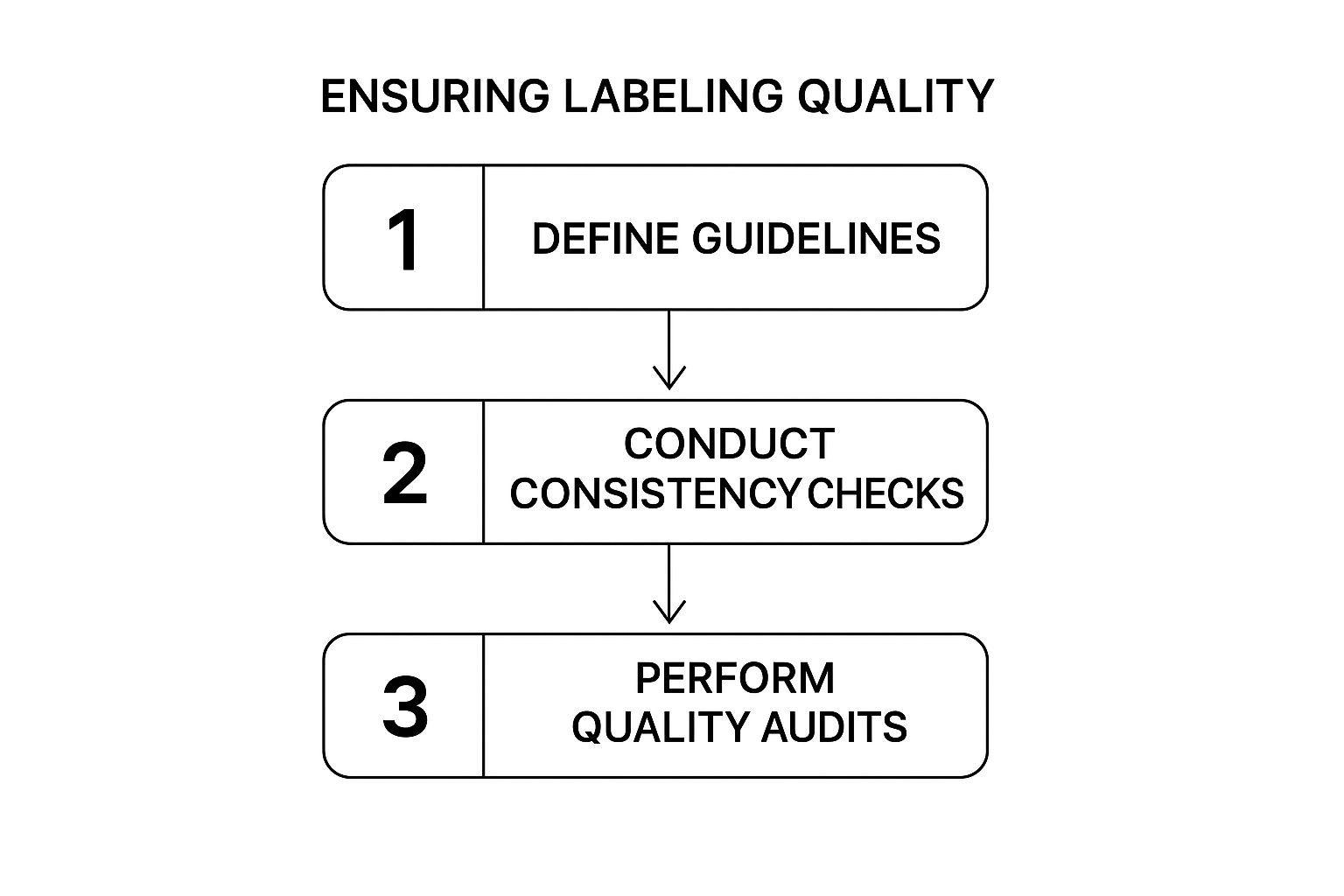

Labeling is never a "one-and-done" task. The final stage is a rigorous quality assurance (QA) process where you spot-check the work to see if it’s accurate and consistent with your guidelines. But this isn't just a final inspection—it’s a continuous feedback loop.

This is the core cycle that ensures your label quality stays high.

As the infographic shows, quality comes from a repeatable loop: you define the rules, check for consistency, and audit the results. Any mistakes found during QA get fixed, but more importantly, the insights from those mistakes are fed back into the labeling guidelines. This creates a cycle of continuous improvement that makes the entire project stronger over time.

Choosing Your Labeling Approach: In-House vs. Outsourcing

Once you've nailed down your workflow, you’ve hit a major fork in the road: who is actually going to do the labeling? This isn't a minor detail. Your choice here will directly shape your project's budget, timeline, and ultimately, the quality of your finished dataset.

You're essentially looking at three main paths, each with its own set of pros and cons. Think of this as less of a simple cost comparison and more of a strategic decision. You need to pick the model that best fits your company's strengths, security requirements, and long-term goals.

Let's break them down.

The In-House Team Model

This is the DIY route. You hire, train, and manage your own employees to handle all the data labeling. It gives you an incredible amount of control over the entire operation, from writing the annotation guidelines to overseeing the final quality checks.

- Maximum Control: Your team lives and breathes your project. They understand the nuances and goals on a deep level, which can translate into exceptional quality.

- Airtight Security: If you're working with sensitive data—think financial records or medical information—keeping it all behind your own firewall is a massive plus.

- High Upfront Costs: This is the big catch. Building a team comes with hefty price tags for salaries, benefits, training, and management. It's almost always the most expensive option to get started.

- Scalability Can Be a Grind: Need to suddenly ramp up for a big push or scale down during a lull? With an in-house team, that process can be slow and clunky.

This model really shines for companies working on long-term, mission-critical projects where top-notch security and deep domain expertise are absolute must-haves.

The Outsourcing Approach

Leaning on outside help for data labeling has become a go-to strategy for a reason. It lets companies tap into specialized skills and ready-made infrastructure without building it all from scratch. The market stats tell the story: outsourced providers accounted for a whopping 69% of all data labeling revenue in 2024.

It's a model that’s helping entire sectors scale up, especially fast-growing fields like healthcare, which is seeing growth at a compound annual rate of nearly 28%. If you're curious, you can discover more about the data labeling market trends to see just how dominant this approach has become.

When you decide to outsource, you generally have two flavors to choose from.

H3: Crowdsourcing Platforms

Think of these platforms as a massive, on-demand workforce. You get access to a global pool of freelancers who can tackle simple tasks at incredible speed and low cost. It’s a great fit for high-volume jobs that don't require specialized knowledge. The trade-off? Keeping quality consistent can be a real headache.

H3: Managed Service Providers

This is the "done-for-you" option. Partnering with a managed service provider like Zilo AI gives you a dedicated, expertly trained team that handles the entire labeling pipeline for you, complete with project management and built-in quality control.

A managed service provider acts like a true extension of your own team. They offer the perfect middle ground, blending deep expertise and reliability with the flexibility to scale your labeling efforts up or down on a dime.

Comparing Data Labeling Sourcing Models

Deciding between building an in-house team, using a crowd, or partnering with a managed service provider can feel overwhelming. Each model comes with its own unique blend of benefits and drawbacks related to cost, control, and scalability.

This table breaks down the key differences to help you see which approach aligns best with your project's specific needs.

| Factor | In-House Team | Crowdsourcing Platforms | Managed Service Providers |

|---|---|---|---|

| Quality Control | High | Low to Medium | High |

| Cost | High | Low | Medium |

| Scalability | Low | High | High |

| Data Security | Very High | Low to Medium | High |

| Speed | Slow to Moderate | Very Fast | Fast |

Ultimately, the best choice depends entirely on your priorities. If security is paramount and your needs are stable, an in-house team is a strong contender. If you just need a mountain of simple data labeled fast and cheap, crowdsourcing gets the job done.

But for most businesses looking for that sweet spot—a reliable balance of quality, speed, and scalable capacity—a managed service provider is often the most effective and efficient path forward.

7 Best Practices for High-Quality Data Labeling

Let's be blunt: the quality of your labeled data is the single biggest factor in your AI model's performance. High-quality labels lead to a reliable model. Low-quality labels—garbage in, garbage out—create an unreliable one.

So, how do you guarantee a solid foundation for your AI project? You need an operational playbook. These best practices transform data labeling from a subjective art into a disciplined, repeatable process that delivers accurate, valuable results every single time.

1. Create Crystal-Clear Instructions

The most common point of failure in any data labeling project is ambiguity. If 10 annotators interpret a rule in 10 different ways, your dataset is headed for disaster. Your labeling guidelines must be the single source of truth for everyone involved.

This document should be comprehensive and packed with visuals, leaving zero room for guesswork.

- Define Every Label: Don't just name categories. Describe them with specific, detailed criteria. What exactly separates an "urgent" customer ticket from a "high priority" one? Be precise.

- Show, Don't Just Tell: Include plenty of visual examples for each label, clearly pointing out correct and incorrect annotations. This is non-negotiable for computer vision projects.

- Hunt Down Edge Cases: Proactively look for tricky or unusual scenarios. What happens if an object is partially hidden? What if a product could fit into two different categories? Answering these questions upfront saves you from chaos later.

2. Establish a Robust Quality Assurance Protocol

You can't just hope labels are correct; you have to verify it. A strong quality assurance (QA) process is your best defense against human error and inconsistency. Think of it as a system of checks and balances that ensures the final data meets your strict standards.

A core QA technique is consensus scoring, often called inter-annotator agreement (IAA). Here, multiple annotators label the same piece of data without seeing each other's work. The labels are then compared. A high level of agreement is a great sign that your instructions are clear and the work is accurate.

This process isn't just about catching mistakes. It’s about figuring out why they happen. When you spot disagreements, it’s a golden opportunity to refine your guidelines and retrain your annotators, making the entire system smarter.

3. Implement a Human-in-the-Loop (HITL) Approach

Modern data labeling is rarely a purely manual effort anymore. The most efficient workflows create a powerful partnership between AI and human experts. This is what we call a Human-in-the-Loop (HITL) system.

In this model, an AI takes the "first pass," generating preliminary labels across a huge dataset. This is sometimes called pre-labeling or semi-automated labeling. Then, a human annotator steps in to do what humans do best: review, correct, and validate the AI's suggestions.

This approach brings some major wins:

- Massive Efficiency Gains: It dramatically cuts down the time humans spend on simple, repetitive tasks. This frees them up to focus their brainpower on the more complex and nuanced cases.

- Higher Accuracy: Human oversight ensures that subtleties missed by the AI are caught and corrected, leading to a much higher-quality final dataset.

- Continuous Improvement: The corrections made by humans are fed back into the AI model, helping it learn from its mistakes and become a better labeling assistant over time.

By combining the raw speed of automation with the precision of human judgment, you get the best of both worlds: scale and quality. Finding the right team is key to making this work, and you can see how experts handle these workflows by checking out the top 10 data annotation service companies in India 2025.

How AI Is Changing the Data Labeling Game

It’s a bit ironic, but the often tedious work of labeling data for machine learning is being completely reshaped by the very technology it enables. The old way—purely manual annotation—is quickly being replaced by a smarter partnership between human experts and AI. This new approach is making the whole data preparation process faster, more accurate, and massively more scalable.

This isn't about replacing people with machines. It's about giving human annotators a powerful assistant. AI-powered tools are now handling the most repetitive, mind-numbing parts of the job. This frees up the human experts to focus on what they do best: making nuanced judgments and catching subtle errors that still trip up an algorithm.

Pre-Labeling: The AI's First Draft

One of the biggest game-changers here is pre-labeling, sometimes called semi-automated labeling. You can think of it like an AI creating a "first draft" of the labels for your team. A model, which might be pre-trained on a huge public dataset, takes a first pass at your raw data and applies its best guess for the annotations.

For instance, an object detection model could automatically draw bounding boxes around every vehicle it identifies in a batch of street-view images. A human labeler then just has to review these suggestions. They can quickly confirm correct boxes, fine-tune the edges, or delete any that are wrong. This is worlds faster than drawing every single box from scratch, making each annotator dramatically more productive.

Active Learning: Working Smarter, Not Harder

Another brilliant technique is active learning. This method completely flips the traditional workflow on its head. Instead of having a person sift through thousands of images to find good examples, the model itself flags the data points it finds most confusing.

By pointing out the examples it's least certain about, the model guides human annotators to focus their effort where it will have the biggest impact. This targeted approach ensures that every bit of human effort provides maximum value, helping the model learn and improve far more rapidly. It’s a smart strategy that fine-tunes the model efficiently, ensuring that data-driven decision making is based on the most potent information available.

This human-AI collaboration is more than just an efficiency hack. It's a fundamental improvement to the labeling process. By combining the raw speed of AI with the precision of human oversight, teams can scale their efforts, reduce costs, and build better, more reliable models.

This integration of AI into the labeling process is a major reason the field is growing so quickly. Automated and semi-supervised techniques are making things more efficient across the board. AI-assisted labeling tools are gaining ground in industries like finance, healthcare, and automotive, where getting things right at scale is non-negotiable. You can learn more about AI’s impact on data labeling solutions and see how it's being adopted in different sectors.

Common Questions About Data Labeling

When you're first diving into machine learning, a few questions about data labeling pop up almost every single time. Sorting these out early can save you a world of headaches down the road and help you build your project on solid ground. Let's tackle some of the most common ones I hear from teams.

How Much Labeled Data Do I Need For My Model?

This is the big one, and the honest answer is: it depends. There’s no magic number. The right amount really hinges on how complex your model is, how varied your data is, and what you’re trying to accomplish.

For a simple classification task, you might get a decent baseline with just a few thousand labeled examples. But if you're building a sophisticated computer vision model for something like an autonomous vehicle, you could be looking at millions of data points, each labeled with painstaking detail.

The best way to approach this is to start small. Get a high-quality starter dataset, build a baseline model, and see how it performs. From there, you can add more data in batches and see if the performance improvements are worth the effort. This stops you from burning time and money labeling data that doesn't actually make your model smarter.

I like to think of it like learning a new subject. You don't read an entire library at once. You start with one core textbook, and then you add more specialized books as you figure out where the gaps in your knowledge are. Active learning works much the same way, helping you pick the most valuable data to label next.

Is Data Labeling The Same As Data Annotation?

Yes, they're essentially two sides of the same coin. In the world of AI, data labeling and data annotation are used interchangeably. Both terms describe the process of adding meaningful tags to raw data so that a machine learning algorithm can understand it.

You might hear "annotation" more often when people are talking about more complex tasks, like drawing intricate polygon masks on an image for segmentation. But really, it’s all just part of the same family. If you use them as synonyms, everyone in the industry will know exactly what you mean.

How Can I Measure The Quality Of Labeled Data?

You absolutely have to measure the quality of your labels—it's not optional. The industry-standard method is called Inter-Annotator Agreement (IAA). It’s a fancy term for a simple idea: have two or more people label the exact same piece of data without consulting each other. Then, you compare their work to see how consistent it is.

Another great technique is to create a gold standard dataset. This is a small, pristine set of data labeled by your absolute best experts—your source of truth. You can use this "gold" set to spot-check the work of other labelers or even to evaluate your model's performance. At a minimum, you should have a senior team member review and audit a sample of the work on a regular basis.

What Are The Biggest Data Labeling Mistakes To Avoid?

I see a few common tripwires that can really derail a project. Staying clear of these from day one is key.

- Vague Instructions: This is the number one culprit. If your guidelines are unclear or leave room for interpretation, you're guaranteed to get inconsistent, low-quality labels.

- Skipping Quality Assurance: Treating QA as an afterthought is a recipe for disaster. Without a solid review process, errors will slip through, multiply, and quietly corrupt your entire dataset.

- Ignoring Scalability: It’s easy to underestimate the sheer effort a large-scale labeling project takes. If you don’t think about how you'll handle volume from the beginning—whether in-house or with a partner—you’ll hit a wall sooner or later.

Ready to build a high-quality foundation for your AI with expert data labeling? Zilo AI provides comprehensive data annotation services with built-in quality assurance and the flexibility to scale with your needs. Learn more at Zilo Services.